Simon Frankau's blog

Ugh. I've always hated the word 'blog'. In any case, this is a chronologically ordered selection of my ramblings.

Thoughts on the Turing Test

The rise of AI Discourse means that I have discovered that I have Opinions on the Turing Test. I've gone through them a couple of times on social media, but I thought I'd record them here for posterity.

Most popular depictions and analyses of the Turing Test are either a simplification of, or a literal interpretation of, Turing's original paper. They have weaknesses that stem from failing to understand Turing, and his strengths and weaknesses.

To start with, it's a fascinating paper. It introduces a way to cut the Gordian knot of "What is intelligence?", it spends a lot of text approaching various objections to artificial intelligence that are still raised today, and it makes predictions of the complexity required to produce an AI and, very roughly, when we might expect it. It did all this little more than a year after EDSAC was running!

Indeed the questions and replies in the text are remarkably prescient of where we are with LLMs. And, reading the original paper, Turing did not see the Imitation Game as a pure thought experiment, but something that could be done one day. So why is the conversation around the current state of the Turing Test so messy?

Most people don't see the computer science context of Turing's work. In theoretical computer science, a well-known concept is Turing-completeness. This is the idea that a programming language or system is sufficiently powerful to solve the same problems as any computer system.

The canonical argument used by Turing to show that a system X is Turing-complete is that it is able to emulate other systems known to be Turing-complete. If it can emulate the other system, it can solve all the problems that system can solve, and must be at least as powerful as it.

It's not explicitly stated, but this is exactly the same argument Turing is using for the Turing Test: If a computer system is able to emulate a human, it must be as powerful (intelligent) as a human.

There are a couple of subtleties here. One is that it defines a domain of emulation: It's suggesting all of practical intelligence can be expressed through conversation. To pass as a human for the purpose of testing intelligence, the system needn't perform physical tasks, draw, listen, etc. Turing suggests that the essence of intelligence can be evaluated through a text stream alone. I think this is reasonable, but it's also a little under-discussed.

Another subtlety is what's necessary vs. sufficient to demonstrate intelligence. A criticism of the Turing Test is that it takes a human-centric definition of intelligence. I think this misses the point. If a system can emulate another intelligent system, it is intelligent. If it cannot, that tells us nothing. That doesn't mean it's not intelligent. And, so far, humans are the only example of "intelligence" we have to hand. A hyperintelligent system that thinks nothing like a human, but can emulate one if it wants, will still pass the Turing test.

"Failing the test tells us nothing" is slightly interesting to compare to the theoretical computer science case of Turing-completeness. If we can show that system Y is not able to emulate a Turing-complete system, we know it is strictly less powerful. On the other hand, if I am unable to do a perfect emulation of Einstein, it doesn't mean I'm not intelligent. It doesn't even mean I'm less intelligent than Einstein (although I am). I can't do a perfect imitation of Trump, either, but I'm pretty sure I can beat him on a bunch of intelligence metrics.

The final subtlety I want to talk about with the Turing Test, compared to Turing-completeness in theoretical computer science, is that Turing-completeness can be formally proven. We can show how to emulate one system with another. On the other hand, the Turing Test is an experiment.

Turing was a fantastic theorist, but not particularly practical. This is a man who hid his savings during World War II by burying silver bars and subsequently lost them, and committed suicide with a cyanide-laced apple. I understand he did not get on well with the more practical computer builders at Cambridge. While he engineered things, I don't see evidence of a scientific mindset, and thus he did not look at The Imitation Game as a proper scientific experiment.

This oversight has plagued the Turing Test to this day.

What would the Turing Test look like as a scientific experiment? To be fair, Turing at least has an experiment and a control, by testing both a machine and a human. The hypothesis is that some machine is intelligent. The aim is to disprove the hypothesis. If we fail to disprove the hypothesis, we haven't shown it to be true, but we have gathered evidence to improve our confidence that it might be. We can construct increasingly elaborate experiments to stress the hypothesis further and further.

All this means that the passing bar for the test should not be "a human thinks it's human", but that a set of experts, constructing increasingly elaborate sets of questions, including the feedback from previous rounds of interrogation, cannot put together evidence the machine is not human.

The naive Turing Test is clearly passed now, but it was passed decades ago with Eliza, too. By setting the bar so low, it encourages people to dismiss the actual progress over the years. It leads to conversations about how easily humans are fooled and all kinds of other distractions and confused arguments.

The scientific Turing Test has not been passed. People are able to find ways of making LLMs give distinctly non-human answers. In other words, the scientific Turing Test reflects reality. The iteration of finding increasingly complicated questions with which to distinguish humans and machines makes our progress clear - for an expert questioner, the gap between Eliza and ChatGPT is glaring, and the quality differences across generations of GPT pretty obvious.

Phrasing the Turing Test in terms of a scientific experiment has its own dangers. Focusing too heavily on the falsifiability aspect lets people claim that we can never prove that a machine is intelligent... but really that's just the same argument that you can never tell if any human you meet is intelligent. However, taken in moderation, it gives us a practical and thoughtful approach to assessing machine intelligence.

Posted 2023-04-29.

CMake is awful

CMake is awful. It's awful enough that writing about how awful CMake is is a stereotyped blog entry. It's so awful that I feel compelled to write about how awful it is, even knowing how unoriginal that is. I need catharsis.

CMake is bad at its job. It has a bad job, and it does it badly. In some ways it has many jobs, but the bit that matters is the bit that gets in your face. CMake is a dependency finder.

A decade ago, when I last used it in anger, it was a build file generator. We used it to generate build files for our project that would work on a bunch of different platforms with their various build systems. It was boring, it did the job. This is not a hard job: Your code is supposed to work together, making it do so is not so hard.

It turns out the tricky bit is building disparate stuff together: Taking random libraries etc. and gluing them into a coherent whole. CMake's real-world role is to make your dependencies work together.

It's a miserable job. This isn't even dependency management in the sense of package management: It doesn't have the authority or responsibility to own installations and make things work together. Oh no, it just has to scrape around your system, deal with what's provided, trying to find the dependencies, and staple them together.

The canonical failure mode of CMake is that you have a dependency installed on your system, and it cannot find it. You can see it there, sitting in the file system, while CMake's just refusing to see it. It's embarrassing.

No human wants to care about this. When a piece of software breaks, I want to debug it. To debug, I need to build. And to build, I need the dependencies to work. I hardly want to care about installing dependencies. Why on earth should I care about getting CMake to recognise them?

No sensible human being, tasked with making software work, wants to have to care about the details of how CMake finds things. The fundamental problem of CMake is that it makes you become an expert in something you really couldn't care less about.

CMake, along with other tools that most people use glancingly and couldn't care less about, should follow two simple rules: 1) Work 2) When you don't work, be really, really easy to debug, so we can stop breaking rule #1.

CMake breaks this rule most egregiously. Old-school Make is... actually not that bad at being diagnosable. By default it has a relatively straightforward approach, and there are decent-enough tracing flags. Make is decades old, and gets this right.

Let's compare with CMake. In an ideal world, there would be a simple, obvious flag that puts it into a diagnostic trace mode, allowing you to see what happens. If it fails, it would explain in detail how it failed and/or explain how to get more info. CMake does not do that. Instead:

- Default output is uninformative. You need to set '--trace' to see what it's up to. Ha ha. Only joking. '--trace' doesn't expand variables before logging, so you get entirely useless lists of entries like "if(NOT TARGET ${_target} )". You need '--trace-expand' to get useful information. This is positively user-hostile and gives you a good idea of the mindset involved.

- This trace only tells you what it's executing, with no real clue to telling you how it got there. Fortunately, the mechanism for finding dependencies is clear and explicit. Ha ha. Only joking. As documented, attempts to find dependencies are automagical, using at least 3 different mechanisms, and generally involving searching for magically-named files in various search paths.

- To work out what it's finding where, you need a different flag: --debug-find. Because, as I said, if you're fixing an issue you don't care about, you want to become an expert in the various different flags.

- Except... as far as a tracing tool goes, it makes the classic error of helping you understand what succeeded, not what failed. It tells you what files it found, and you have to search for the failure-shaped holes yourself. It does not tell you what it tried, so you don't really know why it didn't find your dependency.

- And none of this really helps the fact that it does not make it easy to fix the problem! What I want to do is just put the path explicitly in some simple file, have CMake believe that the thing is there, and get on with my life. As it is, the correct solution appears to be to learn the language, learn how to specify the components of a library, create a FindWhateverLibraryImLookingFor.cmake file, and then trick CMake into discovering and using said file.
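For reference, the flags mentioned in the list above can be combined into a single configure run. The following is only a rough sketch, assuming a reasonably recent CMake that understands `-S`, `-B` and `--debug-find`; the helper names and the log file are hypothetical, invented for illustration.

```python
import subprocess


def diagnostic_cmake_command(source_dir, build_dir):
    """Build the argv for a configure run with the diagnostic flags enabled."""
    return [
        "cmake",
        "-S", source_dir,   # directory containing CMakeLists.txt
        "-B", build_dir,    # out-of-source build directory
        "--trace-expand",   # trace execution with variables expanded
        "--debug-find",     # extra output from the find_* machinery
    ]


def run_diagnostic_configure(source_dir, build_dir, log_path):
    """Run the configure step, sending stdout and stderr to one log file."""
    with open(log_path, "w") as log:
        result = subprocess.run(
            diagnostic_cmake_command(source_dir, build_dir),
            stdout=log,
            stderr=subprocess.STDOUT,
        )
    return result.returncode
```

Calling `run_diagnostic_configure(".", "build", "cmake-debug.log")` would leave everything in one searchable file, which at least makes hunting for the failure-shaped holes a job for grep.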

In short, it's a user-hostile piece of software. It does not care about the use case of "This thing isn't working. How do I easily make it work?". It... feels like the kind of person who thinks C++ templates are good because they're subtle and complicated and tricky, and thus make them feel smart that they understand them. It piles up accidental complexity and assumes it's essential complexity. It's utterly awful.

In many ways, you can tell it's going to be bad when the standard usage recommendation is "mkdir build; cd build; cmake ..". Baked in, at the very first level, is that the obvious way to use it is wrong. It's a warning for all that follows.

Posted 2023-04-26.

On the Management of Socks

It should not be necessary to have to explain how to manage socks, yet here we are! I am looking at a very specific scenario, but one close to my heart: Always having a suitable matching pair available.

The secret, so often ignored, is to simply have a small number of large, readily distinguished pools of very similar socks. Similarity within pools, differing between pools. This is a long-term strategy, so let's start with the basics:

The fundamental idea is to make socks easy to match. If you buy a large number of identical socks, you can pick out any two, and you're done. Life is easy. Surely no-one could get this wrong, could they? Of course they can.

What you see here is a set of 7 Marks and Spencers pairs of socks, carefully colour-coded to maximise their incompatibility, and frustration should one sock get lost. I've even seen them describe the clear colouring as "easy to pair" in the past. No! It's easy to pair if you can pair any of them together. If you have to care about the pairing, it's not easy to pair. Grrr.

So, step 1 is to buy enough identical socks to last you for a few years, taking into account individual sock loss and wear and tear. Eventually, you will need to replace them, and it's time to bring in the long-term plan: Large pools of distinguishable socks.

The fool buys another batch of socks similar to the first pool. Now you have a pairing problem! If you don't pay attention, you will pair an old sock with a new sock, with all the disaster that this entails. Don't do this! Instead, buy another pool of socks that'll last you for years, but is readily distinguished from the old pool. Your transition is clear, and when the time comes to cull the last batch it is simple. Congratulations, you have managed your socks correctly.

There is, of course, more. You may live with people who do not believe in uniform socks. You may be given pairs of novelty socks. You are brought back down to the world of treating your socks as pets, not cattle. The key here is to separate the odd socks from the paired socks, because they have fundamentally different purposes:

- Paired unique socks can be worn as needed, without hassle. You do not need to contemplate unpaired socks, you can simply put on a full pair whenever needed. The pool is uncontaminated.

- Unpaired unique socks exist solely to be paired. Kept separately in a smaller pool, it is easier to match them up should the lost half be found, and by looking through this smaller pool you can be confident that you have not left any socks accidentally unpaired.

Thank you for coming to my TED Talk.

Posted 2023-04-09.

On the free idempotent monoids generated by a finite set of letters

Off the back of a Mastodon conversation that started with enumerating the elements of a two element idempotent rig (https://github.com/simon-frankau/two-generator-idempotent-rigs), I ended up trying to work out how to enumerate the elements of the idempotent monoid generated by three letters. Basically, this is the set of all words divided into equivalence classes over repeated substrings - so "abcXYZXYZdef" and "abcXYZdef" are the same element.

Surprisingly, despite the fact that there are infinitely many square-free words built from three letters - that is, words without repeating substrings in them - there are only a finite number of elements to the monoid. It turns out that for all sufficiently long square-free words you can introduce a repeat, take out other repeats, and end up with a shorter word!

I coded up a brute-force tool to find all the elements of the monoid generated from 3 elements, at https://github.com/simon-frankau/monoid-gen, but having read Chapter 2 of Jean Berstel and Christophe Reutenauer, Square-free words and idempotent semigroups, in Combinatorics on Words, ed. M. Lothaire, Addison-Wesley, Reading, Massachusetts, 1983, courtesy of this post, I realise it's very much simpler than that. Sufficiently so that I'll give an intuitive proof sketch here.

Notation-wise, I'll use upper-case letters for strings, lower-case letters for letters. Otherwise, it's just ASCII. My blog software is really dumb.

First, given a string AB, where B contains a subset of the letters in A, we can find a string that, massively abusing notation, I'll call B^-1 such that AB B^-1 = A. We can prove this by going a letter at a time. Say AB = AB'x (that is, the last letter of "B" is "x"). "A" contains an "x", let's say A = LxR. Then AB RB' = LxRB'x RB' = LxRB' = AB' - we have found a string to append to "remove" a letter. Do this repeatedly, and we can strip off any word, as long as the letters involved appear again somewhere earlier in the word.

By symmetry, we can find strings to prefix to "remove" prefixes.
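The letter-at-a-time construction above is mechanical enough to sketch in code. This is a rough illustration rather than anything from the linked repo: `remover` and `reduces_to` are hypothetical names, and the check works only by deleting one half of a repeated substring at a time, which is enough for the strings this construction produces.

```python
def remover(a, b):
    """Build a string X such that a + b + X equals a in the idempotent monoid.

    Requires every letter of b to appear in a, as in the argument above.
    """
    if not set(b) <= set(a):
        raise ValueError("every letter of b must appear in a")
    parts = []
    while b:
        b_rest, x = b[:-1], b[-1]
        r = a[a.index(x) + 1:]    # a = L x R, so this is R
        parts.append(r + b_rest)  # appending R B' strips the trailing x
        b = b_rest
    return "".join(parts)


def square_removals(word):
    """All words reachable by collapsing one repeated substring YY to Y."""
    results = set()
    n = len(word)
    for i in range(n):
        for k in range(1, (n - i) // 2 + 1):
            if word[i:i + k] == word[i + k:i + 2 * k]:
                results.add(word[:i + k] + word[i + 2 * k:])
    return results


def reduces_to(word, target):
    """True if repeated square removal can turn word into target."""
    seen = {word}
    stack = [word]
    while stack:
        current = stack.pop()
        if current == target:
            return True
        for shorter in square_removals(current):
            if shorter not in seen:
                seen.add(shorter)
                stack.append(shorter)
    return False
```

For example, with A = "abc" and B = "ba", `remover("abc", "ba")` gives "bcbc", and `reduces_to("abc" + "ba" + "bcbc", "abc")` is true: "abcbabcbc" collapses to "abcbc" and then to "abc".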

Given this, we can now prove that a string LMR, where L and R are the shortest left and right substrings that use all the letters in LMR, can be reduced to LR, and hence we can break all the strings down into equivalence classes based on L and R (I'm deliberately ignoring the cases where L and R overlap for simplicity - the original proof handles this fine).

Roughly, we can find "R^-1" and "(LM)^-1" (as described above) such that LMR = LR R^-1 MR = LR LR R^-1 MR = LR LMR = L (LM)^-1 LMR LMR = L (LM)^-1 LMR = LR.

This means that, rather than the exhaustive enumeration I did, I could simply generate all the left and right monoids on two-letter words, using two of the three letters, and add the third letter to get the left and right ends of the word, eliminate any overlap, and have an element. For example, choose "ab" on the left, "cbc" on the right. The left and right parts are "abc" and "acbc" respectively, there are no overlaps, so "abcacbc" is an element.
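The recipe in the previous paragraph can be written as a short recursion. This is a minimal sketch, not the code in the linked repo: the function names are made up, and overlap elimination is done by simply merging the longest suffix of the left part that is also a prefix of the right part (valid because a doubled substring collapses to a single copy).

```python
from itertools import combinations


def merge(left, right):
    """Join left and right, collapsing the longest overlap between them."""
    for k in range(min(len(left), len(right)), 0, -1):
        if left.endswith(right[:k]):
            return left + right[k:]
    return left + right


def elements_using(letters):
    """One representative word per element that uses exactly these letters."""
    letters = frozenset(letters)
    if not letters:
        return [""]
    # Left ends: an element on all-but-one letter, then the missing letter.
    lefts = [p + a for a in sorted(letters)
             for p in elements_using(letters - {a})]
    # Right ends: the missing letter, then an element on the other letters.
    rights = [b + s for b in sorted(letters)
              for s in elements_using(letters - {b})]
    return [merge(left, right) for left in lefts for right in rights]


def all_elements(alphabet):
    """Representatives for every element, including the empty word."""
    words = []
    for size in range(len(alphabet) + 1):
        for subset in combinations(sorted(alphabet), size):
            words.extend(elements_using(subset))
    return words
```

`all_elements("ab")` gives the 7 elements on two letters, and `all_elements("abc")` gives 160 words (counting the empty word), with "abcacbc" from the example above among them.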

Posted 2022-12-30.

Game Review: Dungeon Encounters (Square Enix)

Dungeon Encounters has been described as a minimalist, bare-bones RPG. There's no real plot. Graphics are limited to a few illustrations. All that's left is exploration of 100 grid-based maps furnished with nothing more than raw hex codes, and an RPG combat system.

It turns out that methodically crawling through 100 levels, bashing at each of the foes in turn to slowly level up is tedious as hell, but irresistible to the completionist streak in me. I did so, traversing all 100 levels in order (the main endgame is actually on floor 90, but there's a few post-game extras for those who are gluttons for punishment), and boy was it boring and slow. Just like a regular RPG without the bits that turn out, in retrospect, to be the good bits.

The game provides lessons about myself. I'm a mathsy kind of person, so I assumed that when I played e.g. Final Fantasy VII, I kind of enjoyed the tactical combat elements (apart from the incredibly slow animations near the end). Turns out, on further reflection, it was the plot that kept me going. I do like the challenge of an interesting puzzle, but easily clobbering baddies that are just weaker enough than me is actually very boring.

However, this is not the only way to play the game. You can sneak around enemy encounters. Many of the most tedious bits can be circumvented by grabbing items and skills from later in the game and using them. Playing strategically rather than bludgeoning through can apparently work quite effectively. Yet I didn't. I just whacked all the baddies in linear order until they were gone.

At some level, I realised the alternative was possible, and probably faster, but likely to be messier, and I'd miss things. An affront to my inner completionist. So, I get the fundamental takeaway that while getting a sense of completion can be very satisfying, and while this streak is useful to drive through big challenges to completion while applying attention to detail, I should really learn when to cut my losses.

The game? Basically not recommended. There are all kinds of other RPG-like strategy games that work like an interesting puzzle, that are much more worth playing. The combat music, classical pieces arranged for the electric guitar, rocks, though.

Posted 2021-12-07.

Reinventing tomography

Having had a bunch of CT and MRI scans made me think of something I'd been thinking about, on-and-off, for years: How does tomography actually work? You scan an object by seeing how much it absorbs from a bunch of angles, and somehow use that to reconstruct the original object's structure - how does this magic work?

The obvious way to find out would be to read up on the subject. I decided to do something a little different, and try to reinvent it without reading up the existing theory. So, that's what I did - with the results up at https://github.com/simon-frankau/rediscovering-tomography.

Reinventing areas of maths is... interesting. As well as working out how it all works in theory, making it work practically is full of fiddly tweaking - I'm very much an amateur at numerical coding - but it was a fun project. If you want to know more, go follow that link!
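To give a flavour of the reconstruction problem described above, here is a toy sketch. It is not necessarily the approach taken in the linked repo: it uses the Kaczmarz iteration (the basis of the "algebraic reconstruction technique"), and the 2x2 "object", the five rays and all the names are made up for illustration.

```python
# Pixels of a 2x2 image are numbered 0 1 / 2 3. Each "ray" lists the
# pixels it passes through: two rows, two columns and one diagonal.
RAYS = [(0, 1), (2, 3), (0, 2), (1, 3), (0, 3)]


def measure(pixels):
    """Forward model: total absorption seen along each ray."""
    return [sum(pixels[i] for i in ray) for ray in RAYS]


def reconstruct(measurements, n_pixels=4, sweeps=1000):
    """Kaczmarz iteration: nudge the estimate to agree with one ray at a time."""
    estimate = [0.0] * n_pixels
    for _ in range(sweeps):
        for ray, measured in zip(RAYS, measurements):
            error = measured - sum(estimate[i] for i in ray)
            # Spread the disagreement evenly over the pixels on this ray.
            for i in ray:
                estimate[i] += error / len(ray)
    return estimate
```

Feeding `measure([1.0, 2.0, 3.0, 4.0])` into `reconstruct` recovers the original four values to within rounding, because these five rays pin down the four pixels uniquely. Real scans have far more pixels, noisy data and awkward geometry, which is where the fiddly tweaking comes in.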

Posted 2021-10-23.

Management books vs. "no single root cause"

Lorin Hochstein made the interesting point that many management books read like morality plays - this company didn't do X, and they failed, and that makes the people running the company bad. Even ignoring the morality aspect, it's simple and reductive - when there's an incident and a postmortem, we look for a multiplicity of contributing factors, so why not the same for companies? I'm an SRE manager, but generally I enjoy management books. Isn't there a huge hole here?

First off, I hadn't read the book he quoted, but... wow, that's blame-y. There's a difference between pointing out that in a particular situation they didn't do something and that led to a bad outcome, and saying that they're therefore bad people. I think I've mostly stayed clear of that sub-genre in my reading.

Having got past the needless blame, I do recognise a lot of "single root cause" in these books. Assuming an incident management approach applies to running a company, surely any book describing a single root cause must be wrong?

Well, on the one hand, it's what the industry's set up for. These books are going to tell you success is not random, you're in control, and moreover you just need to follow the central thesis of the book for success. These people did, they're all billionaires now. These people didn't, and they went bust. I tend to find examples in management books really useful, but they're almost always reductive.

On the other hand... there's a place for tackling specific problems. While a postmortem might want to collect a dozen contributing factors, there's also room for saying "make sure you can roll back quickly" and "have good monitoring". These books are action items, not postmortems. A lot of these books do the equivalent of saying "you just need quick rollbacks and everything will be fine", but if you treat them as simplifying models that handle just one slice of reality and synthesise them together, I think there's still real value.

If management books are "known common contributing factors", what's the management theory equivalent of a postmortem? I would (weakly and with no real evidence) argue it's the case study approach used by e.g. HBS. And... the interesting thing about that is that while that is supposed to foster open discussion, the case studies are often intended to teach a specific point - to look for a particular root cause.

This brings me back to another point - is company management really like incident management? In some ways I see similarities with running production - there's a weakest link element, in that if just one area screws up, it doesn't really matter how the other departments are doing, you will have a bad time. However, in another way, I'm not so convinced. Much of incident response work is based around the idea of defense in depth. We have multiple contributing factors because no single root cause should be allowed to break things.

I'm not sure if this applies to a business. How do you build defense in depth around a huge investment decision? How can you apply the swiss cheese model to business success when your strategy requires extreme focus? The danger of a successful idea is its over-application. Maybe SRE-like tenets should not be applied to businesses as a whole. We should be thoughtful about what ideas should be carried over, how things can be adapted. Blameless postmortems might be interesting in other settings, for example. As usual, blanket application of a methodology is no substitute for careful thought.

Posted 2021-09-19.

Solving nonograms

In my logic-puzzle solving, nonograms (aka Picross) have recently eclipsed hashiwokakero as my go-to fairly-mindless puzzle-solving experience.

As a low-effort, mind-clearing activity they're great, but pretty mechanistic - perfect for automation (not that it stops me solving them manually!). So, of course I wrote a solver. Yet again, it's an excuse for practising my Rust, which in turn is a better use of my time than solving the puzzles by hand, even if it requires a bit more concentration.

It's pretty straightforward, and the result is up on GitHub at https://github.com/simon-frankau/nonogram-solver.
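As an illustration of how mechanistic the solving is, here is a sketch of one common single-line deduction: list every way the clues fit in a line, and keep the cells on which all the options agree. This is an assumption about technique, not a description of the linked solver, and the function names are hypothetical. It also ignores cells already known from other lines.

```python
def placements(clues, length):
    """Yield every way to lay the runs in `clues` into a line of `length` cells."""
    if not clues:
        yield [0] * length
        return
    first, rest = clues[0], clues[1:]
    # Room needed by the remaining runs, each preceded by a one-cell gap.
    tail = sum(rest) + len(rest)
    for start in range(length - first - tail + 1):
        head = [0] * start + [1] * first
        if rest:
            head.append(0)  # mandatory gap before the next run
        for remainder in placements(rest, length - len(head)):
            yield head + remainder


def forced_cells(clues, length):
    """For each cell: 1 or 0 if every placement agrees, otherwise None."""
    options = list(placements(clues, length))
    return [column[0] if len(set(column)) == 1 else None
            for column in zip(*options)]
```

For a clue of 3 in a line of 5 cells, `forced_cells([3], 5)` returns `[None, None, 1, None, None]`: only the middle cell is filled in every possible placement.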

Posted 2021-08-14.

First Direct demonstrate how not to do a switchover

I bank with First Direct, mostly because I used to have a mortgage with them. I guess I should revisit this. Anyway, they're performing a switchover from Visa to Mastercard. They sent me a swish new card, along with a letter telling me how easy it would be.

I tried to use the new card, in a rather nice restaurant. For whatever reason, it didn't go through. I tried my old card, it also didn't go through. I did manage to pay in the end, so I didn't have to do lots of dish-washing, but it was pretty painful.

Thereafter, I tried again in a supermarket. Both old and new declined. I phoned up First Direct, and apparently there's no fraud stop, I've just been unlucky (?!). The bonus is that as soon as the new card is used, the old card is blocked, so any attempt to use the old card is mysteriously declined, despite me thinking I had a grace period.

My old card being blocked is frustrating because it's not clear the new card is actually working. The advice from the fraud person at First Direct is that I go and explicitly use chip-and-pin at some shop to activate it. The only problems are a) I thought I'd tried that, b) I've been having health issues and don't really fancy going to the shops unnecessarily, and c) there's not much I actually want to buy, and I resent the idea of just spending money to activate a card.

Still, with the old card blocked and the new card yet to be activated, there's no real issue, is there? It's not like I've got the old card used for stuff in such a way that a sudden surprise block is going to cause problems, is it? Oh, hang on, I am using my debit card for a whole bunch of online things, and got my first "Uh, your regular card payment was declined" mail today. I've just signed myself up for Bulk Account Details Update Time. Hurrah.

I didn't foresee any of this, as the nice letter accompanying the card told me it'd be easy. In retrospect, it is easy, just for First Direct, not me. They've done the absolute minimum in this transition, and it sucks.

What would an effective transition look like? Make it easy for the customers. One thing you learn when running large systems, is that unless you actively hate your customers/users, you should be ready for dual-running. It makes you more resilient to failure, and it makes life easier for the user. So, give your customers a grace period of, say, just over a month from the new card being used successfully to the old card being deactivated. Maybe don't deactivate the old card when you've only seen the new card have a voided transaction?

For online transactions from stored details, the ideal customer-centric solution involves no work: When a transaction is declined, the error message can be of the form "Card no longer valid, switch to these details instead", and systems could auto-update with the new card automatically (informing the customer as they do so). Of course, we don't live in such a world, as that would be convenient.

Instead, we have to assume that customers will be updating a bunch of details. If you want to make the transition as easy as possible, create a report beforehand of what's likely to need updating, for each customer. They've presumably got "customer not present" details for the card transaction, and repeat online transactions are not likely to have you entering in the card number each time.

Then, during the transition period after the new card's been activated, regularly create a report of when you used the old card, which'll remind you not to use it when physically present, and prompt you to update details on the auto-payment systems. This should all be integrated into the app. After all, this is an online bank, right? App integration of significant changes, like the provider of debit card services, should be bread-and-butter.

But no, here we are. Maybe I need to investigate whether the new digital banks are any better.

Posted 2021-08-10.

Playing with SIMMs

I've been playing about with SIMMs, making them do stupid things. No, not Sims, SIMMs.

After something of a hiatus, I've finished (for now) another Teensy-based project, to read and write to a 30-pin SIMM from the '90s. The background is that I'm interested in eventually building a 68K Mac compatible, but it's a project I've never found the time for. However, warm-up experiments, like playing with the SIMMs I'll use, are very doable. :)

All the code and notes are up on GitHub, but really what I've been doing is investigating how quickly SIMM data decays if you don't run the required refresh cycle. The summary of what I found was:

- While the required refresh cycle is 128ms, most cells can hold the charge for much longer. Each cell has its own decay characteristics, with the effective RC value being roughly log-normal, as far as I can tell. The median cell takes 3 minutes to decay at room temperature.

- I'd read online that there's a strong temperature component to the decay rate. I'd tested the SIMM at 20-40 degrees, and could see a decent kink up in decay rate at 40 degrees, hinting that decay accelerates with temperature. Sadly, lacking decent tooling to generate stable temperatures beyond 40 degrees, this side is rather more qualitative than quantitative.
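To make the log-normal observation above concrete, here is a back-of-envelope sketch of the fraction of cells expected to have decayed after a given time. It assumes each cell's decay time is log-normally distributed with the 3 minute median quoted above; the spread `sigma` is a made-up placeholder, not a measured value, and the function name is hypothetical.

```python
import math


def fraction_decayed(t_seconds, median_seconds=180.0, sigma=1.0):
    """Log-normal CDF: share of cells whose decay time is at most t_seconds.

    sigma is the standard deviation of log(decay time); 1.0 is a guess.
    """
    if t_seconds <= 0:
        return 0.0
    z = math.log(t_seconds / median_seconds) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
```

By construction `fraction_decayed(180)` is 0.5, and under these assumed parameters the fraction lost within the 128ms refresh window is vanishingly small, which fits with most cells holding their charge far longer than the required refresh cycle.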

In any case, fun, fun, fun! Now to find the next toy microcontroller project - probably a good opportunity to get that RPi Pico doing something interesting....

Posted 2021-07-31.

Eigenvalues of random matrices

Recently, Twitter introduced me to the Circular Law, which is extremely cool. Not only do the (complex) eigenvalues of a random matrix end up distributed inside a circle, they do so quite uniformly due to a "repulsion" effect.

I wondered a little bit about what this repulsion looks like, and wrote some code to visualise it - https://github.com/simon-frankau/eigenvalues. I won't bother with an image here, you can click through to see what it looks like, but I found it a fun little project to do some basic numerical stuff. The "nalgebra" and "plotters" crates were a pleasant surprise.

Posted 2021-07-18.

Teleological incident postmortems

I made a Tweet I'm quite proud of, that got double-digit likes! ;) It goes something like this:

Teleological incident postmortem: "The root cause of this incident was the need for this system to be more reliable in the future."

280 characters doesn't do nuance, so I thought I'd write a little more. Even if, to be frank, there's not a lot of nuance.

Despite my advancing years, I only just learnt about teleology. My knowledge of ancient Greek thought is very limited, but I've started working my way through Bertrand Russell's History of Western Philosophy. It's a bit of a doorstop, but surprisingly readable with plenty of snark. I'm sure I'll do a review in due course. Anyway, the idea of teleology is (roughly) to answer the question "Why?" in terms of what it causes in the future. It's a bit like cause and effect, except with the event being the cause, not the effect.

I find it a startling way to think. I'm so used to "Why?" being in terms of cause and effect, that this framing, with its implicit hint of fate, is surprisingly alien. Why on earth would I apply it to incident response, beyond "I have a new hammer, let's hammer everything!"?

Firstly, I think it's an empowering framing. While I think it's not the way it worked for the ancient Greeks, it's good to be able to say "When we look back, what do we want to say this event led to? Let's make it that way!". It brings the right mindset to reviewing an incident, pushing it in the direction of making things better, rather than dwelling too much on the past.

Secondly, thinking about this slightly alien, human-story-centred approach to "Why?" can help remind us of the assumptions baked into our normal "cause and effect" approach to "Why?". Cause-and-effect covers a multitude of attitudes. It can be making up a story as to why a thing happened, or scientifically-grounded causation. The latter works best in situations with repeatable experiments, where the alternatives can be tested. Incident analysis, where we don't have access to the universe where the outage didn't happen, tends to the rather human story-telling end of things, and we should think about that.

In some contexts, sometimes "Why?" makes no sense. It's just trying to impose human values of meaning onto systems that aren't like that. Some events are random, some things are just the way they are. Not everything is about causation, and "Why not?" is just as (in)valid as "Why?". For incidents with human-built systems, this is less of a concern - if an outage happened due to bad luck or coincidences, you can still ask "Why didn't we have another layer to defend against this?". We should still proceed with care.

The lesson to take away, I think, is that there are different kinds of "Why?" for different purposes. It's a fun route back to the same old story. "Who did this?" is a bad "Why?" for improving the system. "What was the trigger?" is a bad "Why?". "What was the (singular) root cause?" is a bad "Why?". "What are all the key things that, had they been different, would have prevented or ameliorated this outage?" is perhaps a better "Why?".

In the end, our "Why?"s need to be driven by our ultimate goal for a postmortem, which is to improve the reliability of the system. Perhaps, counter to all my intuition, the teleological "Why?" is the actual best "Why?" in this situation!

Posted 2021-07-14.

Game Review: Thimbleweed Park

Hot on the heels of picking up Fez, I decided to play Thimbleweed Park, a graphical adventure in the style of SCUMM games like Monkey Island, which is not surprising as it's written by the author of those games, Ron Gilbert. I'd heard about it a while ago, got hooked in by the freebie mini-game starring the character of Delores from the game, and, yep, ended up buying it.

It's weird to see where we are with such games. It's very pixel-arty, with various bits drawn deliberately below the native resolution, and just composited together, so you have big pixels on top of small pixels. To show off Advanced Technology you get to control five (!) different characters throughout the story, often making them work together. It's got the old-school limited set of verbs, and manages to capture the feeling of those old games while still taking advantage of the modern hardware. There aren't compromises on the sound with Adlib-style music, though. Strangely, one of the things that hooked me in is the somewhat melancholy main theme!

This is very clearly the product of a Kickstarter. The phone book is full of backers who you can phone for messages, and the library is well stocked with a vast number of books. Strangely it kind of adds a chunk of depth to the world, and can be a great big distraction if you've got too much time on your hands.

Anyway, the game. It's nice to see there's an easy and hard mode. Obviously I went straight for the hard mode. ;) You could tell how that works through some of the puzzles being multi-part (nearly, but... just one more step!) where I assume the easy mode would just declare something done.

Speaking of puzzles, they were mostly pretty reasonable. If you can keep making progress in the game, it gives you faith that the puzzles are fair, and not just "try to imagine what the designer was thinking, even if it's not reasonable" or "combine all possible nouns and verbs until something happens". The only time I lost that was when I couldn't get into the factory, and it turned out it was blocked on (spoiler!) finding the pizza van, even though there's no actual logical dependency. I ended up taking a hint even if I think it wasn't strictly necessary, through frustration at that point.

This is a bit of a shame, as mostly the plot/puzzle motivation is pretty clear - you aren't wandering around trying random things, but are specifically trying to solve particular problems to advance the plot. This is pleasantly aided by giving each character a "To do" list. The only weakness is that sometimes you'll be given a puzzle you can't solve yet, and it's not obvious you can't, but mostly you'll have other problems to solve and not get stuck. Of course, I did get wedged for a while as I failed to mouse over a few pixels representing a key item, but that's how these games go!

Speaking of which, it's a game that's more than willing to break the fourth wall, as well as commenting on adventure gaming. Amusingly it takes a dig at Sierra adventure games for all their pointless deaths, reassures you it wouldn't do such a thing, and then chucks in one or two possible "game over" scenarios. Admittedly, not in a frustrating way!

(As an aside, my first PC adventure game was Space Quest III, and it was fun to later realise that constant unpredictable and surprising deaths are not actually necessary. That got replaced by the frustration of never quite getting my sound card configuration right to get the audio voices for Day of the Tentacle working (such a good game). Man, that was annoying.)

The ending is a bit meta, and quite frankly I also thought it was a bit meh. Having said that, I never really got on with the ending of Monkey Island 2, so what's new? :) However, the destination is not the journey, and I surprised myself with how much I enjoyed the game as a whole. Highly recommended, if you like that sort of thing.

Posted 2021-06-21.

Game Review: Fez

Fez is a Famous Indie Game, and I bought it ages ago during a sale and never played it. My current medical leave has given me a chance to catch up a bit, and I've finally played it.

In my limited indie gaming vocabulary, I see elements of Undertale, The Witness and Monument Valley. It has the meta elements of Undertale, it has the layers of puzzles of The Witness, and it has the perspective puzzles of Monument Valley. In all cases, though, I think it is a weaker game than those I'm comparing it with.

On the surface, the closest comparison is with Monument Valley. Fez's vocabulary of puzzles is far more limited than that of Monument Valley, making that part of Fez a somewhat simple and even unsatisfying puzzle platformer, despite the neat rotation mechanic, and cutesy graphics and sound.

However, Fez is two games in one. It's superficially a simple platformer, bright and friendly and easy. However, there's also a second level, of cryptographic-style puzzles. That level is... internet aware. Some of these puzzles are just horrible, because they're a challenge for the whole audience, not just you, and I guess spoilers are available if you want to complete the unreasonable puzzles?

I don't like this. I want a game that is a reasonable personal challenge to me, not a paean to internet sleuthing. The way these levels are mixed together is also jarring. There will be levels and puzzles that you have no chance of solving when you first see them, but this is not obvious when you encounter them, so you end up wasting time before realising you need to give up for now. This is particularly frustrating when the cutesy platformer puzzles are mixed with the secondary puzzles. Compare this to The Witness, where the layering is distinct and structured and... very playable. Discoveries there are enjoyable and surprising, not annoying and disappointing.

I think the epitome of this, including the frankly lazy cross-platform translation, is the tuning fork puzzles. I'm going to spoil them, and I don't care because they're rubbish. On the Xbox (?) you'd see a tuning fork, and you'd get a left-right haptic sequence on your controller that you'd parrot back to unlock a secret. On the Mac, this has been translated to a left-right sequence of sounds. Which is completely non-obvious unless you're wearing headphones. The stereo effect on the built-in speakers is... just not there. A painfully obvious puzzle becomes obscure, simply through carelessness.

It's a wilfully annoying game. The clock puzzle makes that abundantly clear. It's a challenge to the players, but more in terms of what they'll put up with in the name of games, rather than in terms of an enjoyable gaming experience. Perhaps this is art, challenging preconceived notions of what a game is, but I'd prefer something enjoyable and engaging.

Posted 2021-06-15.

Thot leadership: A tale of two^Wthree outages

I'm useless at thought leadership. I am fundamentally incapable of getting my hot takes out before everyone else. Indeed, I only tend to get my thoughts down once everyone else has got bored and wandered off. That's what I'm doing here. I'm not unduly bothered by this; hopefully there's room to move on to more durable truths that stay relevant...

The particular outages that have been sticking in my mind are the Salesforce DNS outage and the Fastly config push outage. They're interesting to compare and contrast...

Salesforce DNS outage

Rather intriguingly, the outage report has been rewritten to remove any reference to a single engineer receiving consequences for the actions they took, but the initial draft... had that. To be clear, the first iteration I saw did not place that as the sole issue - it was mentioned with things like poor automation - but it certainly struck a chord.

A typical Twitter view was that anything like that is beyond the pale and a sign of an irrevocably broken culture. And I just can't quite get on-board with that. I view all outages as systemic issues, but that's not incompatible with having someone being negligent or even malicious. That is a systemic issue - why did you hire this person? Why did you not deal with it earlier? Why aren't your systems resilient against this? But it's also a people issue - you don't just go "Yeah, sure, you're negligent, but that's not your fault, we shouldn't have hired you. It's on us.".

To be clear, almost certainly we do have a screwed-up culture here, not One Bad Apple. I'm being pedantic because I think it's useful to avoid lazy thinking. How would you know the difference between "one bad engineer" and widespread issues? Well, the main thing is whether their pattern of behaviour is normal or not. This emergency change required approval, and was apparently inappropriately approved. Did that happen often? Are there consequences for the approvers? Canarying and staged rollouts were supposed to happen, but didn't - was that unusual?

I guess what I don't like about "blaming the engineer is bad" is that the opposite is not the real fix. You can avoid blaming the engineer and still make no progress. You need to understand the context, the norms, the reasons why alleged processes weren't followed and checks didn't work. The thing that really made me feel bad about the Salesforce outage write-up is that it had no answers to those questions, and moreover didn't even ask them. Take out the engineer-blaming, and it's still a concerning write-up.

As well as culture issues, this was, at heart, a "should have had better automation, with canarying and incremental rollouts" outage, which I think makes a nice comparison with the Fastly outage. So, let's move on.

Fastly config push outage

Despite being very short, I liked the Fastly write-up. For one, it didn't focus on the trigger, but on what happened. Buggy software was rolled out, and then a customer config change triggered it. It sounds simple, but so many incidents start with the immediate trigger (preferably "human error"), and are instantly wrong-footed in terms of managing the systemic issues. As it is, Fastly's response looks systemic.

So far, so boring. What was interesting to me was a Twitter conversation I had while the issue was still poorly understood. The assumption was that this was another operational config push gone wrong, not a customer config push. My correspondent asserted: 1) They wanted a write-up explaining how whoever was doing that operational work screwed up, and 2) They wanted proper multi-stage rollouts, with a human in the loop between steps, to prevent global failure in the future. I found this interesting, because there were little echoes of Salesforce.

(My correspondent may disagree with how I'm portraying their views. Oh well. Pretend I'm making up a straw person to argue with, then! :)

Leading with "what the human operator did wrong" is, as I said above, dangerous to systemic thinking that will actually fix the system. Indeed, it's bad enough that people assumed that a config-push outage was an operational error, rather than customer control-path failure. Assuming it's human operator error is as bad as saying "It's always DNS", except it's not even a (misinformed, tedious) joke. Ok, in Salesforce's case it was DNS. *sigh*

The other point, about human inspection of multi-stage rollouts, is... a lot more subtle. If you don't trust your rollouts, having a human in the loop and watching sounds good. In practice, it doesn't scale, and it sucks. Humans make mistakes; good automation is much more reliable. So, any decent system will have automated checks anyway. What's the human doing? Watching the machine to make sure its checks are working correctly. That's the approach behind Tesla's autopilot, and people have died because of that.

Get good at trusting your automation. We don't put human checks on the business path - no human is canarying the rollouts of customer-driven configuration. To say that the operational automation needs a human watching it means we're just not holding it to the same standard of software engineering as the application, and that is how you get outages. You don't want to stick humans in the loop by choice - they are just so darned unreliable. Look at all those outages caused by operator error! So, adding human vigilance is a sign of some underlying cognitive dissonance.
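To make "automated checks between stages" a bit more concrete, here's a minimal sketch of a staged rollout with no human in the loop. Everything in it is an assumption for illustration: the function name, the stage names and the deploy/healthy/rollback callbacks are all made up, and a real system would have far richer health signals.

```python
def staged_rollout(stages, deploy, healthy, rollback):
    """Deploy stage by stage; on the first unhealthy stage, undo everything.

    `deploy`, `healthy` and `rollback` are hypothetical callbacks that take
    a stage name. Returns (True, None) on success, or (False, failed_stage).
    """
    done = []
    for stage in stages:
        deploy(stage)
        done.append(stage)
        if not healthy(stage):
            # Automated check failed: roll back in reverse order and stop.
            for finished in reversed(done):
                rollback(finished)
            return False, stage
    return True, None


# Simulated run: the (made-up) "region-a" stage reports unhealthy.
log = []
ok, failed_at = staged_rollout(
    ["canary", "region-a", "global"],
    deploy=lambda s: log.append(("deploy", s)),
    healthy=lambda s: s != "region-a",
    rollback=lambda s: log.append(("rollback", s)),
)
```

In this simulated run the "global" stage is never touched: the failure is caught and unwound at "region-a" by the same code path every time, which is the property you'd otherwise be asking a bored human to provide.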

Where does this concern about automation come from? Well... *sad face*... there are valid concerns, which brings me on to the third outage, which I never intended to cover when I started writing this, but it's so informative:

Google's June 2019 outage

This outage is a case of automation taking down a large service in a very visible way. Automation makes it possible to do so exceedingly efficiently (and surprisingly!). Is full automation bad? Do we need humans in the loop?

One of the things about automation is it enables scaling. It enables complexity. If every production change were done by hand, we'd have a huge error rate and any large distributed change would be overwhelmed by the overhead of coordinating people. Replace that with robots, and you can keep scaling. You can build a really complicated system, and it'll function (most of the time). If you read the write-up, this is clearly a mega-complex automation system, with many layers and... it got too complex.

People might take away the lesson that automation is dangerous. They'll be tempted to put the much less efficient, much less safe humans back in the loop, and stop treating the problem like software engineering. Don't do that. Trust automation, but build automation that you can trust. Code lets complexity quietly pile up in a way that a human bureaucracy would immediately spot. It's a horrible temptation. Keep the automation simple and clear. Build it so that you can trust it.

Posted 2021-06-14.

On configs and general-purpose languages

Sometimes you'll read something that's clear, coherent, thoughtful and utterly at odds with your experience. That's just happened to me. In particular, it's this article on using a general-purpose programming language to specify the structure of your system. Well, "specify the structure of your system" is probably incorrect, since there's no hard line between the bit about system shape and the operations you perform, but that's roughly the gist? Anyway, the idea is to use general-purpose code to configure production.

As usual, bear in mind that I'm arguing against what I read, which may not be what was written. Interpretation is fun.

My TL;DR is that any time I've seen a general-purpose language used for something that doesn't need it, at scale it will turn into a horror such that you end up Googling "The Office no meme". The freedom gets abused. I'm going to spend the rest of this post trying to add some subtlety to that viewpoint....

My Fun With DSLs (TM)

First, let's introduce DSLs...

Domain-Specific Languages (DSLs) are languages cooked up for a particular purpose. They should look like and feel like a general-purpose programming language, but they're specialised for a particular task.

A DSL can be Turing-complete - it can be set up so that any program you want to write can be written in that language, and you cannot, in general, tell if a given DSL program will terminate - but for most tasks you don't need that power and it's a pain to work with, so you'll make your DSL not Turing-complete. You can still have conditionals and loops, for example, but the loops must be bounded (kinda primitive recursive-level power).
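As a rough illustration of "conditionals and loops, but bounded", here's a toy interpreter for a made-up expression language. The tuple-based syntax and the names (evaluate, "repeat" and so on) are purely hypothetical. The point is that the loop count is evaluated once, before the loop starts, and there is no recursion or unbounded "while", so every program terminates.

```python
def evaluate(expr, env):
    """Evaluate a toy DSL expression. Programs are nested tuples."""
    if isinstance(expr, (int, bool)):
        return expr                      # literal
    if isinstance(expr, str):
        return env[expr]                 # variable lookup
    op = expr[0]
    if op == "add":
        return evaluate(expr[1], env) + evaluate(expr[2], env)
    if op == "mul":
        return evaluate(expr[1], env) * evaluate(expr[2], env)
    if op == "less":
        return evaluate(expr[1], env) < evaluate(expr[2], env)
    if op == "if":
        branch = expr[2] if evaluate(expr[1], env) else expr[3]
        return evaluate(branch, env)
    if op == "repeat":
        # ("repeat", count, var, init, body): the count is fixed up front,
        # so the body cannot make the loop run any longer.
        _, count, var, init, body = expr
        times = evaluate(count, env)
        acc = evaluate(init, env)
        for _ in range(times):
            acc = evaluate(body, {**env, var: acc})
        return acc
    raise ValueError(f"unknown operation: {op!r}")


# Double 1 ten times.
doubled = evaluate(("repeat", 10, "x", 1, ("mul", "x", 2)), {})
```

Here `doubled` comes out as 1024. There's no way to write a program in this language that spins forever, which is exactly the power you're giving up, and usually don't miss.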

I've seen DSLs used to great effect throughout my career. I spent a bunch of time in banking, and used them for specifying exotic equity derivative payouts and real-time trading algorithms (which are extremely different uses, even if the words mean nothing to you), as well as for configuring infrastructure. My PhD thesis was also based on a DSL, but I gotta say, looking back, it was more an exercise in how not to do it!

In general, I've found DSLs to work really well. I've found that when people break the box open and shovel in general-purpose language behaviour, things go really badly.

Note that I'm not against using general-purpose languages to write your DSL code in. Embedded DSLs, where you write your DSL within the syntax of another language, are a quick and easy way to bootstrap a DSL, and can give you a lot of mileage before you hit any problems. The important thing to note, though, is that you need to be clear about the DSL boundaries, and never leak into full general-purpose language functionality.
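A minimal sketch of what I mean by an embedded DSL with a clear boundary, using Python as the host. The Service and Deployment types and the web_stack description are invented for illustration. Note that Python won't enforce the boundary for you: the discipline of building descriptions only from the DSL vocabulary is a convention here, which is exactly where embedded DSLs tend to leak.

```python
from dataclasses import dataclass, asdict


# DSL vocabulary: the only things a description may be built from.
@dataclass(frozen=True)
class Service:
    name: str
    replicas: int
    machine_type: str = "small"


@dataclass(frozen=True)
class Deployment:
    region: str
    services: tuple


# A description written in the embedded DSL: no I/O, no side effects,
# just parameters in and an immutable data structure out.
def web_stack(region, replicas):
    return Deployment(
        region=region,
        services=(
            Service("frontend", replicas),
            Service("backend", replicas * 2, machine_type="large"),
        ),
    )


# Host-language side: turn a description into plain, fully-expanded data.
def expand(deployment):
    return asdict(deployment)
```

Calling `expand(web_stack("eu", 2))` gives you a plain dictionary you can diff, print or hand to whatever does the actuation, and nothing has been deployed by constructing it.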

Terminology

The linked article uses a rather personal naming system. The ideas embedded in it seem to closely, but not necessarily precisely, align with concepts I have, so rather than risk confusing ideas, I'm going to use my own personal naming scheme, and suggest alignments. My stack-of-abstractions looks like this:

- Data: Roughly akin to "config", it's a data structure in some form, not too bothered about the details. It represents a "fully-expanded" view of the world.

- Domain-specific language: See above! A constrained language, contains some features of a programming language. Roughly aligns with "code", but not necessarily Turing-powerful.

- General-purpose programming languages: Go and stuff. :) Full Turing power, you can put whatever you like in there. Aligns with "software".

Why DSLs are awesome

Roughly, the article is "code sucks, use software", and my response is "general-purpose sucks, use DSLs". So, let's put forward my position...

The reason I like DSLs over general-purpose languages can be summarised as "If you give people a general-purpose language, they will use its full power, and this will screw you over." Breaking this down, we have:

- Pure functional behaviour: The DSL can be constrained to have extremely well-defined, consistent behaviour through well-known interfaces. Your general-purpose code can just go off and do whatever it likes. Good luck avoiding surprises in large systems, because people will make use of that.

- Separation of "what" and "how": Since the DSL is constrained, you are forced to specify the "what" in the DSL, and all the details of "how" go into the DSL implementation. You're forced to have some structure to your system.

- Analysability: This enforced structuring simplifies analysis. A decent DSL is easy to statically analyse. Since it's known to terminate, most "analyses" consist of "running" the program with a particular interpretation, and collecting the results, and you can be confident that it's not going to hit some general-purpose code that's going to take down prod or whatever, when all you wanted to do is calculate how many machines this config would require.

In practice, the main "analysis" that you use on the DSL is "expand out a fully-unfolded data structure/config", but it gives you the option to do better than that. And I always want the option to see the fully-unfolded config, as it will be used downstream, without actually performing any operations, as that's an absolute baseline for observability. And a badly-done general-purpose-programming-language-based system won't give you that.
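To sketch the "run the program with a particular interpretation" idea: below, the same made-up config description is given two interpretations, one that unfolds it into flat data and one that just counts machines. The node kinds ("service", "replicate", "group") and both function names are hypothetical.

```python
# Hypothetical config DSL, as nested tuples:
#   ("service", name, machines)
#   ("replicate", regions, child)   one copy of child per region
#   ("group", [children])

def expand(node, region="global"):
    """Interpretation 1: the fully-unfolded config, as a flat list of dicts."""
    kind = node[0]
    if kind == "service":
        _, name, machines = node
        return [{"region": region, "name": name, "machines": machines}]
    if kind == "replicate":
        _, regions, child = node
        return [item for r in regions for item in expand(child, r)]
    if kind == "group":
        return [item for child in node[1] for item in expand(child, region)]
    raise ValueError(f"unknown node kind: {kind!r}")


def machine_count(node):
    """Interpretation 2: how many machines would this config need?"""
    kind = node[0]
    if kind == "service":
        return node[2]
    if kind == "replicate":
        return len(node[1]) * machine_count(node[2])
    if kind == "group":
        return sum(machine_count(child) for child in node[1])
    raise ValueError(f"unknown node kind: {kind!r}")


program = ("group", [
    ("service", "dns", 2),
    ("replicate", ["eu", "us"], ("group", [
        ("service", "web", 3),
        ("service", "db", 1),
    ])),
])
total = machine_count(program)
```

Neither interpretation can do anything other than walk the description, so asking "how many machines?" (10, for this example) carries no risk of side effects. That guarantee is what goes missing once arbitrary general-purpose code can sit inside the description.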

DSLs are great, code sucks

Much of what I read in the article rails against the current state of tooling. All the YAML. Templating. Crappy tooling. Code as config/data with a thin coat of paint.

I think I've been really lucky, in that my career has kept me away from the "state of the art" of open source infra tools, and has let me play around with decent DSLs, supported by the people who use them. I've not had that pain.

If you have crappy "code" systems, I can see the temptation to move to "software" systems. I've seen people give in to that temptation, and as things scale up, I've seen them regret it. General-purpose languages are harder to reason about than DSLs, and we don't need more complexity. DSLs are an investment in keeping things simple, because if you're operating large-scale distributed systems complexity is a complete reliability killer.

My take is that if infrastructure as code sucks, we should make it not suck, not move to general-purpose languages. I get the impression that a lot of the tooling is built by infra people without understanding of programming language design, so we end up with bad tools. The proposal is to jump to general-purpose languages to steal those lessons and tools, but that's not the lesson I want to take away. Which brings me on to...

The infra engineer inferiority complex

I found the article's idea of "software engineers" vs. "infrastructure engineers" very revealing. I could be reading way too much into it, as someone with a strong software background who's spent some time now in infra, but it feels like the infra inferiority complex.

The message I'm getting is "Infra engineers write code in these crappy tools, while proper software engineers write in proper languages like Go. Infra engineers should be like software engineers.". I wholeheartedly agree, but draw a different conclusion.

Proper Software Engineering throws in tonnes of abstractions. It builds DSLs. Look at Unix: You want to manipulate text? C sucks for that, let's build awk and sed and stuff. The software engineer approach is DSLs, but with real ownership - tools built by those who use the tools. They treat it like a real language, and build out the tooling so that it's not a joke to use. They don't just create a better string manipulation library for C.

I think the issue is the ownership. Right now, the "code" end is for infra engineers, and the tooling that actuates it is "software", usually part of some OSS project, and they're viewed as somewhat distinct, and in the end the infra people are just writing some templated config.

This is not how it should be. "Infra engineers" should have the same software engineering skills as "software engineers", and hence engineer software similarly. They should own the system end to end - both the config/what and the actuation/how parts - but still respect abstractions. "Software engineers" do not (ahem, should not) mix config into their source tree, even if they can. Infra engineers should be just as good at abstracting.

Back to Embedded DSLs

Is the article really suggesting something I totally hate? I can't be sure, but I think so. The "func MyApplication" example code is pretty much an embedded DSL - a data structure being built within a general-purpose language can be clearly distinguished from the embedding language. This does not scare me.

On the other hand, the linked pull request is clearly structured in a way that mixes deployment behaviour with parameters, breaking all the abstractions I'm such a fan of. So... yeah, I think I don't like this.

What now?

What now? I'm not sure. I'm sympathetic to existing tooling sucking badly. I really don't like the proposed solution. I've had a go at explaining why I dislike it, I have no idea if I've got that across or not. I don't have the time or energy to propose a better alternative, and TBH I don't know the OSS tools anything like well enough to make a sensible suggestion. All I can say is "if this is a proposed improvement, I think the starting point must be really bad.".

Or... maybe I lie. I do have strong opinions of my own. I like declarative systems, with reconciliation-based actuation. I like DSLs for those declarative systems, static-analysis-based tooling to leverage those descriptions, and strong end-to-end ownership of the full system by infrastructure engineers so that infrastructure management is truly treated as software engineering. Alas, I still lack the time and energy to act on those opinions.
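For what it's worth, here's roughly what I mean by declarative plus reconciliation-based actuation, as a toy sketch. The state model (a dict from service name to replica count) and the plan/apply names are assumptions for illustration, not any particular tool's API.

```python
def plan(desired, actual):
    """Work out the actions needed to move `actual` towards `desired`.

    Both arguments map a service name to a replica count. Nothing is
    changed here; the result is just a list of actions to inspect or run.
    """
    actions = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name, desired[name]))
        elif actual[name] != desired[name]:
            actions.append(("scale", name, desired[name]))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(actions, actual):
    """Simulated actuation: return the state after running the actions."""
    state = dict(actual)
    for action in actions:
        if action[0] == "delete":
            state.pop(action[1])
        else:
            state[action[1]] = action[2]
    return state


desired = {"web": 3, "db": 1}
actual = {"web": 2, "cache": 1}
actions = plan(desired, actual)
converged = apply(actions, actual)
```

The declarative description says nothing about how to get there. The reconciler computes the difference, and running it again once the states match produces an empty plan, which makes it safe to run in a loop.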

In any case, that article really got me thinking about config for the first time in a while.

Posted 2021-06-14.

The Worst Books I've Read

Something I've been thinking about writing, pretty much for years, is a little about the very worst books I've read. I've done book reviews for years on end, and I rarely slate books. I'm also something of a completionist, so once I've started a book, I tend not to give up. After all, it might get better, right? This is almost never the case.

To start with, I'm not a fan of Kurt Vonnegut. I don't get why people like him so much. So it goes. Kilgore Trout, his fictional unsuccessful sci-fi author, seems to just be an outlet for bad sci-fi ideas, and we don't need more of those, intermediated or not. I acknowledge that Vonnegut played with ideas and tried various innovative things... they just all fell flat with me.

While I'm at it, to show I'm not just down on modern authors, I really don't rate Hardy. I don't know if it's the writing or the distance of time, but Tess of the d'Urbervilles left me nothing but bored.

Having said that, none of Vonnegut's or Hardy's books make my top three. Notably, my top three are all doorstops. A short bad book is one thing, but trawling through a long, tedious work is so much worse. They're also books that think highly of themselves, or at the very least have staunch supporters:

#3: Stranger in a Strange Land by Robert Heinlein

A lot of people think Heinlein is a classic sci-fi author. I read SISL and never read anything else by him. Judging by the descriptions of his other work, it varies and SISL is not fully representative, but... I don't care. I've wasted enough of my life reading Heinlein.

Why is it a bad book? It thinks a lot of itself, yet it's fundamentally naff. It tries to combine so many ideas, yet so many of the ideas are just so bad. It's suffused by mock spirituality, with the most tedious of '60s free love combined with... whatever can be found in the kitchen sink. It grinds away at the brain until, finally, several hundred pages later, you're released, thinking "What was the point of that?".

#2: Gravity's Rainbow by Thomas Pynchon

Why am I a glutton for punishment? I read a review of Gravity's Rainbow in the Cambridge University student newspaper by some English student, and decided to read it. The review was positively effusive, but it was really praising the book's cleverness as a proxy for the cleverness of the reviewer in understanding it.

I'm sure it is a clever book. It clearly thinks it is. But it's just too much like hard work. It's some kind of tedious shaggy dog story that is much more interested in showing off than actually telling a story. Is it meandering, or is it just plain lost?

All of which might be forgivable if it wasn't hundreds and hundreds of pages long. All the better to meander with. As it is, I'll be very happy to never read another "Slothrop sez".

#1: The Illuminatus Trilogy by Robert Shea and Robert Anton Wilson

Gravity's Rainbow was a clear product of the '70s, and Stranger in a Strange Land has its hippie free-love elements. There's some kind of strand here that gets combined in The Illuminatus Trilogy.

There's a paragraph in Fear and Loathing in Las Vegas about the wave of hippie idealism breaking and rolling back, and... that's the seventies in so much culture. The leftovers of the '60s gone soured and seedy.

I've never been a hippie person. When choosing between those who fought actual Nazis and built rockets to the moon, and people who dropped out, I know which side my modernist, strait-laced self would fall on. It took me a long time to realise it was ok to not like hippies - growing up, the media did rather go on about how the '60s were the best of times, and it took me forever to understand that this was because the kids of the '60s were running the media.

'70s counterculture, as epitomised by The Illuminatus Trilogy, though, that's something else. Crappy conspiracy theories that look like a trial run for Trumpian reality avoidance. Awful, awful writing. Nonsense plot. More crappy, bad, seedy sex than you can shake a bargepole at. A thousand and one other bad qualities, and behind it all... an astonishing vacuity. It would have had the redeeming quality of being short, but it's a trilogy in an omnibus book and for some reason I read the lot in search of something when I should have given it up as a bad job.

Why on earth would I read such a thing? It's a long and complicated story! As a computer-liking kid in a small town in Gloucestershire in the early '90s, I felt somewhat socially lost. In one rare bookshop trip, I found and bought The New Hacker's Dictionary, which was like a window into the history of computer geekery culture - there was a tribe out there I could belong to!

TNHD was itself derived from "The Jargon File" which had been floating around on the proto-internet (the culture described in it actually being a mish-mash of several subgroups, but that's not terribly important). The bookification process involved Eric S. Raymond (ESR), who also added an appendix on "Hacker Culture", which overlooked the variety of people making up "hackerdom" in exchange for really just scribbling down his own politics. Naively, I bought in.

Oh, and his politics just happen to be quite crappy, with an interest in awful '70s counter-culture. So, when The Illuminatus Trilogy was mentioned as a way of understanding the mindset, I scooped it up. It's crap, I suffered cognitive dissonance, and came to realise his politics sucked. So, I guess it did some good. The real lesson I eventually learnt was that you want to be extremely careful who your role models are. Since then, it's become increasingly obvious that ESR is not a great person, and widespread internet access has made computer geekery into a very broad church - effectively TNHD has been made obsolete as the communities have become directly accessible.

Later, I found out that a friend of mine saw me reading it, took a skim when I put it down, and thought I was some kind of weirdo for reading that crap. To read it and enjoy it, that's a bit weirdo. To read it, not enjoy it, and continue reading anyway... that's my idiocy.

Worst book ever. Seriously.

Posted 2021-04-02.

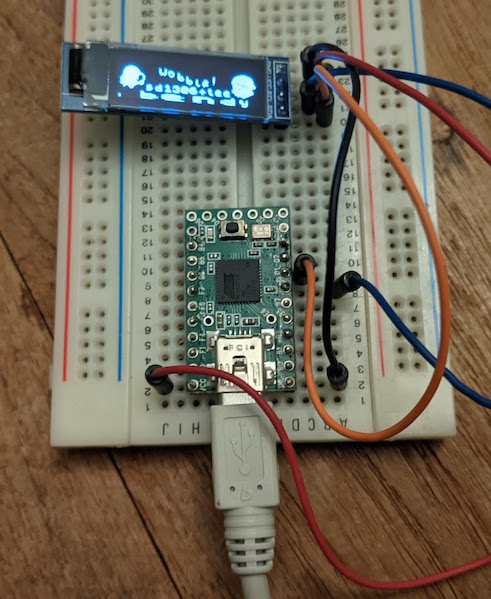

Microcontrollers and OLEDs

The launch of the Raspberry Pi Pico has reminded me to play about with microcontrollers. I have completed a first-year-undergrad-like project: display something on a little 128x32 OLED display, using a Teensy 2.0! My biggest fear was installing the toolchain, but it turned out to be easy. Maybe things have improved in the last few years. Microcontrollers being big enough and powerful enough to happily run C rather than really preferring assembly is also definitely pleasant.

Of course, a photo is necessary:

Source is up on GitHub, and there's a video here.

Bit-banging I2C was relatively painless (having previously done SPI for SD card access on my Dirac Z80-based machine), and I got to play with very simple graphical effects. So, fun.
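For anyone who hasn't met bit-banging, the idea is just to wiggle the clock (SCL) and data (SDA) lines by hand in software. What follows is a minimal sketch in Rust, not the actual Teensy code (which is C). The I2cPins trait and the FakeBus test device are hypothetical names made up for illustration, and the timing delays and open-drain behaviour that real hardware needs are left out.

```rust
/// Hypothetical pin abstraction; on real hardware these would poke GPIO registers.
trait I2cPins {
    fn set_scl(&mut self, high: bool);
    fn set_sda(&mut self, high: bool);
    fn read_sda(&mut self) -> bool;
}

/// Start condition: SDA falls while SCL is high.
fn start<P: I2cPins>(pins: &mut P) {
    pins.set_sda(true);
    pins.set_scl(true);
    pins.set_sda(false);
    pins.set_scl(false);
}

/// Stop condition: SDA rises while SCL is high.
fn stop<P: I2cPins>(pins: &mut P) {
    pins.set_sda(false);
    pins.set_scl(true);
    pins.set_sda(true);
}

/// Clock out one byte, most significant bit first.
/// Returns true if the device acknowledged by pulling SDA low.
fn write_byte<P: I2cPins>(pins: &mut P, byte: u8) -> bool {
    for bit in (0..8).rev() {
        pins.set_sda((byte & (1 << bit)) != 0);
        pins.set_scl(true); // the device samples SDA while SCL is high
        pins.set_scl(false);
    }
    pins.set_sda(true); // release SDA so the device can drive the ACK bit
    pins.set_scl(true);
    let acked = !pins.read_sda();
    pins.set_scl(false);
    acked
}

/// Simulated device (an assumption for testing): records the SDA level on
/// every rising edge of SCL and always acknowledges.
#[derive(Default)]
struct FakeBus {
    scl: bool,
    sda: bool,
    bits: Vec<bool>,
}

impl I2cPins for FakeBus {
    fn set_scl(&mut self, high: bool) {
        if high && !self.scl {
            self.bits.push(self.sda);
        }
        self.scl = high;
    }
    fn set_sda(&mut self, high: bool) {
        self.sda = high;
    }
    fn read_sda(&mut self) -> bool {
        false // pretend the device is holding SDA low (ACK)
    }
}
```

Calling start, then write_byte, then stop on a FakeBus leaves the clocked-out bits in its bits field, which is a handy way to check the bit order before going anywhere near a scope.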

Of course, the whole thing is a yak-shaving exercise. I want to do this as a warm-up before using the Teensy 2.0 (5v compatible, yay!) to drive a 30-pin SIMM, as an early experiment towards building a 68K Mac-compatible, which has been on my project bucket list for ages. I'm starting to realise how these MCUs can make a nice support device for these retrocomputing experiments.

Posted 2021-02-13.

Doing stuff in 2020

I think it says a lot that my last update was January. 2020 has been quite the year, and I don't think I've got an awful lot to add on top of the general conversation. What I want to do, though, is remind myself that it was still a year where I managed to do stuff - managed to build things and learn and achieve.

So much has been going on at home, between the rounds of children learning from home and just trying to keep sane. And all the work-from-home, of course. Getting a good videoconference set-up was like a project in itself. My son moved school this year (to one in central London, even! It seemed like a good idea at the time.), so I had my share of masked school runs. However, I don't really blog family stuff, so let's skip over that.

I accidentally got an MBA. I like MOOCs, and as a techie it's very easy to have a career where you can vaguely see the business in the distance, but never really understand it, so learning more about it all seemed extremely interesting. I got some very polite smart.ly spam on LinkedIn, thought "Why not?" and joined up for this non-accredited online-only Executive MBA course - the official degree status was not important to me, I was just interested in Learning Stuff. A year later, they're now quantic.mba, they received full accreditation, and I have an Executive MBA with Honors. Ooops! :)

I got promoted at work. This one was very much not accidental, being the culmination of years bashing at the system, but I got there. I'm strongly of the opinion that the easiest way to get to a level at Google is to be hired at that level. It was a bright point in a very grinding year of work.

I dealt with my father's death. Not exactly a deliberate project, but something hard that we got through nonetheless. The timing was fortuitous in otherwise bleak circumstances - lockdown lifting meant we could visit him in time and hold a small funeral in person. My sibling was a star in the hospital visits, my wife has done amazing admin work, and family friends really helped us out. Everyone was wonderful in an otherwise difficult time.

I learnt to draw. Ever the book-learning type, I bought a couple of introductory books and started practising. I'm by no means any good, but it's great to pick up something that I feel I have no natural aptitude for, and just... learn a new thing, be bad at it, and that's ok, and just gradually get better. It also made a nice distraction from dealing with my father.

I became a licensed radio amateur (M7FTL). Software-defined radio and the like sounded fun, and a work colleague mentioned the exams had gone online-only, so it seemed a great opportunity to find distraction from the grimness of 2020 in study. Who doesn't love an exam?! I completed my Foundation licence and, well, I've not done much with it, but it was a fair amount of fun, and I learnt a bunch.

Last year, I learnt to sail. I was really looking forward to getting better this year, but in the end only got a quick, single session in. It does make me feel a bit better that I have managed to find other things to learn and improve at instead. Screw 2020, and here's to our achievements despite it.

Posted 2020-12-27.

A Fire Upon The Deep (again)

I decided to re-read A Fire Upon The Deep. It looks like I last read it... 15 years ago?! Really?! Well, there you go. It's been one of my favourites, largely because it so effectively steals ideas from computer science to make a science fiction novel that's ostensibly space opera.

It's been really interesting to come back to it. I suppose it's fun to go look at the themes involved:

- The structure of the galaxy follows the pattern of complexity classes, where different things are possible. The galaxy is arranged like a Venn diagram! A Power offers an "oracle", presumably capable of answering questions not answerable in that region of space, just like the complexity theory equivalent.

- The whole book is about computer security. The Blight is sentient malware, and decades before the Internet of Things was a thing, one of its first actions is to subvert a tiny sensor to own a ship most destructively. And there's a most excellent back door that I don't want to spoil.

- The Known Net is Usenet: It's decentralised and anarchic and centred around interest groups and mostly full of rubbish. Not much more to add!

- The Tines are networked multiprocessors. While the normal scalability limit of a fully connected architecture is around 5 or 6 Tines, there are lines (vector processors) and Flenser experiments with other topologies such as hub-and-spoke and hypercube.

As well as the compsci elements, there's the structure of the story: Pham Nuwen is a Christ figure. A god (well, transcended Power) made flesh, sacrificed to save people from their mistakes. Also resurrected, although in this case at the beginning of the story.

Not everything has stood the test of time for me. Going beyond the fun compsci space opera, I felt the characters were somewhat weak and the battle at the end was pretty muddled. These complaints are still missing the point, though, when the ideas are so fun. I'll probably re-read A Deepness in the Sky in a year or two. :)

Posted 2020-01-03.

Galette: A GAL assembler

Yak-shaving at its finest!

I want to build a 68K-based machine. To avoid needing too many TTL chips, I wanted to use GALs. I played with GALs, and eventually got them working, but not before looking at the source to galasm and deciding that it was a bit old and crufty and I didn't really trust that I understood its corner cases.

So, I wrote Galette, a largely-galasm-compatible GAL assembler, written in Rust and hopefully a bit more maintainable. Yak-shave completed.

Posted 2019-04-22.

Linux 1.0 kernel source reading - 22: TCP, and we're done

So, my reading of the kernel code ends not with a bang but with a whimper. tcp.c is the last source file to read, and is a 3.7kloc mess. Most of the code looks plausible, but would take far too much time to understand if it's doing the right things. tcp_ack is pretty horrible. tcp_rcv is too. The structure makes responsibilities unclear (not helped by C's tendency to mix the lowest-level details with the highest level abstractions). I guess one could charitably say it's compact, but it's in the "so complex there are no obvious bugs" category. To be frank, I just skimmed it.

There are various things I've learnt reading this kernel. One is that a clear and consistent programming model is necessary if you want a maintainable kernel (e.g. around how to avoid race conditions, how to handle operation completions, etc.). Another is that C is a really bad language for this kind of thing, and RAII-like tooling is necessary to stop resource leaks being risky. Clear abstractions are great, good comments are important, and life's too short to read hard-to-read code.
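To make the RAII point concrete, here is a minimal sketch in Rust rather than anything from the kernel. Buffer and process are hypothetical names, and the shared counter only exists so the effect can be observed. The release lives in Drop, so an early return cannot forget it.

```rust
use std::cell::Cell;
use std::rc::Rc;

/// Hypothetical stand-in for a kernel resource such as a buffer or a lock.
/// The shared counter tracks how many are currently held.
struct Buffer {
    live: Rc<Cell<usize>>,
}

impl Buffer {
    fn acquire(live: &Rc<Cell<usize>>) -> Buffer {
        live.set(live.get() + 1);
        Buffer { live: Rc::clone(live) }
    }
}

impl Drop for Buffer {
    /// Runs automatically whenever a Buffer goes out of scope.
    fn drop(&mut self) {
        self.live.set(self.live.get() - 1);
    }
}

/// Every exit path, including the early error return, releases the buffer.
fn process(live: &Rc<Cell<usize>>, fail_early: bool) -> Result<(), &'static str> {
    let _buf = Buffer::acquire(live);
    if fail_early {
        return Err("bailed out early");
    }
    Ok(())
}
```

In the C equivalent, each return statement needs its own matching release call (or a goto to a cleanup label), which is exactly the sort of thing that gets missed in a long function.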

Posted 2019-03-23.

Linux 1.0 kernel source reading - 21: All but TCP

After another hiatus, I've almost finished reading through the Linux 1.0 kernel. Building on last time, I've now read everything but tcp.[ch], which is probably a good time to take a break as tcp.c is pretty near 4000 lines on its own, and the longest source file in the tree.

- Network-related headers from include/linux. There appear to be a bunch of structs that are borrowed from elsewhere, and never referred to. It's clear the stack is under development, in a slightly magpie-like way.

- arp.[ch] Pleasantly straightforward. It did take me a while to realise "arp_q" is not a queue of ARP requests, but a queue of packets that can't be sent until the ARP responses are in! I read most of the way through the file before realising that the handling of cache clearing is done by timers when sockets get closed, so that logic is actually distributed elsewhere.

- ip.[ch] So much to nit-pick! Lots of special values all over the place (usually 0) to mean "not filled in" or "not applicable". I'm loving languages with explicit Option types, but at least give a named constant for this kind of thing! "ip_send" is poorly named, filling in the MAC header but not sending. "ip_find" has an unused "qplast" variable - it was clearly copied from some list-mutating code. "ip_free"'s call to del_timer with the interrupts on looks like a great race. The file has a horrific mix of tabs and spaces. The IP fragment-collecting code is very willing to run the kernel out of memory, and is not programmed defensively. Generally, though, it's easy-to-follow code. It's weird to see that there's retransmit-handling code at the IP layer, despite this being a TCP feature.

- raw.[ch] And then we're back into the world of using complicated, overloaded structs. Due to the way that raw IP sockets work, each socket opened dynamically inserts a new protocol into the IP stack, in order to intercept all the packets that arrive. Pretty heavy!

- icmp.[ch] Pleasantly readable and straightforward!

- udp.[ch] Slightly less readable than the ICMP code, but still pretty unexciting.
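Two of the points above fit in one small sketch: parking packets until the ARP reply turns up, and using an Option rather than a magic zero for "not resolved yet". This is Rust, not the kernel's C, and ArpTable with its methods are names I've made up for illustration. It ignores timeouts, retries and cache expiry.

```rust
use std::collections::{HashMap, VecDeque};

type Ipv4 = [u8; 4];
type Mac = [u8; 6];

/// Hypothetical ARP table: resolved addresses, plus packets parked per
/// destination until the matching reply arrives.
#[derive(Default)]
struct ArpTable {
    cache: HashMap<Ipv4, Mac>,
    pending: HashMap<Ipv4, VecDeque<Vec<u8>>>,
}

impl ArpTable {
    /// None means "not resolved yet", with no all-zero sentinel address.
    fn lookup(&self, ip: Ipv4) -> Option<Mac> {
        self.cache.get(&ip).copied()
    }

    /// Hands the packet back with its MAC if it can go out now,
    /// otherwise parks it and returns None.
    fn send_or_queue(&mut self, ip: Ipv4, packet: Vec<u8>) -> Option<(Mac, Vec<u8>)> {
        match self.lookup(ip) {
            Some(mac) => Some((mac, packet)),
            None => {
                self.pending.entry(ip).or_default().push_back(packet);
                None
            }
        }
    }

    /// Records a reply and releases any parked packets in arrival order.
    fn on_reply(&mut self, ip: Ipv4, mac: Mac) -> Vec<Vec<u8>> {
        self.cache.insert(ip, mac);
        match self.pending.remove(&ip) {
            Some(queue) => queue.into_iter().collect(),
            None => Vec::new(),
        }
    }
}
```

The compiler forces every caller of lookup to deal with the None case, which is the bit a bare 0 can't do.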

Posted 2019-03-03.

This week's hobby: Crochet

We took a short break to Norwich (surprisingly nice), so obviously it's time for a new hobby. A bit of background: I've already tried my hand at knitting, and there was a rather nice crocheted/knitted Groot at work. Then, at some point, it disappeared. I thought it should be replaced.

I found instructions for the amigurumi Groot model here, and followed them. It turns out YouTube is actually really useful for learning a new manual skill. This is the result:

I'm actually rather proud of it!

Posted 2019-02-23.

Learning Rust and the Advent of Code

I am finally learning Rust!

I should have designed Rust. It's been clear for far too long that languages like C and even C++ are far too dangerous, unnecessarily allowing many classes of bug to exist, somehow in the name of power and being close to the metal. So many things that could be fixed with decent use of a type system and static checks. On the other hand, great languages like Haskell require GC, which makes them an odd choice for systems programming. Go says "No, you can write your systems programs in a GC'd language, and it'll be ok", but there are still applications where this feels wrong, and Go makes some really suspect decisions in the name of simplicity (*). There was an obvious gap in the market.

(*) The trade-off being suspiciously similar to Not Invented Here syndrome, where "here" is Bell Labs in the late '70s, possibly early '80s if you're lucky. Hmmm.

At the same time, this is kinda my area of expertise. My PhD was on statically-allocated functional languages, so it was right up my street. Seeing the problem, and knowing the area, I could have tried something in this space. I didn't.

And you know what? I'm glad I didn't. The result is really good, and I don't think I'd have produced something as nice as Rust. It's pleasantly pragmatic, the lifetime inference rules are simple (certainly compared to the thing I saw in research), and I got the language I wanted without a decade of hard work!

The documentation is pretty good, so I'm learning the language from the site, although the language still appears to be in flux even now. I first planned to learn it several years ago, but never found the time. Given how much it seems to have changed since, I don't regret the delay too much! I'm not entirely sure the docs are up-to-date.

I don't know whether it's me getting more experience in programming, or just the nature of Rust, but I'm not feeling confident just from reading the docs - I want to understand the idiomatic style of the language and read some good Rust code. I've not done that yet, but I have at least started writing code, rather than just reading the manual in the abstract.

For this, I've done the Advent of Code, admittedly extremely late (which is quite good, as it stops me trying to be competitive. :). Running through a couple of dozen simple algorithmic coding challenges is a great way to learn the feel of a language, and understand the subtleties that docs don't get across. And Rust does have subtleties. It doesn't have the pointless accumulated crufty corner cases that C++ has, but the features combine in ways that take a little while to get used to. So, that's what I've been doing.

My code is now up on https://github.com/simon-frankau/advent_of_code_2018 and I plan to carry on with my learning. I think it's my clear favourite systems language now. Being able to code without the fear of segmentation-faulting stupid mistakes from C and C++, but with the full feel of an effective systems language is great.

Posted 2019-02-10.