Simon Frankau's blog

Ugh. I've always hated the word 'blog'. In any case, this is a chronologically ordered selection of my ramblings.

Stephen's Sausage Roll

I'd heard good things about Stephen's Sausage Roll and on Black Friday it was ridiculously discounted. So I bought it. And it ate my time.

As people have said, it's a tricky puzzle game. I never got stuck for too long, but there were certainly puzzles where I had to quit the game, go away and think for a while. So, I guess that counts as a nice balance.

It weirdly feels like a linear interpolation of The Witness and Sokoban. It's got that pushing-stuff element of Sokoban, but the explore-the-world-working-out-how-puzzles-work-for-yourself-and-then-uncovering-the-story-of-the-land aspect of The Witness.

Making it a game about sausages just makes it weird, even as the story gets darker, and I think this ties up with the lo-fi aesthetics. I found the visuals just offensively bad - they hurt my eyes while playing the game. It's certainly A Look. I kind of wonder what it would look like, even if just the textures were upgraded.

However, it's clearly about the puzzles, and I think the visuals cement that message. Moving from land to land and working out the different ways the sausage and fork work, discovering ideas (or, more realistically, being subtly taught them by the game) and using them is fantastic. Even if it sounds ludicrous.

Posted 2018-12-15.

The NTK 31337/Elite t-shirt, again

Back by popular demand! I never actually posted the image for the NTK Elite t-shirt, but as I've now had my second request ever for a copy of it, I've put it up online. If you're lucky it'll not show up on your browser - it's white on a transparent background. I'm sure you can work it out. :)

Posted 2018-11-03.

Linux 1.0 kernel source reading - 20: Networking edges

On to the next bit of the network stack. My plan had been to cover the stack from the bottom up, but in the end this turned into an "edges in" approach, covering both the device-interface layer and the user API.

- dev.[ch] General send/receive glue (interrupts, etc.), plus ioctl handling. ioctl code is always dull switch statements.

- packet.c Raw send and receive of packets. As opposed to "raw", which deals with raw IP packets. This file uses "datagram", which uses "sock", so let's take a look at that...

- sock.h "sock" is one huge and complicated structure. proto is big but not quite as bad.

- sock.c Yep, that's a big pile of user-land-interfacing code. 2kloc between the .h and .c. "destroy_sock" is a big function that's complicated, and it's not clear to me when it's really done. A bit more documentation, probably through invariants, would help, I reckon. Then there's {get,set}sockopt. As if ioctl and fcntl aren't enough?

- datagram.c Provides useful reusable functionality across protocols.

So, having both sides of the interface defined, I'm at a point where we can do a little bit of filling the middle and reading up the rest of the stack.

Posted 2018-10-21.

Infocom's Enchanter

Work's performance appraisals continue, so more distraction was needed, and I decided to go for an Infocom game, Enchanter. It's magic-based, and pretty fun. I got through it all on a single hint.

I particularly liked the in-game hint system. When you sleep, your dreams give you hints as to what you need to do. Which is helpful, since some of the things are rather unobvious - there's a bunch of solving problems you didn't even know you had.

I got particularly stuck when I found a thing that was clearly supposed to do something, but no idea what it was needed for. This was made trickier by the fact there were some red herrings.

What I forgot is that '80s games, especially text adventures, were sadistic. Not only did the game reject several solutions that I thought were quite reasonable, it also allowed me to solve one problem, apparently successfully, in a way that left the game unfinishable. And once the puzzle was solved, the dream hints no longer covered it, leaving me without hints at the actual correct solution.

Once I'd read online hints and gone back to the start to replay it successfully, the in-game sleep hint was really obvious. Most frustrating.

Between that and the occasional "guess the verb" there was plenty of frustration, but a fair amount of fun and exploration too. A very '80s experience, not quite as good as Trinity or Bureaucracy. Followed by Sorcerer and Spellbreaker.

Posted 2018-10-06.

Linux 1.0 kernel source reading - 19: Networking periphery

On to networking! This is a fairly chunky area in itself, so I thought I'd start with some peripheral areas: The non-IP networking stack, and the infrastructure parts of the inet stack. As usual, I started with the Makefiles and READMEs to get an overview. Not much of interest.

From there, I looked at the source in the "net" directory itself. ddi.c and Space.c are a simple module mechanism that has hopeful comments that it'll be picked up for everything else in the system. socket.c handles the socket-related system calls. It's mostly standardised wrappers around the fake-virtual-calls-in-C for the specific socket types, called from a big switch statement, plus some helper functions. This is pretty useful to help me get my bearings. Now's a convenient time to read a few related headers.
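The "fake virtual calls from a big switch" shape described above can be sketched roughly like this. It's a minimal illustration in Python rather than C, and every name in it (the operation names, the call numbers, `socketcall`) is invented for the sketch rather than taken from the kernel source:

```python
from typing import Callable, Dict

# A table of operations per socket family, standing in for a C struct of
# function pointers. All names here are hypothetical.
Ops = Dict[str, Callable[[tuple], str]]


def make_ops(family: str) -> Ops:
    """Build the operation table for one socket family."""
    return {
        "create": lambda args: f"{family}: create{args}",
        "bind": lambda args: f"{family}: bind{args}",
        "connect": lambda args: f"{family}: connect{args}",
    }


FAMILIES: Dict[str, Ops] = {"unix": make_ops("unix"), "inet": make_ops("inet")}

# Made-up call numbers for the single entry point.
SYS_CREATE, SYS_BIND, SYS_CONNECT = 1, 2, 3


def socketcall(call: int, family: str, *args) -> str:
    """One entry point: switch on the call number, then go through the table."""
    ops = FAMILIES[family]
    if call == SYS_CREATE:
        return ops["create"](args)
    elif call == SYS_BIND:
        return ops["bind"](args)
    elif call == SYS_CONNECT:
        return ops["connect"](args)
    raise ValueError(f"unknown socket call {call}")
```

The wrapper knows nothing about the family beyond which table to pick, which is the whole point of the indirection.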

Then, net/unix. net/unix/sock.c is annoyingly fiddly, and makes me think that when people say "In Unix, everything's a file.", it's in an Animal Farm world where some things are more like files than others. Maybe Plan 9 gets this right. I should probably take a look sometime.

Having read net/unix, it's time to start on net/inet. I'll skip the headers and just concentrate on the C source files I read:

- proc.c is the usual "convert stats to some text" proc fs entries.

- loopback.c clearly lives higher up the stack than I intended to poke around at this point, but is also a good introduction to how a device should behave - which I guess is a bit out-of-order, since I've already read the ethernet device driver code.

- eth.c isn't an ethernet device adapter layer. It's actually a few utilities used by the ethernet drivers to create the low-level ethernet devices.

- timer.c is messy. I was hoping it would be generic networking timer-handling code. As it is, it's actually the implementation of various timer-triggered chunks of code, stored far away from the code that starts the wait, or is waiting on the timer handler to do its job. I am not a fan of this structure, since it doesn't group the code together in a maintainable way.

- protocol.c appears to be the code that sits between IP and TCP/UDP/ICMP, performing the multiplexing. I originally intended to read the stack bottom-to-top, so this is slightly out-of-order, but it gives me a better idea how things are tied together in the middle. Divide and conquer!

- route.c manages a simple routing table. Way before iptables and ipchains, there was a simple list of what should be sent to what interface.

- Finally, skbuff.c handles the skbuffs, or socket buffers. skbuffs normally live in a linked list, so this is just a set of utilities to create and destroy the skbuffs, lock and unlock them, and handle their management within the list, in all cases caring about thread-safety. Pretty straightforward and quite pleasant. I was slightly surprised that it doesn't do anything to do with manipulating the data in the buffers, arranging buffer headers, etc., so it stays nice and simple.
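The "simple list of what should be sent to what interface" from the route.c entry above can be sketched as a first-match scan. This is a minimal Python illustration, not the kernel's code: the `Route` type, the `lookup` function, the example networks and the interface names are all assumptions, as is the idea that the list is kept with more specific routes first.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    network: ipaddress.IPv4Network  # destination network this entry covers
    device: str                     # interface to send matching packets to


def lookup(table: List[Route], dest: str) -> Optional[str]:
    """Return the device of the first route whose network contains dest."""
    addr = ipaddress.IPv4Address(dest)
    for route in table:
        if addr in route.network:
            return route.device
    return None  # no route to host


# Hypothetical table, ordered from most to least specific.
TABLE = [
    Route(ipaddress.IPv4Network("127.0.0.0/8"), "lo"),
    Route(ipaddress.IPv4Network("192.168.1.0/24"), "eth0"),
    Route(ipaddress.IPv4Network("0.0.0.0/0"), "sl0"),  # default route
]
```

With a table like this, `lookup(TABLE, "192.168.1.7")` picks the ethernet entry, and anything that matches nothing more specific falls through to the default route.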

And that's laid the groundwork. Now, I plan to work from the bottom up, starting with dev.c, and eventually making my way to sock.c. Let's see how long that takes...

Posted 2018-09-23.

Magnetic Scrolls' Myth

Work's got busy and stressful - it's performance appraisal and quarterly planning time, simultaneously, and so I've been looking for a light distraction. Myth is it. A text adventure originally not sold but given away as a gift with a subscription service, it's short and sweet.

It's a simple, solid text adventure. Nothing like the mess of Corruption, and much more clearly structured than, say, Jinxter or Guild of Thieves, it was pleasant and quick to complete - I think it took me three evenings of not particularly strenuous effort. I couldn't really ask for more!

Posted 2018-09-22.

Linux 1.0 kernel source reading - 18: Character devices, part 2

Now, the terminals end of the character devices. I've already read serial.c, which means I've read a terminal driver already without being properly aware. pty.c is the first one I read deliberately. Yes, it looks like a couple of connected terminals.

Then, onto keyboard code. defkeymap.c, defkeymap.map and diacr.h look like dull scan code conversion tables, so meh. kd.h is complicated - it's not just keyboard stuff but also includes video modes etc. kbd_kern.h covers LEDs, locks and modes for the keyboard. keyboard.c handles the keyboard itself - mostly how to handle incoming key presses (unsurprisingly), with a horrible mix of table-driven code, switch statements and ifs. Messy but not mysterious. It even uses an octal constant.

vt.h is short and obscure. vt_kern.h is short and obscure. vt.c is the ioctls for the terminal.

Then, onto console.c, a big and interesting file to implement the console on a text mode display. It starts off with a giant structure and then macros to make it convenient to access those fields. Scrolling functionality is a pile of assembly, which I'm sure is efficient, but I'm not going to try to decode inline gcc assembly.

This first part of the file is actually pleasantly readable video buffer wrangling. Then there's a 300 line function to interpret the stream of characters written to the console, then other console functionality before finally doing some font-setting stuff. In the end, much more straight-forward than I expected for a 2kloc file.

tty_ioctl.c is what I'm used to for this kind of file by now. Spoiler: It's a great long switch statement.

Finally, tty_io.c. Ugh, so tedious. I'm impressed Linus wrote all this as a student. Writing schedulers and VM and stuff is fun, but this is deeply boring and very practical, and... it's done. It's needed to make a basic usable Unix clone, and he did it. I really don't want to read it, and I'm mostly skimming.

There are some comments about "be really careful modifying this code, don't modify unless you understand it, as it's full of race conditions". Hint: Don't do this. If there are risks of races, explain them explicitly or even better remove the risk. Don't write an obscure comment, feel proud about how clever you and your code are, and wander off.

One function in particular I found interesting: tty_write_data covers the same issue I saw in the SCSI stack with "How do I call callbacks if I don't want to overflow the stack?". In this case, it's implemented with a bottom-half handler, which I guess is following the same pattern of "queue it up until the current work unit is complete". In other words, we swap the usual LIFO execution model for a FIFO one, going from stack to queue.
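The stack-versus-queue point can be made concrete with a toy comparison. This is a minimal Python sketch of the general pattern only, not of tty_write_data or the kernel's bottom-half mechanism; `run_nested`, `run_queued` and the logging are invented for the illustration.

```python
from collections import deque


def run_nested(n, log):
    """Each completion calls the next directly, so nesting depth grows with n."""
    def complete(i, depth):
        log.append((i, depth))
        if i + 1 < n:
            complete(i + 1, depth + 1)  # callback invoked from inside a callback

    complete(0, 1)


def run_queued(n, log):
    """Each completion queues the next; a loop runs them one at a time."""
    pending = deque()

    def complete(i, depth):
        log.append((i, depth))
        if i + 1 < n:
            pending.append(i + 1)  # defer instead of calling

    pending.append(0)
    while pending:
        # Every unit of work starts from the same shallow depth.
        complete(pending.popleft(), 1)
```

Both versions do the same work in the same order, but the nested one records depths 1, 2, 3, ... while the queued one stays at depth 1 throughout.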

Anyway, I declare character devices both boring and done. 17 thousand lines of network stack to go and I'm done with the Linux kernel!

Posted 2018-09-09.

Linux 1.0 kernel source reading - 17: Character devices, part 1

Having finished off SCSI drivers, I've turned my attention to character devices. There's a lot of console stuff here, but I thought I'd start with the not-terminal devices first, and work my way up to that level of tediousness.

Starting with the Makefile, I chose to read through the short and optionally-compiled mouse driver files - atixlmouse, busmouse, msbusmouse, psaux and mouse. There's a standard pattern to the bus mice, they're easy to read and it's a good start. By the time we get to psaux, it's not clear to me what's going on with psaux vs. 82c710, but I don't really care.

Then I read lp, mem, serial and tpqic02. mem is in some ways the most fun. It supports not just /dev/mem, but /dev/null, /dev/zero and a couple of others. It also contains the main chr_dev_init function, presumably because it's really not optional. lp, serial and tpqic02 are all longish (lp shorter than the others), full of device-specific details, well-commented and tedious.

It's really devices like this that make me want microkernels or something. Why should the kernel have to care about such tedious details of specific devices? Apparently you need to have full access to all memory and state of the system to decide whether or not the tape drive should be rewound.

While much of the resource management of the kernel makes me wish for explicit RAII, the low-level drivers, with their callbacks and interrupts, top and bottom halves etc. make me wish for some kind of explicit coroutine support. And the layered structure and asynchronous completion makes me think of microkernels and monitors/servers for the drivers. There must be a neater way of doing all this.

In any case, I've read the easy stuff, and now it's time for various terminal-ish things.

Posted 2018-08-31.

Linux 1.0 kernel source reading - 16: Low-level SCSI

I enjoyed reading the low-level SCSI driver code much more than I expected. After reading the sound and network card drivers, I imagined the low-level drivers for SCSI would be quite boring, and potentially rather uniform and repetitive. They turned out to be rather interesting.

As I mentioned in the previous post, I'd read a bit around SCSI as I didn't know much about it, and this background knowledge came in rather useful for the low-level drivers.

I started with scsi_debug, which is a fake low-level SCSI driver (and hence a good introduction!). In various cases it calls back into the "done" callback immediately, which can cause internal_cmnd to then call back recursively into the scsi_debug code. Kernels aren't supposed to do recursion, so I can see why scsi.c cares about stack overflow. This recursion is a bad thing. Why aren't they handling it? In other cases, they hook the callbacks off timers, creating a more realistic set of timings (and probably helping to avoid stack overflow).

Having got the basic idea with scsi_debug, I moved onto the smallest self-contained real drivers. I say "self-contained" because some of the 5380-based drivers are small, but rely on the rather long NCR5380 source file. So, next up, aha1740 and wd7000. These are both simple drivers that rely on a smart card. You just fill in a memory-mapped struct, queue up the mailbox request, let the card do its thing and DMA, and then get an interrupt when it's done. Lovely. A great introduction to the basic ideas of SCSI as you can elide so many details! All the hard work is carried out by the hardware.

So, that was nice. Let's have a go at the NCR 5380-based drivers. g_NCR5380, t128 and pas16 all use NCR5380. Lots of funky (maybe icky) macros allow the code to be reused, with various different modes, including stuff like pseudo-DMA. There's a lot more low-level bit-banging and implementing the SCSI protocols by hand - much more code, much more to learn from, and much more CPU overhead.

NCR5380 is nicely commented and has to handle all the conditionally-compiled cases, but it's still loooong. There does seem to be an inverse relationship between how long the driver is and how nice the hardware is. Short drivers and clever cards, please!

However, it's nice to see the NCR5380 "run_main" takes recursion seriously - it is implemented as a coroutine that refuses to run itself if already running, and checks for more work to do before returning, thus effectively implementing a queue for the work rather than a stack. Having not dealt much with interrupt-driven kernel workloads before (beyond "interrupt arrives and wakes up process"), it's nice to see this pattern to handle this shape of problem.
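The "refuse to run if already running, and check for more work before returning" pattern looks roughly like the following. It's a minimal single-threaded Python sketch under my own assumptions; the `Driver` class and its fields are hypothetical, and a real driver would also need to make the flag test safe against interrupts.

```python
class Driver:
    """Toy work loop with a re-entrancy guard (all names are hypothetical)."""

    def __init__(self):
        self.running = False   # True while the main loop is draining work
        self.work = []         # pending work items
        self.done = []         # items processed, in order
        self.depth = 0         # current nesting of _process calls
        self.max_depth = 0     # deepest nesting observed

    def submit(self, item):
        self.work.append(item)
        self.run_main()

    def run_main(self):
        if self.running:
            return  # already draining: the active loop will pick this up
        self.running = True
        try:
            while self.work:  # re-check for new work before giving up
                self._process(self.work.pop(0))
        finally:
            self.running = False

    def _process(self, item):
        self.depth += 1
        self.max_depth = max(self.max_depth, self.depth)
        self.done.append(item)
        if item > 0:
            self.submit(item - 1)  # completion generates more work
        self.depth -= 1
```

Submitting an item that spawns follow-on work from inside its own completion never nests `_process` calls: the inner `run_main` returns at once and the outer loop picks the new item up.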

Then I'm onto the remaining drivers, that are longer (and hence generally based on dumber hardware), but not 5380-based. The ultrastor driver is for clever hardware. The length comes from highly-commented code and dealing with multiple card variants. It shows a few signs of being thoughtful in its design, including caring about re-entrancy.

The fdomain card is fairly dumb, so we have to twiddle most of the lines ourselves, but it does at least do DMA for the data. Bizarrely the earlier chip doesn't seem to tell you which direction the data's going, so there's 130 lines saying which direction data goes based on the command id.
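Working out the data direction from the command amounts to a lookup table keyed on the SCSI opcode. Here's a minimal Python sketch of the idea with a handful of standard opcodes; the `Direction` enum, the `data_direction` function and the choice to raise on unknown opcodes are my assumptions, not what the fdomain driver does.

```python
from enum import Enum


class Direction(Enum):
    NONE = 0  # no data phase
    IN = 1    # device to host
    OUT = 2   # host to device


# A few standard SCSI opcodes; the real driver covers many more.
DIRECTION = {
    0x00: Direction.NONE,  # TEST UNIT READY
    0x03: Direction.IN,    # REQUEST SENSE
    0x08: Direction.IN,    # READ(6)
    0x0A: Direction.OUT,   # WRITE(6)
    0x12: Direction.IN,    # INQUIRY
    0x15: Direction.OUT,   # MODE SELECT(6)
    0x1A: Direction.IN,    # MODE SENSE(6)
    0x28: Direction.IN,    # READ(10)
    0x2A: Direction.OUT,   # WRITE(10)
}


def data_direction(opcode: int) -> Direction:
    """Look up which way the data phase runs for a command opcode."""
    try:
        return DIRECTION[opcode]
    except KeyError:
        # Hypothetical policy for this sketch: refuse to guess.
        raise ValueError(f"unknown opcode 0x{opcode:02X}") from None
```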

The aha152x.c driver is another long driver (2.4kloc), for a dumb card. However, the lines get spent in weird ways - the code is quite vertically-oriented, with plenty of ifdefs, there's around 1000 lines of introduction, and then aha152x_intr is a 1000 line function. I don't believe a 1000 line function is ever good style. It's well commented, though. It then finishes off with about 250 lines of verbose debug-text-printing code.

The final driver is seagate. This is another driver that worries about stack overflows and works around it. Yay. However, it also has a 1000 line "internal_command" function. Boo. "Fast mode" bit-bangs the data out without any form of handshaking. This means that a) there's no DMA. Oh well. b) Surely fast machines will go beyond the synchronous SCSI speed limit? (Well, maybe the card will insert wait states. Given the cheapness of the card otherwise, I doubt it, though.) It's another vertically-oriented source file, but this "big long function" approach is still ridiculous. Finally, it's amusing that the code seems to have been written by a reader of the New Hacker's Dictionary. "bork" and "bletcherous" and all the rest.

And I'm done with SCSI! It was kinda fun, although pleasant that there's only a smallish number of drivers. One of the things I learnt through all this is that SCSI is not tricky, it's just pretentious. "Contingent allegiance condition", "request sense", "nexus", etc. Sounds complicated, but actually pretty simple. Why give it silly names? I don't know.

Posted 2018-08-27.

Linux 1.0 kernel source reading - 15: Low-level sound, high-level SCSI

After something of an extended hiatus, I've taken this post-operation recovery time opportunity to read a bit more Linux 1.0 kernel. 'cos why not? :)

I finished reading the sound driver stuff, and I must admit I mostly skimmed it as the low-level details of individual sound cards didn't seem terribly exciting to me. "gus_card.c" is the longest source file outside of "tcp.c" - 3.5k lines! There's a lot of faff in there that I didn't care about, with both low-level hardware mangling and having to care about sound stuff. In comparison, the Soundblaster MIDI code is a doddle. Anyway, the sound directory is done.

For a bit of a change, I thought I'd attack SCSI. SCSI had always been a bit of a mystery to me. When I built my first Linux-specific PC around 1997 or so, I got a rather fancy Adaptec 2940 PCI card and SCSI disk, since SCSI disks were deemed important for performance back in the day (along with plenty of RAM - 16MB at 30 quid a meg!). However, the details of it all were magic, and this is a chance to learn!

Indeed, Linux 1.0 here doesn't support PCI or the AHA2940, but I guess only having the simpler stuff is better for me as a reader. :) Lots of the supported cards are built around the NCR 5380, so for preparatory reading I read the Wikipedia pages about SCSI, and skimmed the NCR 5380 data sheet. Then I plunged into the source...

The source is split into layers, with the abstract drivers, the intermediate glue layers, and the low-level device-specific drivers. I decided to start at the top end and work my way down. The generic end is around 8.5kloc, the device-specific code is 14kloc.

- Makefile, constants.[ch] Usual Makefile structure, and the constants file contains a bunch of nice constants I recognise from reading up on SCSI docs.

- s[gtrd].[ch], s[rd]_ioctl.[ch] High-level drivers for SCSI generic devices, tapes, CDROMs and disks. They sit on top of the infrastructure provided by scsi.[ch], and convert between the world of Unix requests and the actual SCSI commands needed. It's somewhat annoying that "sg" is overloaded as "scatter-gather" as well as generic SCSI! The block devices (sr and sd) use the standard block device drivers, which somewhat unobviously hook in through the do_s[rd]_request functions.

- hosts.[ch], scsi.[ch] and scsi_ioctl.[ch] These provide the "middle layer". "hosts" deals with the adapters, and "scsi" the devices. An awful lot of the middle layer adapting seems to be handling errors and timeouts. As SCSI is a bus that can connect all kinds of weird peripherals and fail in all kinds of exciting ways, I guess this makes sense. The SCSI code has a lot more debugging (kprintf) code than other subsystems.

Once again, the code reminds me of how basic C makes it difficult to ensure invariants are met, and keep reasonable abstractions. For example, there are several places where the runnability of the current process is changed in different ways, directly, rather than using standardised sleep/wait mechanisms. And error handling... ugh. So easy to leak resources in little-used error paths.

I sometimes think "How could the code be structured better?". There are some core multi-hundred-line functions that could be broken up. Given some operations span over multiple functions through completion functions etc., I think there could be explicit state machines. Mostly though... yeah, I want invariants and abstractions. I want the code to be obviously correct, not mysterious. Doesn't seem too easy to me. :/
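By "explicit state machines" I mean something like the following, where the legal transitions for one request live in a single table instead of being spread across completion functions. This is a minimal Python sketch; the states, events and `step` function are invented for illustration and don't correspond to anything in the SCSI code.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    SENT = auto()
    DONE = auto()
    FAILED = auto()


# Every legal (state, event) pair in one place; anything else is a bug.
TRANSITIONS = {
    (State.IDLE, "submit"): State.SENT,
    (State.SENT, "complete"): State.DONE,
    (State.SENT, "timeout"): State.FAILED,
    (State.SENT, "error"): State.FAILED,
}


def step(state: State, event: str) -> State:
    """Advance the request, rejecting events that are illegal in this state."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(
            f"illegal event {event!r} in state {state.name}"
        ) from None
```

The invariant "a request can't complete before it's been submitted" then becomes something the table enforces, rather than something each code path has to remember.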

And that brings us to the end of the device-independent part. Next up, I'll be working through the device-specific drivers, but I imagine it'll be pretty dull, with just some tedious register mangling. We'll see, shan't we?

Posted 2018-08-12.

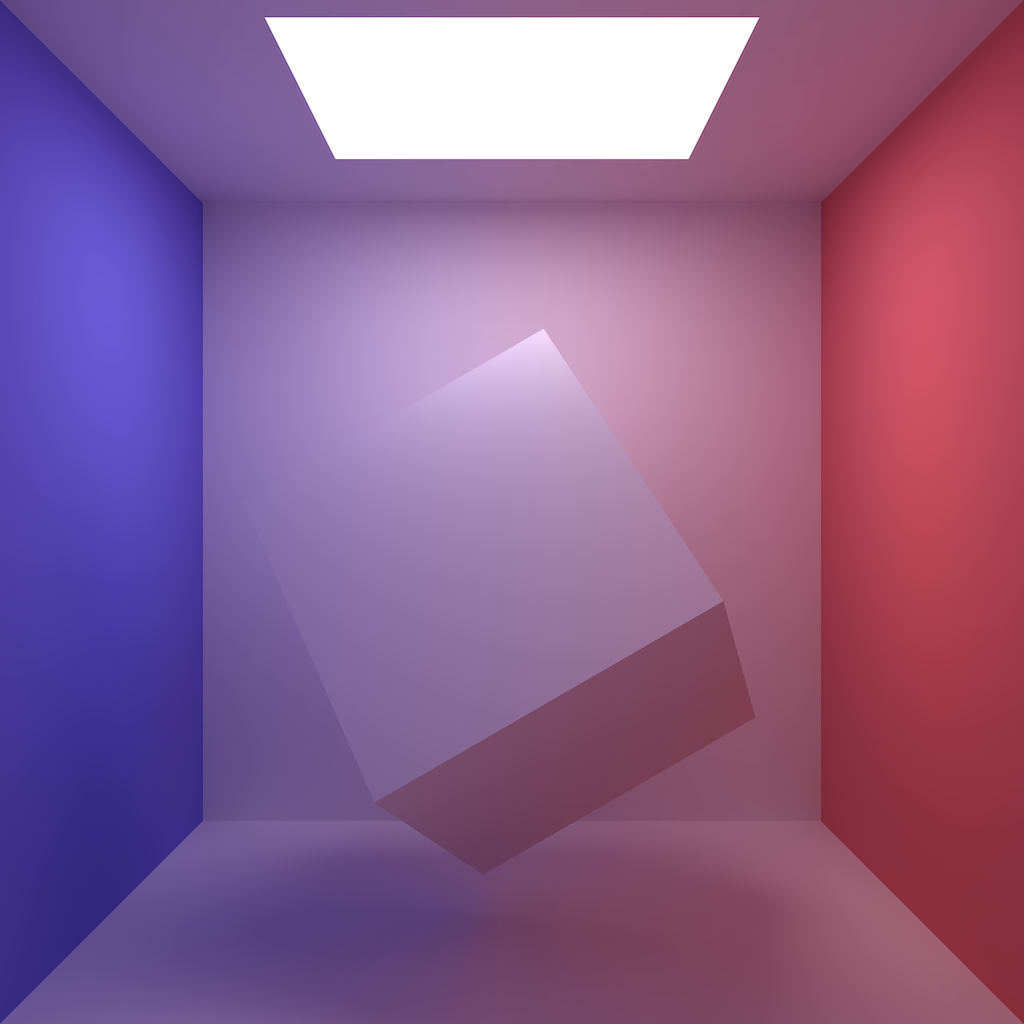

Radiosity

I can't believe it's been so long since I did my ray-tracing toy. Anyway, another graphics approach I've always wanted to try is radiosity. So, I've finally been working on it. It's taken a while to implement as I've been trying to be careful around the maths and have somewhat limited spare time, but a couple of days ago I had some minor surgery (yay, hernia op) which has given me a little bit of quiet time, and now it's done. Code's up on github, and another itch scratched. :)

I'm rather pleased at how it's turned out. The way the red and blue wall colours have leached into the surrounding white surfaces, and the way the soft shadows and darkening in the corners have worked out, makes me really rather happy!
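The colour leaching falls out of the basic radiosity equation, where each patch's brightness is its own emission plus the reflected share of what arrives from every other patch: B_i = E_i + rho_i * sum_j F_ij * B_j. A minimal Python sketch of solving that by repeated substitution follows. It is not the code from the repository; the three-patch scene, the reflectance values and the form factors are all made up for the illustration.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=50):
    """Iterate B_i = E_i + rho_i * sum_j F_ij * B_j for one colour channel."""
    n = len(emission)
    b = list(emission)
    for _ in range(iterations):
        b = [
            emission[i]
            + reflectance[i] * sum(form_factors[i][j] * b[j] for j in range(n))
            for i in range(n)
        ]
    return b


# Hypothetical scene: patch 0 is a light, patch 1 a red wall, patch 2 a white wall.
EMISSION = [1.0, 0.0, 0.0]
RED_REFLECTANCE = [0.0, 0.8, 0.7]   # how much red light each patch reflects
BLUE_REFLECTANCE = [0.0, 0.1, 0.7]  # how much blue light each patch reflects
FORM_FACTORS = [                    # made-up fractions of light exchanged
    [0.0, 0.3, 0.3],
    [0.3, 0.0, 0.3],
    [0.3, 0.3, 0.0],
]

red = solve_radiosity(EMISSION, RED_REFLECTANCE, FORM_FACTORS)
blue = solve_radiosity(EMISSION, BLUE_REFLECTANCE, FORM_FACTORS)
```

The white wall reflects red and blue equally, yet it ends up with more red than blue, because the red wall next to it bounces mostly red light onto it.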

Posted 2018-08-10.

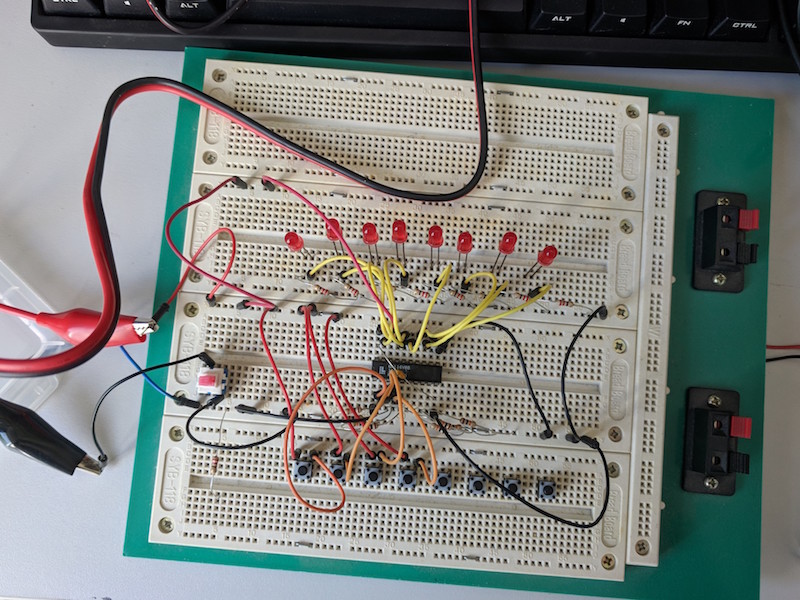

Playing with GALs

I haven't been doing much electronics for quite some time, but I've been thinking about my next project: A 68K-based machine. While I could do all the glue with TTL, or go the other way and put everything on an FPGA, I would like something that keeps the spirit of discrete logic, but isn't so fiddly. Enter GALs, the follow-up to PALs and PLAs, and happily erasable and reprogrammable.

I bought a few off eBay, downloaded some equation-compiling software (open source - yay!), and I'm off. Compiling the JEDEC files was simple, and using my "Minipro" programmer was even more so - it supports Lattice GALs, even if it doesn't support the "compatible" Atmel parts. All I needed to do was put it in my test circuit and watch it work!

I built a test circuit, and... it didn't work. This is fun, compared to TTL, as there's whole new ways it might not be working. As well as electrical problems, the JEDEC file could have been prepared incorrectly by the compiler, or applied to the chip incorrectly by the programmer. Or it's just a hardware screw-up.

I looked around, and found a couple of bugs in the compiler, albeit ones that didn't affect the JEDEC output. It did not inspire me with confidence. After a while, though, I suspected the hardware. Were my output LEDs too much load? Had I screwed up the decoupling capacitors? Put some pins into the wrong state? Bad chip?

After much faffing around, it turns out the batteries I was using to power the circuit were running low. D'oh. A quick switch to the bench power supply, and it was running perfectly. Ho hum. The wasted time was painful, but having some simple programmable logic devices is now opening up whole new (if small :) vistas of opportunities for me!

Posted 2018-05-08.

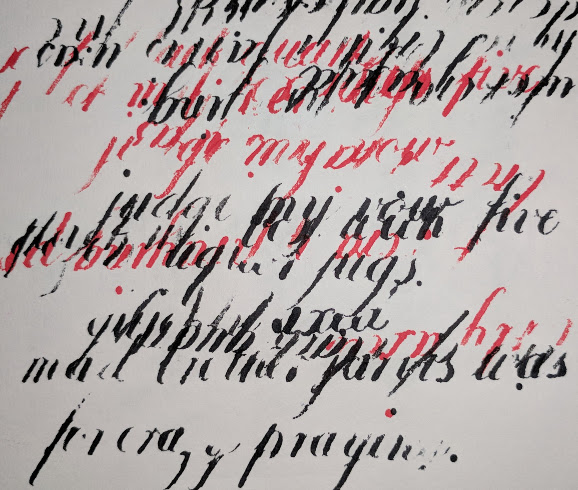

On Learning to Write Copperplate

A few months ago I decided to attempt to learn copperplate writing. My handwriting is awful, and I thought it'd be interesting to produce some writing that actually looks rather nice, even if calligraphy turns out to be really rather unrelated to everyday handwriting (dip pens are inconvenient for taking notes).

It turns out that learning copperplate calligraphy is rather akin to learning the violin because you liked the sound produced by expert violinists.

It's still a fun hobby, but I'm still trying to think of any practical applications for ugly, bad calligraphy. :D

(Bonus feature: The blotting-paper image of my practice is a bit more fun, as the fuzz hides the worst of my mistakes...)

Posted 2017-12-30.

Worse is Better: Lego

You may be aware of Worse is Better. I have just noticed another example that has been staring me in the face for years.

My mother-in-law played with Bayko while growing up, and obtained some for our children to play with. The rod and block system is more complicated than Lego, but produces better buildings. For example, it has special, fiddly bricks to make the corners look good, and that works. Lego produced inferior models, but was more successful commercially. Lego is "Worse is Better".

However, this goes much further than just a comparison to Bayko. Lego models often don't look like the things they represent. They look like Lego models of those things. They put a bound on the difficulty that goes into producing the model and the accuracy that can be produced, and limit the complexity of the model, compared to, say, a genuine scale model that strives for accuracy. The little bumps are pretty much an aesthetic feature - they can be a code for "in the real world, there'd be fine-grain detail here".

This isn't a bad thing. After all, by working within self-imposed constraints, you can often create fresh and interesting things. Lego models can be distinctive, and the ways of using limited palettes to best advantage can be very creative.

The limitations of the material are what make it so good. Worse is Better.

Posted 2017-04-16.

Further Reversing of 'Head Over Heels'

A few years ago now, I started reversing Head Over Heels. It was quite a bit of fun, but eventually I stalled. I've done incremental work, but really wanted to make a bit of a push.

What's really got me unblocked recently is to process the labels in the code and build a call graph, visualised using dot. While I could poke around before, looking for likely chunks of code to reverse, a call graph makes things much clearer - it's obvious which subsystem a chunk of code ties into.
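A minimal sketch of the label-to-call-graph step, in Python. It assumes a source layout of my own choosing (labels ending in a colon, `;` comments, plain `CALL` instructions with an optional condition), which may not match the real disassembly, and it treats every label as a function, which is a simplification. The routine names in the example are invented.

```python
import re

LABEL = re.compile(r"^([A-Za-z_]\w*):")
# Matches "CALL Target" and "CALL NZ,Target"; captures the target label.
CALL = re.compile(r"^\s*CALL\s+(?:\w+,\s*)?([A-Za-z_]\w*)", re.IGNORECASE)


def call_graph(source: str) -> set:
    """Return (caller, callee) edges, attributing calls to the latest label."""
    edges = set()
    current = None
    for line in source.splitlines():
        line = line.split(";", 1)[0]  # strip comments
        m = LABEL.match(line)
        if m:
            current = m.group(1)
            line = line[m.end():]     # allow an instruction after the label
        m = CALL.match(line)
        if m and current is not None:
            edges.add((current, m.group(1)))
    return edges


def to_dot(edges) -> str:
    """Render the edges as a Graphviz digraph."""
    lines = ["digraph calls {"]
    for caller, callee in sorted(edges):
        lines.append(f'    "{caller}" -> "{callee}";')
    lines.append("}")
    return "\n".join(lines)


EXAMPLE = """\
Main:
        CALL DrawRoom      ; hypothetical routine names
        CALL NZ,PlaySound
        RET
DrawRoom:
        CALL PlaySound
        RET
PlaySound:
        RET
"""
```

Writing `to_dot(call_graph(EXAMPLE))` to a file and running it through `dot -Tpng` gives a picture of which routine ties into which.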

From there, I found that the best approach was bottom-up - identify what the small chunks do, and go from there - and I think I've finally pulled apart the full structure and all the functions, even if I haven't got all the details sorted. Currently it stands at 13K lines of assembly, including comments and blank lines. Data gets added on top.

I think I'm pretty much there. The first 80% is there, and I just need to get that last 80% of polish in place to have a nice, clean fully-reversed Head Over Heels. Fun, fun, fun.

Posted 2017-04-07.

Unicode combining characters

I'm a bit behind the times, but today I learnt the details of Unicode combining characters.

This means I can now p̤̮ṳ̮t̤̮ s̤̮m̤̮i̤̮l̤̮e̤̮y̤̮s̤̮ ṳ̮n̤̮d̤̮e̤̮r̤̮ l̤̮e̤̮t̤̮t̤̮e̤̮r̤̮s̤̮.

I think you'll agree this was time well-spent.
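For the curious, a minimal Python sketch of the trick. It assumes the marks involved are COMBINING DIAERESIS BELOW (U+0324) for the eyes and COMBINING BREVE BELOW (U+032E) for the mouth, which is my reading of the rendered text above; the function name is made up.

```python
import unicodedata

DIAERESIS_BELOW = "\u0324"  # COMBINING DIAERESIS BELOW: the two "eyes"
BREVE_BELOW = "\u032e"      # COMBINING BREVE BELOW: the "mouth"


def smiley_under(text: str) -> str:
    """Append the two combining marks after every letter in text."""
    out = []
    for ch in text:
        out.append(ch)
        if ch.isalpha():
            out.append(DIAERESIS_BELOW + BREVE_BELOW)
    return "".join(out)


decorated = smiley_under("put smileys under letters")
# NFC folds a base letter and mark into one code point where one exists.
composed = unicodedata.normalize("NFC", decorated)
```

Combining characters follow the base character they modify, so the function only has to append them. Normalising to NFC can then change the code points without changing the appearance: "u" plus the diaeresis below has a precomposed form, so it collapses into a single character with the breve still trailing after it.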

Posted 2017-04-07.

Linux 1.0 kernel source reading - 14: Sound drivers base layer

Looking at the sound code, the subsystem can be divided into 4 layers:

- Linux-specific interface

- Generic driver layer

- Device type layer

- Device driver layer

In this entry, I'll be dealing with 2 1/3 of these layers!

The sound subsystem is supposed to be OS agnostic, with the majority of the code being reusable (with macros for some of the OS-specific interfacing, like dealing with waiting). "configure.c" is the configure script for Linux sound. Yes, a configuration script written in C. It was a barbaric time. "soundcard.c" is the entry point for Linux, and "os.h" provides an abstraction layer for the rest of the code.

The main switching point, as it were, is "sound_switch.c". It implements the status device in a mildly nutty way - there's a fixed, shared buffer for the status message, so only one person can have it open at a time. For everything else, it delegates, calling functions audio_*, sequencer_*, MIDIbuf_* and mixer_devs* for the per-device-type operations.

In the third layer, "audio.c" handles the generic playing of samples, delegating in turn to the specific drivers (which I haven't read) through "dev_table.c". "dmabuf.c" handles DMA in a generic way, called from the specific drivers.

And that's where I've got to so far. Next up, I plan to poke around the other abstract device types, and then look at the drivers for a simple sound card.

Posted 2017-02-11.

Linux 1.0 kernel source reading - 13: More file systems

A few months ago I read the rest of the Linux filesystem code, wrote a few notes, and forgot about it. This write-up is therefore going to be a bit vague.

nfs is centred on proc.c, which implements all the tedium of RPC, and then the rest is pretty much wrappers around this.

msdos - all I have is a note "Thoroughly bored by namei.c". I can't tell if this particular file was boring, or if this was the last file in the directory, and I was bored by that point. There's a little bit of caching around the FAT layer because FAT is basically a poor structure for large file systems, but I don't remember much else being interesting about it.

hpfs The implementation is all in one file, but it's pretty readable nonetheless, perhaps because of its limited scope - it's read-only, which makes life a lot easier. It appears to be based on an old paper, rather than solid knowledge - a bit of a reverse engineering exercise by the looks of it! The code is interestingly written in a top-down style (a style discouraged by C's scoping) - I think this helps with its readability.

ext2 The famous, venerable ext2! Compared to ext, there are a bunch of little, neat changes. You can put a small amount of data inside the inode itself, for "fast links". Block sizes are now variable. There's preallocation code, so that blocks can be allocated together for efficiency. There are a certain number of blocks per group, which hold together blocks and inodes, presumably also for locality. Bitmaps for inodes and blocks are back - I guess this is to allow support for preallocation.

On top of the basic storage level, there's a directory entry cache for files with short names - which is what you end up needing if directories aren't structured for fast search. Rename locking is improved, which is nice as the whole area looks like a pile of race conditions waiting to happen. There are plans for ACLs, but they aren't implemented. And one of the stranger things it has is automagic upgrade of filesystems from the 0.2b version. Putting that in the fs layer itself seems a bold move to me, but there you go.

... and that's it! File systems are done. Next up: Sound devices, which should make a nice change from the core, standard stuff.

Posted 2017-02-11.

Linux 1.0 kernel source reading - 12: Some file systems

Back to a fairly core component of Unix systems: The filesystem. Linux 1.0 supports ten filesystems, and I tried to start at the "easy" end and work my way up...

isofs Read-only is simple, right? Unfortunately, there's a fair amount of mess to it, and lots of stuff like Rock Ridge implementation and so on, so it's painful to read at the detailed level (I skipped those details). On the other hand, the structure makes it easy to see the big picture.

ext This looked like a good option next. Not as big as ext2, but somewhere in that ancestry. And... yeah, it's quite readable. It's a fairly traditional Unix-y filesystem, and it's all straightforward and pleasant if you don't worry about races.

If you do worry about races, there's plenty of subtlety. We're back in the early days of Linux, so no SMP or random interruption of your FS operations, but if you do any I/O you might get context-switched to something else trying to operate on the same filesystem. "Truncate" is particularly messy, but the whole thing makes me twitch.

There's nothing enforced by the language - it's all "write your code carefully and hope". And... this somehow seems topical, given the recent discovery of the "Dirty COW" race condition that's left a privilege escalation in Linux for the last 10 years. Sigh - we need to do better.

minix It turns out ext is basically a modification of minix. The differences are relatively minor - ext has nice improvements in that the bitmap of used blocks and inodes is replaced with a free list, block numbers are 32-bit rather than 16-bit and triple indirect blocks exist. So, I'm reading things backwards. The removal of free block/inode bitmaps actually makes ext easier to read, though, so it all worked out ok in the end!
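To make the bitmap-versus-free-list difference concrete, here's a toy sketch of the two allocation strategies. It's purely illustrative: the class names are made up, and neither class reflects the real minix or ext on-disk layout.

```python
# Illustrative only: two toy allocators, not the minix or ext on-disk formats.

class BitmapAllocator:
    """minix-style idea: one flag per block, scan for the first clear one."""

    def __init__(self, nblocks):
        self.used = [False] * nblocks

    def alloc(self):
        for block, in_use in enumerate(self.used):
            if not in_use:
                self.used[block] = True
                return block
        return None  # no free blocks left

    def free(self, block):
        self.used[block] = False


class FreeListAllocator:
    """ext-style idea: free blocks are chained together, take from the head."""

    def __init__(self, nblocks):
        # next_free[b] is the block that follows b on the free list
        # (None marks the end of the list).
        self.next_free = {
            b: (b + 1 if b + 1 < nblocks else None) for b in range(nblocks)
        }
        self.head = 0 if nblocks else None

    def alloc(self):
        block = self.head
        if block is not None:
            self.head = self.next_free.pop(block)
        return block

    def free(self, block):
        # Freed blocks go back on the front of the list.
        self.next_free[block] = self.head
        self.head = block
```

The free-list version never scans anything, which is roughly why the code around it ends up shorter, at the cost of losing any cheap way to ask "which blocks near this one are free?".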

By now, I noticed that all the file systems are following the same stereotyped code structure - to the degree that many of them are clearly forks of the minix filesystem.

xiafs is another modification of minix. I assumed "xia" was an abbreviation for something (cf minix, ext - lots of "x"s), but it's just written by Frank Xia! I can see why xiafs was a bit of a dead end - while it dealt with allocations in zones rather than blocks, it doesn't really add much else, and I can see why we followed the path from ext instead.

sysv is another copy of minix, rather neatly designed to cope with several different variations of the sysv filesystem used by other Unices. As I say, rather neat. It's interesting to see how these various Unix filesystems a) have similar structures to each other, and b) really are quite simple to understand, with simple code to implement them.

proc After several similar file systems, this was a nice distraction and a good example of semi-abusing the fs infrastructure! I remember it being a really cool feature as I first played with Linux, but the implementation is mad. I noticed a bug in that if CONFIG_INET is disabled, then /proc/net/unix appears as a file but doesn't work due to inconsistent placement of #ifdef-ing.

There's a fair amount of filesystem code, so I'll save the rest for another post.

Posted 2016-10-24.

Linux 1.0 kernel source reading - 11: Networking hardware

After working through block devices, I ended up going even further afield: Network card drivers.

My, Donald Becker was busy here. His code is nice and readable. One fun side effect is it means that a significant proportion of the kernel is copyrighted by the US Govt., represented by the Director of the NSA. Take that, conspiracy theorists!

The 8390 was one popular chip in the '90s. I read the code associated with this particular chip, and then all the card drivers based around it (largely varying in autodetection and how the buffers are mapped). Once I understood the pattern, the various other non-8390 drivers made sense.

This is not the most glamorous of directories, but it makes a nice change from the filesystem-y stuff.

Posted 2016-10-24.

Linux 1.0 kernel source reading - 10: Block devices

I'd just finished the filesystem-type-independent part of the fs/ directory. So, what would be the logical next step? The filesystems? Well, I was bored of that directory, so I actually decided to read up on the block devices. I guess this kinda makes sense - you can use the filesystem-independent layer to read and write block devices, and the actual filesystems are built on top of the block devices.

Anyway, here I am looking at block devices. I'd already forgotten fs/block_dev.c, which much here relies upon, so I had to go back and read up on it.

ramdisk.c is (unsurprisingly) very simple, but made a little more complex/fun by the "load the ramdisk contents from boot disk" element.

genhd.c is a really badly named file for the functionality that parses partition tables.
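For a flavour of what "parsing partition tables" involves, here's a minimal sketch that decodes the four primary entries of a classic PC boot sector. It assumes the standard MBR layout (table at offset 446, 16-byte entries, 0x55 0xAA signature) and is not a transcription of genhd.c; the function name is my own.

```python
import struct

SECTOR_SIZE = 512
TABLE_OFFSET = 446        # the partition table starts here in the boot sector
ENTRY_SIZE = 16           # four 16-byte entries follow
SIGNATURE = b"\x55\xaa"   # last two bytes of a valid boot sector


def parse_partition_table(sector):
    """Return (type, start_lba, sector_count) for each non-empty primary entry."""
    if len(sector) != SECTOR_SIZE or sector[510:512] != SIGNATURE:
        raise ValueError("not a valid boot sector")
    partitions = []
    for i in range(4):
        start_of_entry = TABLE_OFFSET + i * ENTRY_SIZE
        entry = sector[start_of_entry:start_of_entry + ENTRY_SIZE]
        # Fields: status, CHS start (3 bytes), type, CHS end (3 bytes),
        # LBA start, sector count. All little-endian.
        _status, _chs_start, ptype, _chs_end, start, count = struct.unpack(
            "<B3sB3sII", entry
        )
        if ptype != 0:  # type 0 means the slot is unused
            partitions.append((ptype, start, count))
    return partitions
```

Extended partitions, which chain further tables off one of these entries, are left out of the sketch.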

ll_rw_blk.c provides low-level block device handling, although the work is split between this file and functionality smeared elsewhere through the kernel. Slightly weird.

The algorithms involved are also quite dumb - for example, there are linear scans, rather than trying to use free lists and hash tables and stuff like that. When you're doing lots of I/O on a smallish system I guess the algorithmic complexity isn't so important, and simplicity pays dividends, especially in C. I think I'd find it difficult to keep it that simple.

hd.c implements the actual low-level I/O with a hard disk. I must admit, I got bored and lost.

xd.c is an XT hard disk controller. I'm pretty sure that's heavily retro, even in the early 90s. One of the few files with more than 80 columns in the source. Still, feels more readable than hd.c.

floppy.c - Linus says he doesn't like programming floppies. Reading this file, I see why. On the other hand, it's well-commented. Perhaps that's the flip side of having to code unpleasant stuff.

mcd.c is a CDROM driver. Well-commented and pretty understandable. Long lines. Looks race-condition-tastic to me as use of getMcdStatus sleeps, which could interleave driver calls in different processes?

cdu31a.c is a Sony CDROM driver. Polling-only. Lots of long functions - "get_data" and "scd_ioctl". Very tempted to start just skimming these bits of source.

sbpcd.c is another dreary CDROM driver. Funny indentation, lot of comments. Lots of conditional compilation and debug. Very enterprise, very tedious. Just skimmed.

Looking at these drivers, both in quality and quantity, I can see how Linux would have seemed like something of a joke in the early '90s, compared to any commercial effort.

Posted 2016-10-16.

Linux 1.0 kernel source reading - 9: fs/ buffers etc.

I've been reading more source for a while, but let's catch up on the write-up. In this post, I'll cover the remainder of the non-fs-type-specific code from the fs/ directory.

You may notice my descriptions getting terse as I get a bit bored of the same old stuff, at this point.

pipe.c and fifo.c implement pipes, as you might guess. fcntl.c is boring. locks.c is very well-commented. Perhaps even overly-commented! It doesn't stop "lock_it" being hairy, and I'm too lazy to read it properly.

open.c actually contains a bunch of inode-twiddling syscalls. It's a bit sad to see the level of C&P between the sys_XXX and sys_fXXX syscalls. read_write.c is just a few syscall wrappers around the underlying functionality. select.c... well, it shows you how the select system call is implemented over the kernel waiting mechanism.

Finally, stat.c is a surprisingly boring set of syscalls to end off with.

At this point, I believe I've read all of the "core" OS. What remains is device drivers, filesystem implementations and the networking infrastructure. As tends to be the way with these things, the majority of the code is in these peripheral parts. So, plenty to go.

Posted 2016-10-16.

Linux 1.0 kernel source reading - 8: fs/ buffers etc.

Well, it's been a year since I last did one of these posts, it seems. Gosh. Time flying.

buffer.c does a lot of looping around the buffer list for nr_buffers * 2 elements. Not explained in any comments. I guess it's an, ahem, heuristic for dealing with race conditions? "free_list" confused me for quite a while, but it's actually more a "freeable" list, containing things that are free or able to be freed.

inode.c is similar, but for inodes rather than buffers.

super.c - yep, superblocks. Reading this code, and the million calls to "iput", I'm so thankful for smart pointers and RAII.

namei.c demonstrates cut-and-paste code for a bunch of system calls. "unlink" and "rmdir" lack synchronisation, while the others have it. I guess this is because you can't race on named entries when all you're doing is removing them. No explanation in the source, obviously.

block_dev.c is fairly obvious, although the read-ahead infrastructure is nice.

devices.c has a magical default device file operations structure, which just replaces itself with the device-specific operation structure when open is called. That's kinda nutty.
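The self-replacing trick is easier to see in miniature. This is a rough sketch of the idea with hypothetical names throughout (`File`, `TtyOps`, `DRIVERS`, the major number 4); it mirrors the pattern, not the kernel's actual structures.

```python
# Hypothetical names throughout; this mirrors the idea, not the kernel's structs.

class File:
    def __init__(self, major):
        self.major = major
        self.ops = DEFAULT_OPS  # every device file starts with the placeholder


class TtyOps:
    """Stand-in for a real driver's operations table."""

    def open(self, f):
        return "tty opened"

    def read(self, f):
        return "tty data"


DRIVERS = {4: TtyOps()}  # major number -> registered operations


class DefaultOps:
    """Placeholder whose only job is to swap in the real driver on open."""

    def open(self, f):
        real = DRIVERS.get(f.major)
        if real is None:
            raise OSError("no such device")
        f.ops = real            # replace ourselves...
        return f.ops.open(f)    # ...and forward the open call


DEFAULT_OPS = DefaultOps()
```

After the first `open`, every later call on the file goes straight to the driver, so the placeholder is never seen again for that file.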

Lessons: I think I keep saying this as I read the code, but C is a horrible language for this kind of thing, and I love RAII to track resources, and I really like Don't Repeat Yourself code. Structs/objects should either be completely initialised, or not at all, so that things are always in a sane state. The use of NULL for "do default action" is crazy. Tony Hoare had the right idea about NULL.

Posted 2016-09-19.

Electronics for Newbies: Disk writing

After a small amount of work, I now have disk writing working for Dirac. A few stupid bugs, and it seems to do the job:

CP/M-80 Version 2.2c For the Dirac SBC
a>dir
A: ASM COM : BIOS ASM : CPM SYS : DDT COM
A: DEBLOCK ASM : DISKDEF LIB : DSKMAINT COM : DUMP ASM
A: DUMP COM : ED COM : LOAD COM : PIP COM
A: READ ME : STAT COM : SUBMIT COM : XSUB COM
A: WRITER COM
a>ren readme.txt=read.me
a>dir
A: ASM COM : BIOS ASM : CPM SYS : DDT COM
A: DEBLOCK ASM : DISKDEF LIB : DSKMAINT COM : DUMP ASM
A: DUMP COM : ED COM : LOAD COM : PIP COM
A: README TXT : STAT COM : SUBMIT COM : XSUB COM
A: WRITER COM
a>pip readme2.txt=readme.txt
[...]
CP/M-80 Version 2.2c For the Dirac SBC
a>dir
A: ASM COM : BIOS ASM : CPM SYS : DDT COM
A: DEBLOCK ASM : DISKDEF LIB : DSKMAINT COM : DUMP ASM
A: DUMP COM : ED COM : LOAD COM : PIP COM
A: README TXT : STAT COM : SUBMIT COM : XSUB COM
A: WRITER COM : README2 TXT
a>

The "[...]" was because the warm boot code had a bug (now fixed), requiring a restart, but otherwise things are looking pretty good!

Posted 2016-07-30.

Electronics for Newbies: A working copy of CP/M

Last time I left off, I had a broken copy of CP/M. I've now whacked the basic bugs on the head, and it can run some simple executables. For some reason, MBASIC isn't working, but I'll leave that for another day.

There were various minor odds and ends, but in the end it came down to two problems, both embarrassing in retrospect. The first was that the whole thing was pretty unstable. I could run a "dir" command, and it would work, more-or-less (see below), but then crash the second time I tried the command. It smelled like memory corruption - but what could be going on? The BIOS code I wrote was really very simple and I saw no reason for it to be doing bad things.

Eventually, I worked out it was the memory mapping. The same page was mapped in twice, so modifying it in one mapping corrupted it in the other. This in turn happened because I configured the mapping of the final page (mapping over where the EEPROM image goes, which I only do once we're done with the monitor) in two places - originally, I set up the mapping in CP/M initialisation code, but then I moved it to the boot loader... however, the bit of code left in CP/M got out of date, and clashed with the mapping for the rest of memory. Ooops. I removed the copy in the CP/M initialisation code, and the problem went away.
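The failure mode is easy to reproduce in a toy model. The sketch below assumes a made-up mapper with four logical pages of four bytes each (nothing like Dirac's real page sizes or registers) and shows how a write through one mapping shows up in the other when two logical pages point at the same physical page.

```python
# Toy model: page counts, sizes and the map layout are invented for
# illustration, not Dirac's real memory mapper.
PAGE_SIZE = 4


class BankedMemory:
    def __init__(self, page_map, physical_pages):
        self.page_map = list(page_map)  # logical page -> physical page
        self.ram = bytearray(physical_pages * PAGE_SIZE)

    def _physical(self, addr):
        page, offset = divmod(addr, PAGE_SIZE)
        return self.page_map[page] * PAGE_SIZE + offset

    def write(self, addr, value):
        self.ram[self._physical(addr)] = value

    def read(self, addr):
        return self.ram[self._physical(addr)]


def aliased_pages(page_map):
    """Return the physical pages that appear at more than one logical page."""
    seen, dupes = set(), set()
    for phys in page_map:
        (dupes if phys in seen else seen).add(phys)
    return sorted(dupes)


# Logical pages 0 and 3 both point at physical page 0.
bad = BankedMemory([0, 1, 2, 0], physical_pages=4)
bad.write(0, 0xAA)    # store through the first mapping
bad.write(12, 0x55)   # store through the "other" page clobbers it
```

A check along the lines of `aliased_pages` run over the final page map would have flagged the stale mapping straight away.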

However, all was not well. "Dir" only ever returned a single entry, and "type" read the file but then gave up after a single character. I started digging into the source of "dir" in order to understand the problem... and it was very, very silly! Both "dir" and "type" stop if you press a key. My terminal emulator was set up to send "\r\n" at the end of a line, but CP/M only needs the one. It treats the other character as another keypress, and immediately gives up. I changed my terminal emulator settings, and suddenly everything was good:

CP/M-80 Version 2.2c For the Dirac SBC
A>dir
A: MBASIC COM : BHEAD BAS : BHEAD COM : MORE C
A: MORE COM : LOAD COM : RW13 COM : APDOS COM
A: BHEAD C : CPM56 COM
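The "\r\n" problem can be modelled in a few lines. This is a sketch under my own assumptions: the function names are hypothetical, and the loop only imitates the "stop if a key is waiting" behaviour rather than reproducing the CP/M source.

```python
from collections import deque


def leftover_after_enter(line_ending):
    """Model the console: the first character ends the command line,
    and anything after it stays in the input buffer."""
    return deque(line_ending[1:])


def list_directory(entries, pending_keys):
    """Show entries one at a time, aborting as soon as a key is waiting.

    `pending_keys` stands in for the console input buffer.
    """
    shown = []
    for entry in entries:
        shown.append(entry)
        if pending_keys:          # a keypress is waiting: treat it as "stop"
            pending_keys.popleft()
            break
    return shown


files = ["MBASIC.COM", "BHEAD.BAS", "BHEAD.COM"]
with_crlf = list_directory(files, leftover_after_enter("\r\n"))
with_cr = list_directory(files, leftover_after_enter("\r"))
```

With "\r\n" the stray "\n" counts as a keypress and the listing stops after one entry; with a bare "\r" all the entries come out.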

There's an awful lot left that's basically not right yet, but I finally feel that the basics are there, and this is in some sense a working CP/M computer.

Posted 2016-07-16.