Simon Frankau's blog

Ugh. I've always hated the word 'blog'. In any case, this is a chronologically ordered selection of my ramblings.

Linux 1.0 kernel source reading - 3: Kernel entry points

The bottom-up approach I was previously taking wasn't really helping me get a handle on the kernel's structure. If you squint and turn it upside-down, the kernel looks a bit like a library, admittedly one that wraps the code calling it, and with various other fancy bits. In any case, it seems that a sensible place to start is the points where we enter the kernel - the system calls, traps and interrupts. Rather than try to trace the start-up process further, we can assume the system's running, and see what it gets up to.

kernel/sys_call.S This file provides the low-level handlers/entry points, but doesn't actually install them. The handlers then call the core implementations - XXX calls through to do_XXX. The placement of the user mode segment into FS, used as described previously, is done here. "lcall7" is a mysterious name, but a little tracing shows that it's the iBCS emulation stuff.

ret_from_system_call and friends are here, and provide interesting complexity and functionality. They do a re-entrancy test, run the bottom halves, reschedule if required and post signals. There's also some virtual 8086 unpleasantness.

kernel/traps.c is the next layer up form sys_call.S, handling errosr and faults. The comment at the top refers to the non-existent asm.s. Mostly, it sends signals to the process involved, and kills things if something went wrong in kernel mode.

Most of the numbers in the kernel go through appropriately-named constants, but 'trap_no' has mysterious un-named values. A little bit of digging suggests they're related to iBCS emulation.

kernel/sched.c is, as the file comment says "the main kernel file.". It not only handles task switching and timers, but also contains "sys_call_table", so it's basically the entry point for all non-trap, non-interrupt situations. This is where we can see the "sys_*" naming convention for the naming of system call implementations. It also includes a few of the implementations themselves.

"switch_to" is the core of task-switching. This uses a funky x86 ljmp mechanism and TSS stuff I don't really want to understand. For some reason, I thought the mechanism used to save the registers when switching from user more to kernel mode might also be used when switching tasks, but apparently not. Hey ho!

This file also references a few core header files. "task.h" shows a maximum of 128 tasks. So ickle! "timer.h" deals with the timers managed in sched.c. It shows there's a transition going on between two ways of handling timers, with a nice comment explaining it all. Interetingly, timer_list is defined as a doubly-linked list and used as a singly-linked list!

Bonus feature! Having dealt with files defining the very core of Linux 1.0, I thought I'd read through a few more of the smaller and less challenging files in "kernel/". Not much to report for "info.c", "ioport.c", "itimer.c", "ldt.c" (x86-specific low-level twiddling, ick), "panic.c" and "vsprintf.c".

"module.c" and "ksyms.*" implement a basic module system (although nothing I've seen so far indicates it being used). This is quite fun. "time.c" handles the time, and reminds me how a kernel has to incorporate a bunch of really tedious stuff.

Posted 2015-03-29.

Inside a clothing security tag

Those security tags you get in clothes shops have always been mildly mysterious to me. Somehow, we ended up with a tagged item of clothing at home - looks like the shop assistant failed to remove it. Whatever alarm was supposed to go off as we left the shop either didn't, or was ignored. So, I got a hacksaw out and cut off the pin that goes through the clothing, and it all turned out fine. Then, I thought I would take a peek inside it.

I was a bit worried because I'd heard about those ink tags that stick permanent ink over eveything if you tamper with them. No such problem. Indeed, what I found was that the whole thing was nothing like tamper-proof at all. The main thing about the design, I'd say, is "cheap". It's a little strange to me that they're quite so cheap. After all, they're regularly recycled as they should never leave the shop! If they're marginally more expensive, but make theft that much more difficult, it sounds worthwhile.

Anyway, cutting off the back reveals a central bit holding the pin, plus a coil and capacitor:

The coil and cap are completely separate from everything else, forming an LC resonator for the alarms at the exits:

The rest is basically a shell to hold the pin-holding elements:

Taking apart that structure, you can see how it works:

The spring at the right pushes the small metal cap into the big metal cap, jamming the ball-bearings against the pin, as far as I can see. I'm surprised this provides so much force to make the pin so difficult to remove, but apparently it does! As far as the removal devices go, I think they're just Really Strong Magnets, since most of the chunks of metal involved are magnetic.

I guess this is just another example of looking inside a mysterious security device and finding out that the security involved is actually quite straightforward, prosaic and breakable!

Posted 2015-03-24.

Linux 1.0 kernel source reading - 2: libs and include/asm

Having read the initialisation code, I kind of understood how the kernel got itself into the right shape to get going, but not really how things ran from there. 'main.c' makes system calls as the init process, and then I kind of got stuck.

Where now? My first thought (which I must admit hasn't really worked!), was to try to dig into some of the utilities. By this, I mean the 'lib' directory, and the 'include/asm' directory - the former because some utilities/libraries sound a good bottom-up area to start with, and the latter because these headers kept getting included and causing magic to happen, so perhaps it would be good to understand what they're up to.

lib

There are basically two kinds of file here. System call stubs, and implementation of shared utility functionality. The former covers _exit.c, close.c, dup.c, execve.c, setsid.c, wait.c and write.c. These are stubs that call into the system calls (not implement them). Presumably, they were used to implement the initial processes in main.c. However, main.c now contains inline implementations, so I believe they're never used at all!

The others are generally paired with headers in include/linux. "ctype.[ch]" implements the C library functionality you'd expect (with a simple table lookup). "string.[ch]" implements standard string functions, most of the functionality coming from inlined grotty assembler in the header file. "errno.[ch]" implements the error codes (including plenty that are never used!). "malloc.[ch]" is the most complex part, but also very well-documented. It implements the kernel (small chunk) memory allocator, with a comprehensive debug mode if you need it. It sits on top of the page-level allocator.

include/asm

Some of the files really are just headers providing macro/inline functionality. Others turn out to be the header associated with a .c file that I also ended up reading...

In the former category, bitops.h provides atomic bit-twiddling, and io.h provides support for poking I/O ports. Memory-mapped I/O is clearly more pleasant! segment.h is really data-copy-to-and-from-user-space.h, which relies on the user data being mapped into the fs segment. How is fs set up like this? I don't know, so I'm obviously reading the code in the wrong order.

system.h contains 'move_to_user_mode', used by main.c. This is kind of interesting, in that it does move to user mode, but it's clearly not a user mode process, as it's still executing code from the kernel side. Presumably the 'exec' call fixes all that. This file also provides important interrupt-twiddling functionality, such as disable (cli) and endable (sti) interrupts. I didn't care for the low-level x86 details here, as I want to learn about the kernel, not x86isms.

Even though the kernel is a monolithic, single-threaded kernel, the attention that needs to be paid to race conditions is non-trivial. Interrupts can quite happily happen in kernel mode, so you end up either using atomic operations, or disabling interrupts when dealing with shared values.

The next couple of files are paired with .c files in the 'kernel' directory. 'dma.[ch]' is yet more low-level grubbing. There are fun comments about future plans now long past, and guesses about poorly understood hardware.

'irq.[ch]' are rather more core. irq.h contains macros to build up interrupt handlers. It culminates in BUILD_IRQ, a really ugly macro which builds a fast interrupt (only saves some regs), normal interrupt (all regs and may switch tasks when done) and bad interrupts (ignore). irq.c then uses the macro to define the handlers (and admits their ugliness), and has the code to initialise the interrupts and support the hooking of interrupts (which uses signal handling infrastructure).

By this point, reading the code, it's very clear that Linux 1.0 is an incredibly cheap-n-cheerful Unix clone. (It's not bad code at all, it's just not exactly fully-featured). If I were a commercial Unix vendor in the mid'90s, I'd be amused and very much not scared by it. On the other hand the rate of growth and change that happened after that is something astonishing.

Posted 2015-03-21.

Code reading: Lua 5.1.4

One of my resolutions is to read more of others' code. Especially decent open source code, as there's plenty of it and it provides a rather different view compared to work code. So, Lua seemed a pretty good choice as it's featureful, interesting and 17kloc in total. 5.1.4 is just the version I have lying around and use. Let's get started!

The code is really very compact and terse. It's written as if it's meant to be read on an 80x25 monitor. Much more squished up than the Linux source, and much less commented. I know comments can be misleading, but sometimes just trying to work out what the code is supposed to be doing is a bit painful. I think they're trying a bit too hard on the density.

Reading through the code, I can understand the general shape of what's going on, but I have very little confidence that I'm not missing bugs as I'm reading it. Unsurprisingly it's rather stateful, but it's also not clear what the invariants are, or how they are maintained. While functionality is split quite nicely across the source files, it's not well-encapsulated (C makes this a lot more difficult than C++, with objects).

To understand in depth, I think I'd need to trace the code as it runs, rather than just stare at the code.

The source is divided into the core part, and the libraries. I started with the core, which is broken down by function. Each source file is nice and short, generally a few hundred lines with the longest (the parser) being a touch over 1300 lines. I started with the periphery/base layers - the implementation of strings, tables, allocation etc. All fairly straightforward.

The chunkier parts are the VM, garbage collection, and code generation (the Lua input is converted to bytecode that the VM runs).

The Virtual Machine

The VM is stack-based, but it's not pure stack access. Each call frame has a base address, to which offsets are applied. It's rather like the Sparc moving register file. There's a separate stack for values (as just described) and calls, a bit like Haskell's STG.

Lua's "upvalues" (lexically-scoped variables) can be open or closed. If they're open, they're still live on the stack, and are represented by pointers to those values. When the item comes off the stack, they become closed, and become self-contained.

There's some weird GC handling of upvalues I don't quite understand. If an upvalue is unused, it can be resurrected if something else refers to it. I don't know if this is an optimisation, or just a way to prevent multiple upvalues pointing to the same actual value.

OP_CLOSURE's definition doesn't match what really happens. Or rather, instructions to load the values needed for the closure appear after the OP_CLOSURE instruction. This perplexed me both when reading the VM code and the code generation code!

Some of the opcodes seemed rather specialist, to deal with Lua's specific structures. Not unreasonable, I guess.

Garbage Collection

The idea of incremental garbage collection in Lua seemed mildly magic, given the hoops jumped through by languages like Java to do this kind of thing. In the end, the implementation is surprisingly pedestrian: The marking can happen incrementally, and if you put a garbage-collectable value somewhere outside the heap that's already been marked, add it to the "to process" list. Life is made a lot easier by the code being single-threaded, with the garbage collector being able to synchronously kick in at nice, safe points.

The code initially confused me with heap objects being black, white or grey. It took me a while to work out that black means marked (fully processed), white means unmarked, and grey means incompletely processed (we know it's accessible, but haven't chased through it). Obvious in retrospect, I guess. There are two whites, presumably so that we can distinguish "not yet marked in this iteration" from "should have been deleted already", and to avoid nasty mess around the transition.

Weak tables and finalisation provide a bit more fun, as usual. Ugh.

There are a few little bits of non-native-English-speaking amusement in the code. For example, the variable to hold the GC "debt" is "gcdept".

Code generation

This is split between the lexer, parser and actual code generation functions. The lexer and parser are hand-written. For this kind of thing, I certainly don't blame them. The parser is relatively long, but better-structured than the code generator, and makes the syntactically-driven code generation a bit clearer. The split of work between the parser and code generator does seem a little uneasy, though. Unclear invariants and repsonsibilities.

The code generator is mildly funky, mostly because it tries to perform peephole optimisations as it goes along. Jump handling is also a little tricky. It keeps lists of jumps that need their destinations overwriting as a linked list, linked through the jump destination field that will be overwritten. This is made more complex by the code that fills in that linked list, which has a special case not to do so if the target of the jumps is another jump (instead jump straight through to the final destination).

Similar tricks abound for handling variables and values, to make sure they end up in the right place at the right time without extraneous register moves. Boolean expressions are handled as expressions with jump pointers for the true and false cases, which I guess is a neater representation if you want to handle conditionals and short-circuiting nicely, but then there's extra conversion if you just want to treat the result as a boolean value.

Libraries

Having read through the core, the libraries seemed to make a nice wind-down coda. Mostly, it's wrappers around useful functions. "loadlib.c" is a bit interesting, as it breaks Lua's usual pretence of ANSI C, and has some quite platform-specific code. The string-matching code is... interesting, too. Clearly they want the code to be compact and simple, but the Lua regexp-alikes use back-tracking. Ugh.

Summary

The code, in itself, is readable, I guess. It never makes life easy, though. None of the above is documented explicitly, so you have to work out everything for yourself. Somewhat frustrating. I guess it's good exercise, though.

Posted 2015-03-11.

Linux 1.0 kernel source reading - 1: Initialisation

I should read more code. Working with other people and reading other people's code are good ways to learn, and I don't do enough of either. Working with other people is something I do at work, so I get some of that, but it doesn't get me looking at the code of world-famous, effective programmers. So, I should read more code.

I've wanted to read the Linux source for some time, but a modern version seems a bad place to start, with several million lines of source. The 1.0 kernel, on the other hand, has 170K lines total. Pretty practical.

It's something of a time capsule. I extracted it from my 1997 Linux Developer's Resource CDROM set (where it was already a few years old), and the feature-set is utterly different from even a few years later, let alone now. x86 only (no 'arch' directory). Single processor only. A floating-point emulator in case your CPU lacks an FPU. The number of drivers is... laughable. The design is clearly an extremely vanilla Unix clone - Tanenbaum's comments on Linux's retro design at the time seem very fair.

Looking at comments in the source, there are lines I'm reading going back 25 years, which is somewhat scary. When I first used Linux, a few years after that, aged 16, OS kernels seemed black magic - something I could compile and play with, but not truly understand. At the time, this was probably true, not just because of my inexperience, but also due to the lack of available documentation. There weren't wikis full of the minutae of x86 OS construction. Coming back at it now, it's wonderfully convenient, so I can fit it in at the end of a work day when the children are in bed.

Anyway, that's enough of the nostalgic back story. I'm reading the source. For warm-up, the FPU emulator and top-level docs, and then the boot process. My plan is to understand most things reasonably, but I'm not aiming for a fine-toothed comb on everything. I just don't have time.

drivers/FPU-emu I've also been reading a bit of Lua source. In comparison, the FPU emulator is wonderfully well-commented, even if I am skimming it. It makes it clear how much (tedious) work is required to get the details right in emulating an FPU. It also highlights the dearth of documentation available in the early '90s - the author notes they don't have access to a 486 or a copy of the IEEE spec, yet they still have a darned good go at emulating it!

CREDITS There are a decent number of recognisable names here. The most amusing is to see Raymond Chen, noted MS employee and "The Old New Thing" blogger, who contributed the Configure scripts.

Makefile Looking back, this thing is really very simple!

Configure A "little language" in the grand old tradition of Unix, it parses the "config.in" file and asks configuration questions off the back of it, and then generates the config files. Nice. As noted above, written by Raymond Chen.

config.in Wow, a seriously small number of drivers! It is warned that turning on verbase SCSI error warning will bloat your kernel by 12K!

build.c Before tackling the actual start-up process, I thought I should look at the tools used to construct the bootable image. "build.c" assembles the kernel from a boot block, a (16-bit) setup chunk, and then the main (32-bit) image. It's remarkably simple, perhaps because the files being glued together are very simple. The a.out format used makes it just a few lines of code to check the object file headers, strip them off, and glue them together into the final image.

zBoot/ The whole compressed image thing is charmingly straightforward, too. Rather than having a kernel with a base address of 0x1000, it is now based at 0x100000, and the initialisation code, er, starts at 0x1000, calls standard decompression code that'll undo gzip, and then calls into it at 0x100000. The tools that do all this are nice and simple.

inflate.c is hairy but standard (g)zip decompression implementation, so I skipped it.

boot/ Things move all over the place! "bootsect.S" gets loaded at 0x7c00, moves itself to 0x90000, loads "setup.S" after itself, and then puts the compressed system at 0x10000. "setup.s" calls a bunch of BIOS stuff, and moves the compressed system from 0x10000 to 0x1000 (obviously), before calling it in 32-bit mode. Most of the code seems to be about selecting and setting up the video mode.

At this point, we call into the "head.S" of zBoot, which decompresses the kernel, and then calls into the "head.S" of boot.

"head.S" is the final 32-bit start-up code. Turns out the initial start-up code is very similar to the "head.S" on zBoot, which isn't really that surprising. We then stash parameters down in the zero page and identify the processor. Set up paging, reload segment registers, and call "start_kernel"!

init/main.c Called by head.S. The first thing I see is lots of extern declarations in the .c file, and some shared structs being defined again. Very much not "Don't repeat yourself".

It starts everything up, moves into user mode as process 0, forks init (process 1) and enters the idle loop. How does entering user mode just work? It does a return from interrupt to the next instruction, only with the segment registers switched from kernel segments to user segments, which (for this process) are mapped to just be the same as the kernel segments, only user-readable.

"init" starts by calling the "setup" syscall. I need to find out how syscalls get dispatched! Then it tries to exec some version of init, and then there's a little bit of emergency fallback stuff. Done!

Posted 2015-02-19.

Malware-completeness

By rough analogy with Turing-completeness and NP-completeness, I think we have entered a malware-complete age. By this, I mean that it's pretty difficult for an individual to have confidence that their machine does not have malware.

Looking at all the NSA revelations, plus various other stories of state-sponsored hacking, it's clear that there are huge resources available. If a large government wants you hacked, you'll be hacked. They will have known exploits for pretty much all the software you use - look at how long Heartbleed was about, for example, to get an idea of what they probably know about.

Computer viruses really entered the public awareness in the early '90s, and the Morris worm was in '88. Reflections on Trusting Trust was '83, and I'm sure there's plenty more before that, so there's plenty of history to malware, but I think it's only recently that it's become impossible to reasonably harden a general-purpose computer.

The thing is that there's now so huge an attack surface. If you're got gigabytes of RAM and terabytes of disk, it's easy to hide things. You can have user-mode spyware, root kits, things that hide in virtualisation. You can probably do fun things at the microcode level, like a malicious version of the F00F bug fix. There's the BIOS. Everything will have a microcontroller and firmware in, keyboards, mice, SD memory cards, hard disks. All able to be subverted. Your internet router probably has a back door.

I was thinking to myself, 'What is the most useful computer I could feel confident working with, to feel my data would be properly safe, against government-style foes?'. Assuming they can't access my stuff physically (ha ha), and ignoring Tempest, etc., it would probably be a late '90s PC with an open source operating system, disconnected from networking and all external storage I/O disabled. And you know what? I still wouldn't trust it.

Posted 2015-02-19.

ToyTCP, a toy TCP/IP stack (well, not even that, yet)

I am reading through TCP/IP Illustrated (review to follow, obviously!). "Doing" seems to be rather more effective than just "Reading" for learning stuff, so I thought I'd write a little user-space TCP/IP stack.

I have a tendency to have many projects on the go at once and switch between them, so I have now hit such a switching point, having created the absolute minimum program that does something, namely negotiate the LCP level of PPP, badly. I now have code up at github. I'll do a little bit of something else, and come back to it, hopefully bringing it to the level where it can actually receive and drop an IP packet!

Posted 2015-02-10.

GCSE physics lies: Rainbows and total internal reflection

When I said I was done with ray-tracing, I was lying.

I've not done any more coding, but I thought I'd just write down something that I only realised while doing the ray-tracing work.

The story you get at GCSE-level physics about rainbows is that they're caused by total internal reflection causing the spherical raindrops to act like mirrors, and the angle of the arc is thus related to the critical angle, etc. The refractive indices are supposed to be slightly different for the different frequencies, hence the colours.

This simplistic answer is rubbish. If you take the path the light takes once it refracts into the sphere, you can use symmetry to show that the angle between the entry point and its normal, and the exit point and its normal is the same. Therefore, the external angles will be the same, and you will certainly never get TIR under this simplistic model. A physicist friend suggests rainbows are really about Fresnel reflection, but I don't know anything about that.

I noticed this behaviour when writing the ray-tracer, as I'd left in a "TO DO" case for dealing with TIR, and noticed it never got called when doing my refracting spheres demo. I thought a little bit, and had an epiphany. It's taken me nearly 20 years to realise that the GCSE explanation couldn't work!

Posted 2015-01-04.

Electronics for Newbies: An update on Dirac

Another year since my last update on my Z80-based single board computer. I've really not worked on it. The monitor I wrote was unreliable at reading, which made loading programs incredibly frustrating, so I kind of wandered off from it. Since then, I've got a new job, settled down into it, and started on electronics again by building a PCB to display a VGA-like signal, and by scraping the contents of a floppy disk. All psyched up and ready to attack the SBC again!

First up, it's been named Dirac, and has a github repo. The name's not particularly important, it just needed a better handle than "That Z80-based computer". Second, I finally decided to attack the unreliable serial input.

The SIO docs say that for async (RS232-like) input, if you're using the 1x serial clock, it should be synchronised to the input. I wasn't doing this. This seemed like a likely culprit. I needed to use the 16x clock mode on the SIO... but this required me to generate a 16x higher frequency clock from the CTC.

I was using the CTC in "timer mode", which prescales the counts by 16x or 256x. I was using 16x mode. In short, this prevented me from running the CTC signal fast enough for it to be 16x a sensible bit-rate for the SIO. I really wanted to run it without prescaling, but timer mode doesn't allow you to run without prescaling.

On the other hand, "counter mode" does allow you to run without prescaling, but it counts ticks on a trigger input, not clock cycles. However, if I feed the CPU clock into the (previously unused) trigger inputs, they behave rather like "timer mode" without prescale, which is pretty much what I want. Almost like they designed it like that, ahem. It would have been nice to have it as a suggested design, I guess.

So, I feed the CPU clock to the trigger lines, switch the CTC config from timer mode to counter mode, and switch the SIO from 1x to 16x clock, and everything's good and it reads serial data reliably! I make this sound straightforward, but there was plenty of faff involved.

But... not quite. Somewhere along the way, by switching CTC source, the tick rate has changed by a factor of 2, and the thing runs at 4800bps, rather than 9600. Unfortunately, the timer constant for setting up the CTC is a small odd number, so if I try to adjust it to bring the serial I/O back to 9600bps, it'll be waaaay off. I guess I'll just live with reliable 4800bps comms.

I've been reading up on CP/M, and at this point a minimal CP/M with RAM/ROM-disk is starting to look pretty plausible...

Posted 2015-01-03.

Electronics for Newbies: The software

Argh. Looks like it's been a year since my last update on my z80-based toy computer. Kind of tragic.

A number of things got in my way. I finally had a machine upgrade around last April, and my new machine had no parallel port for the EEPROM programmer. So, I bought a parallel port PCI card. Making the programmer software recognise the card on 64-bit Windows was a complete nightmare. Only after much, much faff did I manage to get it to twiddle the line levels as I expected.

However, it wouldn't program the chip. Probing the lines showed waveforms as I expected. Tracing the schematic through and checking, everything looked ok. I added some temporary pull-ups to see if it helped. It did not. My final guess is that there's just some marginal timing somewhere, and the PCI card and/or driver and/or whatever is just a bit naff and doesn't get it quite right.

In the end, I bought myself a Minipro TL866A USB-based EEPROM programmer. It cost 50 quid, but it's absolutely awesome, and just works, even on 64-bit Windows. Yet another decent recommendation by EEVBlog. (To be honest, the Willem EPROM programmer I'd been using previous had always been a bit of a pain. The fact the power cable is a USB cable with USB type A plugs on both ends is a bit of a hint that it might be cutting a few corners. Super-cheap, and you get what you pay for.)

So, I could now restart development, as I had a way of getting my programs back onto EEPROM. In the past, I'd been assembling the files on my laptop, and then sshing them over for programming. I thought life might be easier if I had the assembler running directly on Windows. My assembler of choice is kio's "zasm". I'm not sure this is a particularly good choice, given it appears to be a bit eccentric, but there you go. The source targets Unix, but I ported it to Windows. This was much harder than it needed to be, as it uses kio's utility libraries, which assume a whole pile of unixisms, despite the fact that the assembler doesn't really use that functionality. Hack, hack, hack and I finally have something that will assemble files.

Then I tried to write a simple monitor, by modifying some existing code. For some reason, it just wasn't playing ball. It would write individual characters to the serial port, but my incredibly simple string-writing routine kept being off by one character, when it wasn't actually just plain flaking out. After much hacking, I did get it print a string. The "\n\0" in the string constant was rendered literally, as I didn't realise zasm didn't handle C-style escapes, so I replaced it with a "$0a, $00" in the assembly. That worked better, except... my routine was printing a CRLF pair. Hang on... they're both in the ROM image, but not in the source. Further inspection reveals that all "$0a"s (including those in opcodes or operands) become "$0d0a", and this is why my string constant addresses are off, when the whole thing's working at all.

Stupid Windows, stupid zasm. zasm calls "fopen" with "r" and "w", rather than "rb" and "wb". This makes no differences on Unix, but on Windows it still controls line ending translation. And I certainly want no line ending translation on my binary files, thanks. A dumb thing to miss on my porting effort, and incredibly frustrating, as I'd been assuming my bug was somewhere in my assembly source, not the assembler. *sigh*

In any case, I can now make a little progress on my toy ROM monitor, and apparently have a working toolchain.

Posted 2015-01-03.

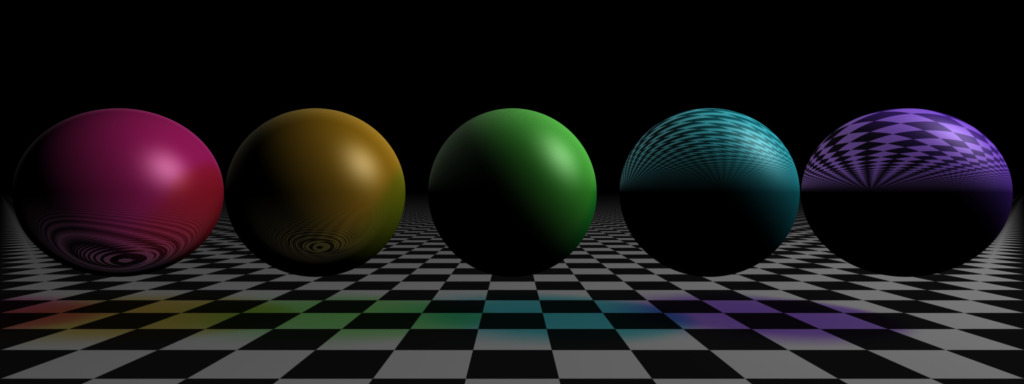

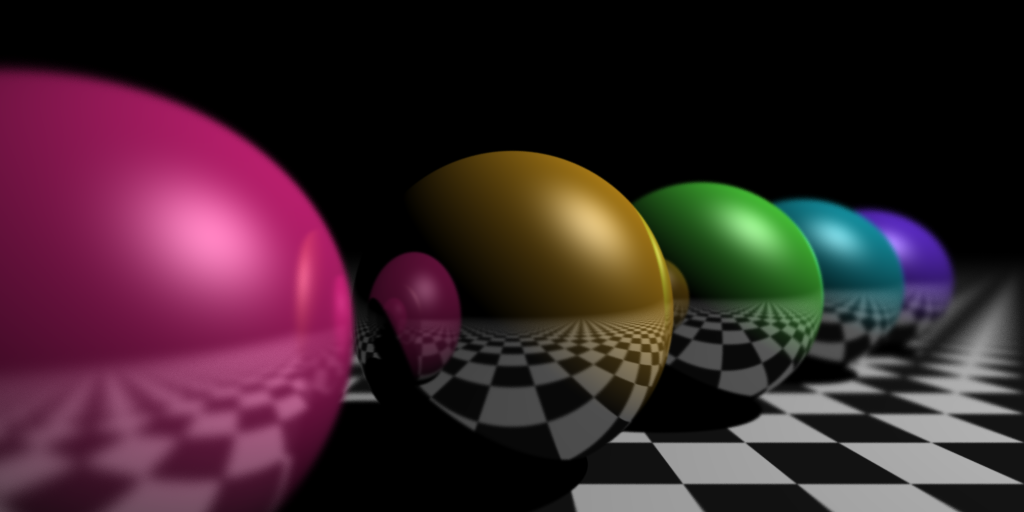

Ray-tracing fun: Transparency and refraction

So, I've been doing all the distributed ray-tracing effects, and this isn't one of them. It is a traditional ray-tracing effect, though. Ray-tracing's best claim to fame is fancy reflection and refraction. Turns out I don't like this one particularly. Refraction is actually a fairly unsubtle effect, and I can see why crystal balls were used for gazing into - it's almost impossible to see what's going on. If you render a sphere, it's basically a big lens, and setting a scene up to render so that you can properly see what's going on with the effect is a nightmare.

Nonetheless, I've had a go. I think it more-or-less works, but basically I don't care if there are bugs in it, as I've got an image out of it and I'm done with it.

(The image shows various different refractive indices - I quite like the one on the right, actually.)

One thing I didn't expect to be thinking about was the attenuation of light. I'm demonstrating transparent, coloured materials. The logical basic model is exponential attenuation. However, this reveals the problems in a basic RGB light model. Let's say I create a material that almost immediately attenuates blue, doesn't touch red, and mildly attenuates green. In this case, a thin piece of material looks yellow, and a thick piece looks red. In other words, the colour qualities change with the thickness of the material. So far, so nice - real materials can behave like this.

However, under this model, asymptotically the material can only become red, green or blue (or cyan, magenta, yellow or white, if the components are precisely balanced). The separation of selectively attenuating frequencies from a spectrum and then projecting into RGB space really does not work the same as applying the function component-wise. I vaguely remember reading something about this kind of stuff in Foley and van Dam, but this is actually the first time I've actually really dealt with it...

In any case, I think I'm done with all the basic ray-tracing effects now. Hurrah.

Posted 2015-01-01.

Distributed ray-tracing versus financial Monte Carlos

As I've been doing all these distributed ray-tracing extensions, I've been thinking how it compares to Monte Carlo pricing in finance. Theoretically, they're taking the same approach (paths with randomised variations) for rather different integration problems, in order to deal with the same limitation: The curse of dimensionality.

In finance, if you've got a path-dependent exotic option where PDE doesn't work, you've got not much choice but to simulate price changes across each of the relevant dates. If there are a lot of dates, this is suddenly a high-dimension problem, and MC is the way to go. In practice, for quite a lot of cases, there are actually some rather more important dimensions, and you can get quick convergence using Quasi-MC and Brownian bridges. The only problem is to make sure you're not screwing it up and getting the price wrong, which is... quite a big concern.

Anyway, assuming you are doing standard MC, what you really want out of your MC pricing is stability. If you make small bumps to the input, or move forward a day, or tweak a parameter, you don't want the price to change unpredictably. MC pricing noise is a fact of life, as MC convergence is slow. However, if this noise is consistent, you have a hope of getting stables greeks out, and being able to explain what happened with your profit-and-loss from day to day. You don't want noise.

In ray-tracing, I think the curse of dimensionality is rather less prevalent (maybe integration dimensions for anti-aliasing, for depth-of-field, for motion blur, soft shadows, etc. - less than ten in total). Just like with quasi-MC, we can also take some of the dimensions and simplify them with more traditional integration techniques (e.g. anti-aliasing by rendering on a finer resolution, using frequency-limited/anti-aliased shaders (the RenderMan shader books are quite good on this) etc.). However, the MC/distributed ray-tracing approach simplifies this by putting them all under the same umbrella.

What an MC approach does, though, is give us noise. The results are grainy. People are used to grainy images. They look like photographs, or dithered images. The noise means that, over wider areas of the image, there is convergence to the right value, and locally you've got approximately right values whose inaccuracy is covered by the noise across pixels. If the algorithm were adjusted to, for example, repeatedly use the same points in an area light source for soft shadow calculations, the shadows would be steppy and ugly. We are deliberately not looking for spatial stability in MC behaviour, and it's a remarkably different approach.

On the other hand, moving into an area I know less about, I'm not sure what you want to do with animation. Presumably intra-frame noise is something you're happy with. In a static scene the pixels could mildly flicker, in the way that they simulate with that overlay on static images in YouTube. On the other hand, if you want the image to remain stable, you'd end up in a complex situation where you want the image to retain temporal coherence while being spatially noisy. I guess that could be a bit tricky to implement... but I suspect that no-one cares about that?

Anyway, it surprised me to realise that such different approaches to noise could be taken in two different domains associated with Monte Carlo techniques.

Posted 2014-12-30.

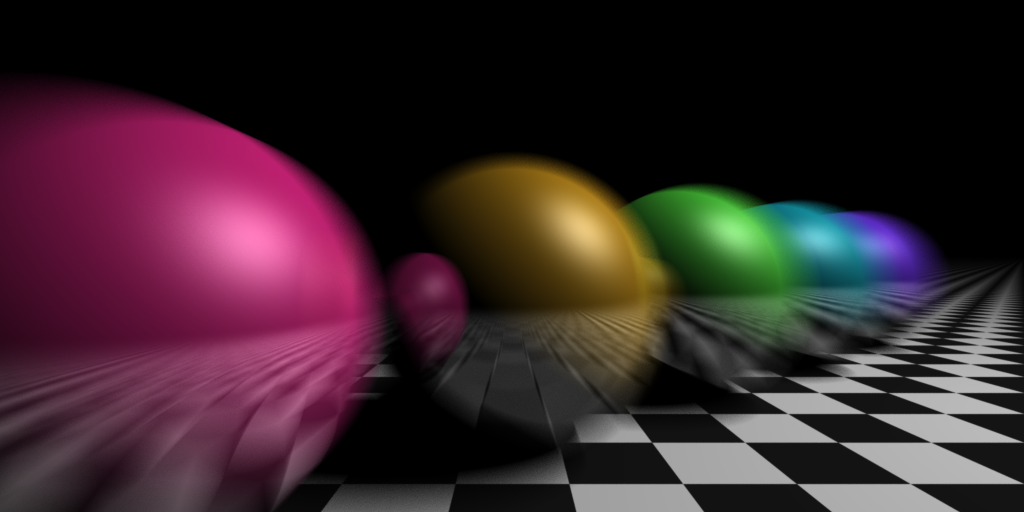

Ray-tracing fun: Motion blur

Next up in my distributed ray-tracing experiments: Motion blur. It's the same scene as the depth-of-field image, with the balls moving, and depth-of-field disabled, so that the only blurring comes from the motion.

Posted 2014-12-23.

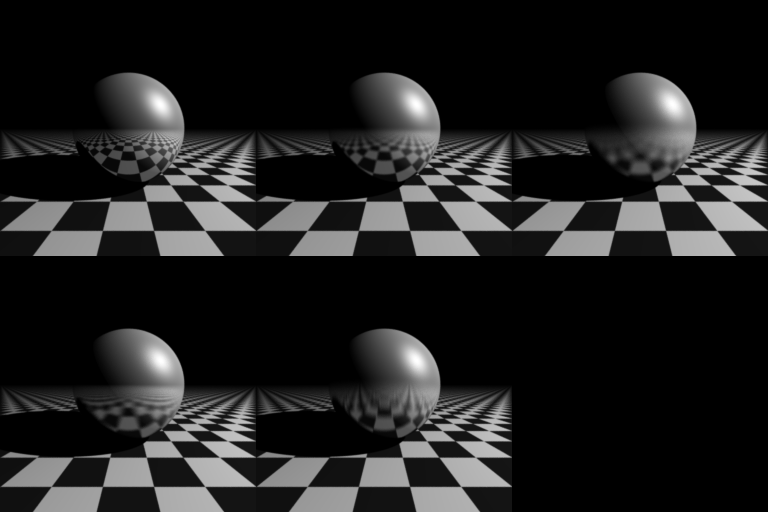

Ray-tracing fun: Fuzzy reflections

Continuing my experiments with distributed ray-tracing, I've added fuzzy reflections. To be honest, I'm not terribly impressed by it, but you can kind of see the effect I'm aiming for. The five sub-images are non-fuzzy reflections, two levels of fuzziness, plus anisotropic fuzziness with the fuzz in horizontal and vertical directions. It's a bit dull, but I prefer to report the negative results as well as the things I think look good.

Posted 2014-12-23.

Complex but not chaotic

This occurred to me ages ago, but only now, given the choice between writing and tidying the house, am I bothering to write it up!

As a child growing up in the '80s, I have a natural tendency to look at anything non-linear, and go "Ooooh! Chaos!". For example, water flow, when the water breaks up into little funny-shaped droplets and goes all over the place, that's got to be chaotic. No way could I write a program on a computer and believe that the simulation will match what happens in reality.

But then I see something like this Hackaday link, effectively stroboscoping such falling water. Being able to stroboscope the water is basically saying that the configuration is reproducable at each sample point. There's clearly going to be small perturbations of the input each time, yet the output is still pretty much the same. You can see water breaking up into droplets at the same place several feet down. Highly sensitive to initial conditions? Yeah, right.

So, complicated behaviour? Well, surface tension acting on water being waved falling through the air, so I'd say, "yes". Chaotic behaviour? Clearly "no". There go my 80s retro preconceptions.

Posted 2014-12-23.

Ray-tracing fun: Soft shadows

As a follow-up to depth of field, I've now implemented soft shadows. I used two lights, one large, dim area light above to soften most of the shadow (getting right under the sphere on all sides), and a smaller light to the side which changes colour across it, to give the image some interest in the colour. I'm not sure the colour on the shadow works quite right, but I think it gives some nice warmth to the highlight in what would otherwise be a monochromatic image.

Posted 2014-12-22.

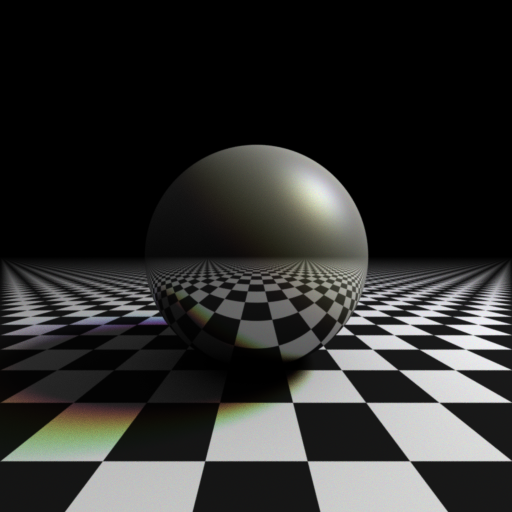

Ray-tracing fun: Depth of field

As reasonably-well documented, I like ray-tracing as a way of writing programs that generate pretty pictures. I wrote a simple ray-tracer aaaages ago, and only recently found the source. This got me tempted to write some extensions that I never got around to before.

So, I've finally implemented depth-of-field blurriness. It was quite fun. I got to do the standard checkerboard pattern, and some shiny spheres with colours from a colour wheel and stuff like that, so it's kept me quite amused. Ah, the winter break.

Posted 2014-12-21.

On the design of eyes

Yes, I know it's a really bad idea to anthropomorphise evolution, and attribute elements of "design" to it, and it's the starting point for all kinds of logical mistakes. I'm going to do it anyway.

The camera-like design of the eye is very neat, but I've never really thought about why it is the way it is. Specifically, what is it with that whole fovea business? Why is there a yellow spot which we use for doing most of the detailed seeing with?

It's not without cost. It means we have to have the eye as an encapsulated spherical unit with a nice, smooth coating that can be swivelled around at high speed, and an optic nerve that can handle that movement. Why would we just have a small area with decent vision which we move around, unlike an actual camera with a uniform input area, which would allow for a whole lot less eye movement?

Previously I'd probably have explained it in mechanical terms - putting all the cones in the centre allows you to put lots of rods around the edge, for seeing movements in the peripherary, in low light etc. It simplifies the optics, as you only need to get good focus in a small area. It allows the presence of a blind spot, by putting the optic nerve connection in part of the low-resolution area.

More recently, I've been thinking about machine vision. Spotting a thing in a scene is an exercise in fancy pattern matching. You want to apply a matching structure over a full scene - convolve it with the image, or otherwise apply the matcher in all the different parts of the image. Doing this with a neural network (like the ones in our heads) is quite a tricky thing. On the other hand, if you limit the recognition to a single area in the input, and then slide the input image under the recogniser, things are simpler. Perhaps our eyes are simply space to time multiplexers for pattern matchers?

I'm pretty sure it's more complex than that. After all, I think I can recognise things even if they're not totally bang in the middle of my vision. However, I think it's a more interesting reason for moving eyes than phyiscal constraints.

Posted 2014-12-13.

Anselm Keifer at the Royal Acdemy

I recently saw the Anselm Keifer exhibition at the RA. I've been interested in his work for some time now, and the exhibition is about to close, but I made it, just. I am so glad, since it's awesome. I don't think pictures of his work can do them justice. They're so textured, so large, so layered in symbolism that miniature reproductions make them look trite. The full thing blew me away. There was a lot of awesome stuff, but my favourite was the (very recent) set of paintings of Morgenthau Plan, which is rather more colourful than his usual work, but just stunning. I don't think it would work in any other way than around the walls of a very large room, so I am incredibly happy to have had the chance to see it.

Posted 2014-12-09.

Delimiters, escaping, stuffing and all that: How long is a piece of string?

I've been reading about shell scripting, and one thing that has always bothered me is how easy it is to get things wrong by having to deal with filenames with funny characters in - how do you handle filenames with spaces, newlines or even just colons in? Disappointingly, the book's recommendation is mostly along the lines of "Don't do that, then".

Indeed, this is a fairly common issue to deal with in computing, at all levels - how do you know when the field/line/lump of data you're reading is over? In a non-streaming, binary-blob world, the answer is relatively simple - use a length prefix to tell you how much to expect.

In the text-oriented, streaming Unix world, this isn't really much of an answer. Unix, in its simplicity, tends to go for special characters for terminating or delimiting. In /etc/passwd, newlines terminate records, and colons delimit fields.

However, this approach is so fundamental it's taken for granted. C-style strings are terminated with NUL - we have a character we can't put in our strings, and we mostly don't worry about it. I do like C, and I'm terribly used to NUL-terminated strings, but Pascal-style length-prefixed strings do start to look pretty sensible. Not only can you put in any characters you like, but it becomes more difficult to buffer overflow if you're forced to track the length.

Back in the day, I guess having to track the length/end position in an extra processor register was a pain and performance issue, but nowadays a Pascal-style string probably helps a modern processor's branch prediction - a string copy can be unrolled, knowing the exact length in advance, without having to do conditional behaviour based on the contents you're reading, checking at every byte. In summary, I guess I like length prefixing, where you can do it.

Having said that, C++'s std::string (and various other languages' equivalent) do track the length, but in most cases people using such types then don't really bother trying to support embedded NULs for most applications. Hey ho.

The basic Unix approach, then, is special characters as delimiters. It can get a bit silly. Say that you're reading /etc/passwd. You might be processing it using NUL-terminated strings, so that's an internal delimiter. You read records separated by newline, and break the fields apart with colons. You pull out the home directory, and the path components are separated by slashes. That's four characters used to delimit elements in a single context. If you want a home directory name containing any of those elements, bad luck.

In this most basic approach, the delimiters are special, and cannot be used for anything else. In the filesystem, file and directory names may not contain slashes or NULs. Period. (They may contain periods, though :). This seems a fair trade-off, since escaping slashes or NULs in file names would introduce some very unpleasant and risky complexity.

In many other places, though, the delimiter characters not being available otherwise is convention, enforced by things breaking if you don't follow the convention, as anyone putting spaces in their file names will know.

There are plenty of times when you do want to shove in whatever data you like, despite the delimiters and terminators. What can you do? A basic approach is an escape character. In shell, you can remove the file "A B" with "remove A\ B", and the backslash escapes the space which would otherwise separate the filenames. In C, a string literal which would otherwise be terminated with a double quote can have that double quote escaped.

Escaping provides more than just the ability to put a terminating character inside the data without terminating the data. It allows you to put in special characters (e.g. "\n" in C strings), or handle lots of special characters (e.g. "*" in regex vs. the literal asterisk in "\*"). However, basic escaping suffers from one particular hassle: The terminator can appear literally in the message, and the context must be checked to see if it's a terminator or not. In other words, if my fields on a line are separated by ":", and it can be escaped as "\:", I still can't just naively split on ":" itself.

The next question, therefore, is "How can I write my message so the delimiter never appears in the message?". If you know what the message is, and can choose your delimiter, you simply choose a delimiter not contained in the message. This is precisely the approach used by "here docs" in shell or Perl. In effect, it's also the half-hearted approach in shell when you put quote marks around a string - you're switching the delimiter from a space to the quote marks. Of course, if your string contains quote marks another approach is necessary, usually escaping.

This approach is also the one taken by Lua's "long strings" and similar comments. These are opened by tokens of the form '[======[', and closed by square brackets with the same number of equals signs, allowing you to embed anything inside as long as you've chosen the appropriate number of equals. If you know your string content, the chosen delimiter approach is nice, since the core data itself can be represented literally - there are no escapes or stuffing. The downside is that the delimiters have to be able to be multi-character, and there is the need to choose the delimiter, which is especially fun if you are streaming the content and don't know it in advance.

Another approach is to have fixed delimiters, but arrange your escaping so that the delimiter never appears in the content. Probably the best-known example of this is HTML/XML. A piece of text contains tags, but the tagging characters "<" and ">" never appear literally in your source. They are replaced with < and >, and the ampersand is escaped as &. This means delimiter scanning can be done without understanding the escaping, although you clearly need to understand the escaping to return the content to its original form.

A similar way of doing this is described in the Lua book. All characters you wish to eliminate from your string are converted to numeric escapes - i.e. \xxx for some numeric xxx (backslash being escaped like this too, of course). This means that you can then safely use the escaped characters as delimiters, without fear of seeing them anywhere else. Once the values have been split up, converting back to the original string is simple, too. A rather neat approach, I thought.

The "separator never appears in data" approach also appears fairly commonly in a multi-byte-separator context, but it's not immediately obvious what's going on, since it looks like you can embed the separator "character" in the message. Byte stuffing for mail messages has "\n.\n" as the terminator, and avoids this appearing literally by adding an extra period to the start of any line that starts with a period. CSV files escape quotes by repeating them, thus distinguishing them from the terminating quote marks, which are followed by a field-separating comma, newline or end of file. In both these cases, you might think the terminator is a single character (period or double quote), but really, it's a sequence.

The same idea applies in lower-level binary systems, too. Synchronous HDLC uses a particular bit pattern as a delimiter, and uses bit stuffing to insert bits to prevent that pattern appearing in the message. HDLC with asynchronous framing uses byte stuffing to prevent the delimiter appearing in the data.

In summary, the approaches are:

-

Prefix a length

Use a special terminator that may not appear in the message

Use a special terminator that may appear in the message, escaped

Use a user-defined terminator chosen to not appear in the message

Use a special terminator that may be encoded so that it never appears literally in the message

While I'm on the topic, I thought I might write a little on some related stuff.

Shell scripting mostly uses 'terminator that may not appear in the message', and escaping if you're lucky. This makes it extremely difficult to make scripts that work reliably over lists of values which have to be encoded in a single string. *sigh*

Regular expressions need both special characters representing regex operations and to be able to express the underlying characters. So, escaping's a key part of it. It's a real shame that the standard regex expressions are so ad hoc. Some things get escaped, character ranges have special cases for special characters, some things are special in some contexts and not in others, and some characters become special when escaped. Oh, and the extended RE syntax is not a simple superset of the basic RE expressions. *sigh*

UTF-8, while not being strictly about delimiters or escaping, is a great example of a good design, thanks to its self-synchronising properties - a great deal of thought has been put into what bytes may appear where. This seems vaguely ironic to me, as it was designed by Thompson and Pike, who were heavily involved in all the unix systems that were somewhat weak at escaping delimiters! UTF-8 is neat, and all is forgiven.

Quines - programs that print their own source - are all about escaping. The basic idea is to have a program that contains a literal string (with some escaping used by the language), and it will then print out that literal string, with the escaped version of that string substituted into it.

For example, in Haskell, we can do:

let x = "let x = ; (y, z) = splitAt 8 x in putStrLn $ y ++ show x ++ z" ; (y, z) = splitAt 8 x in putStrLn $ y ++ show x ++ z

Posted 2014-11-28.

Doing Google Code Jam questions (and knowing when to give up)

My day job involves plenty of design decisions, but not much in the way of real algorithmic exercises, and there's always the fear of going rusty. So, I thought I'd have a go at some Google Code Jam questions. I missed this year's Jam, just starting out on my practice as the competition's final round was happening. So, I wasn't doing any of this in competition setting, but I did try to time myself where possible.

I'd never really looked into the Code Jam before. An ex-colleague of mine, Luke Pebody, made it to the world finals, so it did seem interesting. I mean, yes, the guy's really quite bright, but how hard could it be? Presumably, it's just writing bug-free code nice and quickly, and even if I'm not super fast I should be able to get it right.

Oh no, this is hard stuff. Finding the right algorithmic approach, let alone coding it bug-free in any reasonable time, is difficult. I did the example questions, and then ploughed on in to the 2014 questions. Even in the early rounds I'm running an integer multiple times slower than the best must have been working. Occasional individual questions took me more time than the whole of that round. I thought I was good at algorithmic stuff. This whole thing has been exceptionally humbling.

The final straw was 2014 Round 3, Question C: Crime House. This question was correctly answered in time by 16 of the very best coders in the world (from a round of several hundred entrants). It's hard. The small case is doable. The hard case required me to try approach after approach as I tried to wrap my head around the optimal solution. It's taken me an embarassingly long time, and it's been seriously painful. I've finally completed it. It is utterly depressing to me that I'm still not entirely sure why my solution works.

At the end of that, I realise that there are 16 people who managed to do this in the 2.5 hour timeframe, with other questions. Astonishing.

Anyway, if I daren't spend this kind of time on any more questions, so for the moment I'm putting this on hold (the very next question was answered correctly by precisely zero of the world's top coders. I don't fancy my chances). I'll come back to it when I'm ready. In the meantime, my horribly hacky code is up on github.

Posted 2014-10-01.

Fixing Only Connect

Only Connect, BBC 4 (er, 2's) rather cerebral quiz show is a favourite in our household. As of Series 10, it's clearly shown its worth, as it's moved to BBC 2. At the same time, the "play at home" feature has gone away. This allowed you to play the "wall" round at home, using a Flash website. This was a lot of fun, adding a lot more interactivity than would otherwise be possible. The other rounds, you can shout out your answers with everyone else, but the mechanics are such that without the play-along website you wouldn't really be able to get the same experience on the wall.

So, we lost the website. That made us sad. Looking up Only Connect on Facebook revealed a whole host of other people who were similarly disappointed. What is one to do in such situations?

Clearly the correct solution is to reverse engineer the website and add the walls for the new series. This is what I've done, and the result is up here. After ripping the Flash apart, it turns out it's configured from some very simple XML files. It really does make me wonder what the difficulty was in updating the website for the new series. Politics of some kind, no doubt.

In any case, the site's now up. I'm trying to keep it up to date with the new episodes. The sad thing is that I have to watch the program in order to enter the walls into the site, which means I can't play them fresh, but at least Caroline gets a go at them, and hopefully they're of use to anyone else who wants a go out there on the wider internet.

Posted 2014-09-16.

Finally, a complete hardware project: A keyboard

I have built myself a keyboard, and am sadly proud of it.

I didn't do any of the tricky electronics or software - I used a pre-existing PCB for the "Phantom" design, and existing firmware on an existing microcontroller board. I did solder it up myself. I did minorly tweak the design of the metal keyboard plate (editing .dxf files! Woo!) before sending it to a laser-cutting company.

I did do the physical assembly. It was the first time I'd spray-painted metal - it does produce a lovely finish. It was the first time I'd attempted woodwork in about twenty years. I am still absolutely useless at it. The result, at best, might be described as having rustic charm. It works, no-one's going to mistake it for a professionally constructed device, and I'm still proud of it.

If you want to know more, I put up notes on github.

Posted 2014-08-09.

Generalisation from a single example

My previous post could be vaguely thought of as being about generalised external interfaces, versus specialised internal interfaces, and this reminded me about the process of generalisation.

Abstraction is the big thing in computer science, and generalisation a major tool in the kit of the computer scientist. Often, when designing things, we go "We need to do X. Right now we need to do Y, a form of X, so let's build a system to allow us to do X, and use it to implement Y.". How do we approach that?

The usual approach is to squint at Y until it's blurry enough, and call that blurry thing the general framework X. At some point in the future, we want to do Y', another form of X, and we discover that is doesn't fit in our system. What did we do wrong?

The basic rule should be: Don't generalise from a single example. Building a general system given a single example is about as sane as trying to extrapolate a curve from a single data point. Instead, build the system that deals with the single example nicely, and when further examples come along, that's the point at which to spot commonality and generalise.

This is not to say that the original design shouldn't be made without an eye to the future.

Another dodgy analogy: In building architecture, if you want to create a building that you want to allow extensions on later, you wouldn't place locked doors all the way around the perimeter which you'd unlock when an extension is built on the other side. You'd just make sure the walls could be safely knocked through to create passageways through into the extension, and if you want you can install doors later. Your design for generalisation shouldn't be general from the start, but should be easy to refactor into the general case. The second rule might be: Don't try to get it right initially, but design for easy rewrite or refactor.

Posted 2014-05-30.

Library interface design isn't necessarily good interface design

I used to work in C++. I knew people who tried to write all their code like it was in Boost. This was a horrible idea. Boost code is horrible and obfuscated and painful. This is often because it's got to compile on every compiler and platform known to mankind, and be used in a wide variety of circumstances (not necessarily forseen by the original author), and it's trying to optimise performance and provide all the features that everyone needs. And sometimes, it's because someone's being a smart-ass and trying to show off. In any case, writing Boost-style code for use in a one-off project to be maintained by non-experts was pretty clearly a silly thing.

Fast-forward a few years, and I'm writing Java. The language takes great strides to stop Boost-like code. Surely at this point, designing your internal interfaces to look like those provided by well-regarded third-party libraries makes sense?

In general: Still, no. Even though the code won't be tricky, the interfaces for libraries will focus on a) Extra-general use cases, b) Making life easy for the user, c) Backwards compatibility.

If you're working inside your own code base, you can change the interface. An interface tied into backwards compatibility is much more difficult to maintain. Moreover, it needs to be designed up-front for such maintainability, which will complicate the design concerns. Extra generality is likely to lead to more complex code, and making life easier for the interface user is also likely to. If you only use it once inside your code base, it's likely that you'll be putting more effort into making your own life easier than the work you save.

With internal interfaces, you can keep it simple, keep it specific to your problem, and put minimal work into compatibility. You control it, and can change it safely. Designing an interface for internal usage, and defining a long-term external API are extremely different projects, and it seems people sometimes forget this.

Posted 2014-05-30.