Simon Frankau's blog

Ugh. I've always hated the word 'blog'. In any case, this is a chronologically ordered selection of my ramblings.

More GitHub, some code

I've put another project on GitHub! Oh, the excitement. It's a toy project which generates regexps that accept numbers that are n mod m, base b, for given n, m and b, but I'm still kinda embarrassingly proud of it, if only because I'm getting some code, however noddy, out there, which I've not really done before.
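For anyone wondering how such a regexp can exist at all: the remainder mod m can be tracked digit by digit with an m-state automaton, and any finite automaton can be turned into a regexp by eliminating its states one at a time. The Python below is a minimal sketch of that general idea, not the code from the GitHub project. The names `mod_regex` and `to_base` are made up for illustration, and the output is nowhere near minimal.

```python
import re

DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"


def to_base(x, b):
    """Render non-negative integer x in base b (2 <= b <= 36)."""
    out = DIGITS[x % b]
    while x >= b:
        x //= b
        out = DIGITS[x % b] + out
    return out


def mod_regex(n, m, b):
    """Regexp matching base-b numerals whose value is n mod m (0 <= n < m)."""
    start, final = m, m + 1          # extra states bracketing the automaton
    edge = {}                        # (src, dst) -> regexp for that hop

    def add(src, dst, rx):
        old = edge.get((src, dst))
        edge[(src, dst)] = rx if old is None else old + "|" + rx

    # In state r, reading a digit of value v moves to (r*b + v) mod m.
    for r in range(m):
        for v in range(b):
            add(r, (r * b + v) % m, DIGITS[v])
    add(start, 0, "")                # the empty prefix has value 0
    add(n, final, "")                # accept when the remainder is n

    # Eliminate each state, rerouting every path that went through it.
    for k in range(m):
        loop = edge.pop((k, k), None)
        star = "" if loop is None else "(?:" + loop + ")*"
        ins = [(s, rx) for (s, t), rx in edge.items() if t == k]
        outs = [(t, rx) for (s, t), rx in edge.items() if s == k]
        for s, _ in ins:
            del edge[(s, k)]
        for t, _ in outs:
            del edge[(k, t)]
        for s, rin in ins:
            for t, rout in outs:
                add(s, t, "(?:" + rin + ")" + star + "(?:" + rout + ")")
    return "(?:" + edge[(start, final)] + ")"
```

Something like `re.fullmatch(mod_regex(1, 3, 2), to_base(7, 2))` then checks whether 7, written in binary, is 1 mod 3. The sketch allows leading zeros, and for n = 0 it also accepts the empty string.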

More generally, we've started using git at work. With lots of people and co-ordination to deal with and some extra tools to streamline process, it's awesome.

Posted 2014-05-03.

Computer Upgrade Fail

For the last few years I've had a desktop Windows PC sitting out the way that never really worked properly. That's ok, since I mostly used it for playing games and a Mac laptop's been pretty good for everything else. I have, from time to time, wanted something that booted up first time and didn't randomly crash.

A while ago, I tried swapping the motherboard and graphics card. That didn't work. More recently, I had a more serious attempt. Swapping everything else around, I think the only things staying constant were CPU and power supply. It still crashed. It was all getting a bit creaky with age, so I decided: Time to upgrade.

This time, it would all be good and working, and mystery brokenness and hassle would be behind me.

My choice is probably a bit silly, but all I want is a fairly low-end PC for playing oldish games, so I went for one of these funny AMD CPUs with a built-in GPU. I didn't even know they did such things a few weeks ago. I bought an AMD A10 7850K, and stuck it on a Gigabyte GA-F2A88X-D3H motherboard.

The only problem turned out to be that the motherboard only supports that CPU if you upgrade the BIOS. However, without a CPU that works on it, you can't upgrade the BIOS. So, I had to buy the cheapest, oldest compatible CPU in order to perform the BIOS upgrade.

All done? No. The BIOS can only be downloaded as a self-extracting archive. Which is nice, unless you don't have a Windows PC to extract the archive. Fortunately, Stuffit will do the job on a Mac.

All done? No. The BIOS can upgrade itself from a flash drive containing the BIOS except for the upgrade I needed. That requires you to run the upgrade from an executable. I ended up putting the BIOS, the upgrader and freedos on a USB stick. Getting it all bootable nicely and the rest of it, given I was using a Mac, was more pain than was really warranted.

All done? No. Once the machine was flashed with a BIOS that allowed me to use the CPU I bought, it was time to install the OS. I had a DVD image of the OS I wanted to install, but I have no DVD burner. USB stick will be fine, won't it?

At this stage I faffed a lot, with various different configurations, and learnt a fair amount about EFI. Finally, I read this post. It all finally became clear: Modern BIOSes don't boot vanilla ISO images from USB drives.

That's right, despite having all the code to boot CDs directly, and knowing about all different emulation schemes when booting from a USB drive, and having the resources to store a fancy graphics front-end, BIOSes don't actually bother to join the dots and do El Torito booting of USB drives. Any time you actually have a DVD image and boot it off USB, it's clever tricks in the image. Grrrr.

So, after some manual hack attempts, I used unetbootin (available for MacOS - phew) to convert the ISO into a USB-bootable form. Then I hacked it some more, as it wasn't quite working right. And... it finally chain booted through to the OS installer, which promptly fell over, being not quite able to find the disk as it expected.

At this point, I gave up. PhD in computer science or no, at some point you need to know when to cut your losses. This wasn't fun any more, and I just want a working PC. I've bought myself a DVD burner - they're dirt-cheap now - and am waiting for it to arrive. I'm sure there shall be further tales of woe, as it all fails to work for no adequately explained reason.

Posted 2014-04-08.

Yet another (minimally painful) disk crash

About a week and a half ago, I had a disk crash. Another one. The second one with a Macbook Air hard disk, as it happens. Disks break semi-regularly, and I finally got the memo, so I had reasonably recent back-ups. Hurrah. The things I learnt were:

- Disk crashes happen. Like I need reminding.

- Regular back-ups are good. More regular would be better, but still, so much better than previous crashes.

- If you're operating old hardware, spares are great. I had another MBA, and it could donate its disk. At the same time, I discovered the spare's battery pack had developed a disturbing bulge. So I got rid of that sharpish.

- The easiest way to install Snow Leopard on an MBA is copying the DVD to a USB drive. Apple won't tell you this, as they presumably think ripping their install DVDs is, er, dodgy, but on a diskless laptop a thumb drive is soooo much more pleasant than that remote disk faff.

- Disk crashes will nuke about a week of my time. Always. The whole rigmarole of getting the hardware sorted, reinstalling stuff (I just don't trust whole-disk back-ups), working out precisely what went since the last back-up, etc. Very tedious.

However, everything's pretty much back now, and with minimal pain. Hurrah.

Posted 2014-03-06.

The Last Big Thing: Process

Every so often in programming there's a Paradigm Shift when The Next Big Thing comes along. Object orientation was one. It looks like functional programming might get a minor go at it. I'm guessing structured programming might have been one, but it was something before my time. The general pattern involves academic use, widespread adoption, and eventually "how did we do without it?".

The pattern also involves some overzealous usage, and eventual identification of the core good ideas. So, in the case of object orientation, the good stuff is effectively interfaces, encapsulation, subtyping, and having instances to remove globals. Something like that. And, at its peak we had deep hierarchies of implementation inheritance and spaghetti inheritance etc. making life a nightmare.

I haven't really noticed a major, almost universally-accepted programming language change since then, though. Was OO really the last Paradigm Shift (TM)? I don't think so. I just realised that the Last Big Thing was actually process. Except process is dull, so nobody quite talked about it in the same way.

Things that are now pretty much universally agreed as good things that have quietly snuck in:

- Comprehensive automated testing, with plenty of unit tests.

- Continuous integration

- Advanced version control - ideally DVCS

- Bug-trackers

And that's just the basics. Sure, this stuff has been done forever for larger projects, but it's percolating down into SOP for any non-trivial operation now. There's even the full-on overzealous and uncritical embracing of extreme versions, such as Extreme Programming and full-on Agile development. There's also some stuff that doesn't look fully settled to me, such as use of Kanban and stand-up meetings or scrums, which... well, I dunno if they're universally useful or just good special-purpose tools. Anyway.

And of course things like XP and Agile get all the airtime, but the real revolution is in the stuff that's now being taken for granted on the average project. The Last Big Thing, quietly, was process.

Posted 2014-03-05.

On the GitHub

I am slowly making my way into the 21st century, and have got myself a GitHub account!

The great thing about being a late adopter is that when you pick something up, it tends to be easy to understand and work well and all that kind of thing. On the other hand, you look a bit foolish when you excitedly tell everyone about it. Oh well. So, it does seem to be everything SourceForge should have been. It's not clear to me exactly why it's taken over that space and thrived, but I've not been paying attention there for a decade, so perhaps it's obvious to anyone who has watched.

Still, yay. Now I just need to do stuff to fill it with.

Posted 2014-02-02.

Reversing 'Head Over Heels' - Work Starts

So, I've gone quiet for a couple of months. That's because I've moved off one Z80-based project onto another. Specifically, I've started reverse-engineering the classic ZX Spectrum isometric game "Head Over Heels".

I've a few reasons for doing this. I've intended to do more reading of others' code for a while. Reverse-engineering is perhaps an extreme way of doing this, rather than just reading source code, but it's one way to go about it. I've been intrigued by how a quite complicated game like this gets implemented on a simple machine, and the whole thing has a huge nostalgia value. So, it seemed like a great project.

It looks like I'm not the first to do something like this, given there's a free-but-not-open-source reimplementation for modern computers. On the other hand, it doesn't seem a perfect copy, and, well, I haven't seen them publish reverse-engineered assembly, so it's still something of a mystery.

So far, it's been an interesting reverse-engineering exercise. There's somewhere around 8 thousand lines of assembly in there, and I think I've now identified about half of that (some very loosely). The entry points have been the literal entry point to the code, interrupt vector routines, and those routines that perform I/O - keyboard/joystick reading, sound output, interrupt triggering, writing video to the screen etc.

From there, I can start to identify and reverse the routines for writing text and sprites to the screen, constructing menus, how user controls are passed in, etc. By taking the memory dump and appropriately twiddling it into an image file, the sprites are easily found, and references to them can then be located.
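The 'twiddling' can be as simple as treating the dump as 1-bit-per-pixel data at a guessed width and wrapping it in an image header. A minimal Python sketch, not the actual tooling used here: the name `dump_to_pbm` and the default width are illustrative, and it assumes plain left-to-right, top-to-bottom bitmap rows, which may well not be how the game lays out its sprites.

```python
def dump_to_pbm(data, width_bytes=2):
    """Pack raw bytes into a binary PBM (P4) image, width_bytes bytes per row."""
    # Pad with zero bytes so the final row is complete.
    padding = (-len(data)) % width_bytes
    body = bytes(data) + bytes(padding)
    height = len(body) // width_bytes
    header = "P4\n{} {}\n".format(width_bytes * 8, height).encode("ascii")
    # PBM treats 1 bits as black with the most significant bit leftmost,
    # so the raw bytes can serve as the pixel rows directly.
    return header + body
```

Writing the result out to a `.pbm` file and trying a handful of widths is usually enough for sprite-shaped blobs to jump out of the noise.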

Having built up a set of low-level routines, how the higher-level routines use them can be found. For example, the screen drawing routines make it possible to find the locations where lives and inventory are stored. The keyboard routines allow me to find the code that handles the "pick up object" case, which then provides me with a reference to the data structures representing the objects in the room. Each abstraction provides a crack that can be forced open to provide insights into the next chunk of code, which previously looked completely impenetrable.

So, that's where I am right now. While I'm halfway-through, or so, that's the easy half done, of simple, low-level routines. The rest may be something more challenging.

It's also reminded me that standard software-engineering techniques really help. I'd had a previous pass at doing this, but got nowhere near as far. This time, two things have been really helpful. The first thing is that I got the disassembly assembling back into the original image. By doing this, I can test that the changes I'm making, improving the labelling, pulling out image files, etc., don't actually affect the meaning of the code, and what I'm reversing is true to the original image.
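The round-trip check itself can be tiny. As a sketch (the helper name `first_mismatch` is hypothetical, and how the two images get produced is left out), comparing the reassembled bytes against the original and reporting where they first diverge might look like this in Python:

```python
def first_mismatch(original, rebuilt):
    """Return the offset of the first differing byte, or None if identical."""
    for offset, (a, b) in enumerate(zip(original, rebuilt)):
        if a != b:
            return offset
    if len(original) != len(rebuilt):
        # One image is a prefix of the other; they diverge where it ends.
        return min(len(original), len(rebuilt))
    return None
```

Run after every edit to the disassembly, a `None` result says the relabelling has not changed a single byte of the image.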

The other thing is to use source control. The ability to checkpoint the work I'm doing means I can undo silly mistakes, and see exactly what I've done since the last checkpoint. It's about the dumbest use of source control possible, but it's really brought home to me how useful it can be in contexts outside developing software or writing a paper.

All round, it's been a fantastic learning experience so far, in terms of picking up reverse-engineering techniques, but also in terms of learning about Z80 coding and writing games for the Spectrum. Wonderfully educational!

Posted 2014-01-24.

Cheap exploding power supplies

One morning I tried to log into my 'nix box, only to discover it was off the network. Investigating further, it was off, full stop, along with everything else hanging off the same power strip. Further investigation revealed that the power supply for my el-cheapo network switch was broken. It rattled.

I was vaguely aware of the dangers of cheap dodgy chargers, yet I had somehow been blasé about the cheapo PSU bundled with the cheapo router, left plugged in 24 hours a day. I guess I'm glad the house hasn't burnt down!

The introduction of switch-mode power supplies into those wall warts is fascinating. SMPSUs are really cool, and seeing them become so cheap, universal, and in many cases really rubbish is kind of amazing. Opening it up, the rattling part was the top that had been blown off the controlling IC. What triggered the failure, I'll never know, but everything around the chip was blackened.

As indeed was half the other side of the PCB. It must have been quite the little explosion. Looking at the rest of the thing, it's quite fascinating. The transformer's tiny (and I think only really necessary over an inductor for isolation), and the mains pins are just wired up to the PCB with ickle wires. No fuses in sight. I guess it was a good thing it was put on a fused power strip.

I know that e.g. an Apple power supply will be an absolute work of miniaturised art, but seeing how a small switched-mode PSU is done badly is, in its own way, just as interesting!

Posted 2013-12-05.

Another classic Cory Doctorow quote

There's another classic Doctorow quote from here:

"For the second half of the 1990s, my standard advice to people buying computers was to max out the RAM as the cheapest, best way to improve their computers' efficiency. The price/performance curve hit its stride around 1995, and after decades when a couple gigs of RAM would cost more than the server you were buying it for, you could max out all the RAM slots in any computer for a couple hundred bucks. Operating systems, though, were still being designed for RAM-starved computers, and when you dropped a gig or two of RAM in a machine, it screamed."

Now, I know what he actually means: Around a decade ago, buying lots of RAM was a simple way to improve performance. As I grew up amongst the Amish, I was regarded as technically competent, and spouting this advice made me look god-like for five or six years. Please ignore numbers and dates in anything I write, as I don't do accuracy. Taken literally, however, it's rather more amusing.

According to this site, which matches my memories, and a bit of extrapolation, one gig would cost $32,000 in 1995, $3,700 in 1997 and $1,600 in 2000. And it's really more than that, since it's not inflation adjusted. "A couple hundred bucks" is a particularly interesting way of looking at it. Plus, of course, there's the question of what you could physically cram in. There certainly was a huge price drop in the first half of 1996 ('around 1995'), just after I bought myself a big pile of RAM, but the days of happily shovelling gigs of RAM in were certainly a lot later.

Unsurprisingly, his entry is a link-fest to all the gear to buy from Amazon, with all the kick-back referral commission stuff enabled for him, which kinda tells you what it's really about.

Still, as the quote goes, "Those who cannot remember the past are condemned to look like idiots.".

Posted 2013-12-04.

Electronics for Newbies: Basic serial communication

I have basic serial communication working! It turned out to be reasonably straightforward.

On the hardware side, it was a pretty simple wire-up of the SIO, with the only even vaguely non-trivial part being the cross-over between the SIO pins and the TTL-level RS232 USB module thing (yes, I'm not even bothering with a MAX232 level converter and real RS232 connector - nowadays USB is going to be more convenient). I swapped TX and RX, and CTS and DTR. Apparently the super-cheapy FTDI USB serial breakout boards prefer to break out DTR over RTS because of some Arduino reason I wasn't paying attention to. One nice thing about using USB is that you get 5V power. And given the low power consumption of CMOS, I can happily run my little computer off the USB-supplied power.

On the software side, it was a simple case of initialising the SIO's registers, and writing routines to read and write characters, polling to make sure the appropriate buffers are non-empty and empty respectively. Initialisation was made more fun by the data sheet being vague about the 'write registers' for control. Register 0 is used to set which register number will be read/written for the very next read/write, but this is explained somewhat implicitly. Code example? Don't be silly. Anyway, once that was in place I could write a very simple piece of code to do comms. No flow control, no interrupts.
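Since the data sheet won't stoop to a code example, here is the register-pointer idea as a toy Python model. It is a sketch under assumptions rather than driver code: `SioControlPort` and `init_sequence` are invented names, and it models only the pointer behaviour described above, not what any register actually does.

```python
class SioControlPort:
    """Toy model of one SIO channel's control port and its register pointer."""

    def __init__(self):
        self.regs = [0] * 8   # write registers 0 to 7
        self.pointer = 0      # which register the next write lands in

    def write(self, value):
        if self.pointer == 0:
            # A write to register 0: its low three bits select the
            # register that the very next write will go to.
            self.regs[0] = value
            self.pointer = value & 0x07
        else:
            # The follow-up write lands in the selected register,
            # after which the pointer falls back to register 0.
            self.regs[self.pointer] = value
            self.pointer = 0


def init_sequence(settings):
    """Flatten {register: value} into the byte stream for the control port."""
    stream = []
    for reg, value in settings.items():
        stream += [reg, value]   # select the register, then write its value
    return stream
```

Feeding `init_sequence({4: 0x44, 3: 0xC1, 5: 0x68})` through `write` one byte at a time leaves each value in its register with the pointer back at 0. Those three values are placeholders for illustration, not a checked configuration.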

So, the next features to test will be getting the flow control working, and making it work on an interrupt-driven basis. However... the next piece of code I plan to write is a hex loader. I've reached the point where, in theory, I should be able to load code onto it through the serial port, and then execute it. This should help shorten the development cycle, so it's what I'll do next. Then I can use that to develop the rest of the I/O stuff. Hopefully at that point I'll be able to port BASIC to it. Just like a real little computer.
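Nothing above says which format the hex loader will accept. Assuming Intel HEX (a common choice, but purely an assumption here), the parsing side looks roughly like this Python sketch. The real loader would of course be Z80 code, and `parse_record` and `load_hex` are illustrative names.

```python
def parse_record(line):
    """Decode one Intel HEX line into (record_type, address, data)."""
    line = line.strip()
    if not line.startswith(":"):
        raise ValueError("record must start with ':'")
    raw = bytes.fromhex(line[1:])
    # Layout: count, address high, address low, type, data..., checksum.
    if len(raw) < 5 or raw[0] != len(raw) - 5:
        raise ValueError("bad record length")
    # All bytes, including the trailing checksum, must sum to 0 mod 256.
    if sum(raw) & 0xFF:
        raise ValueError("bad checksum")
    address = (raw[1] << 8) | raw[2]
    return raw[3], address, raw[4:-1]


def load_hex(lines, memory):
    """Copy data records into memory (a bytearray); stop at the EOF record."""
    for line in lines:
        rtype, address, data = parse_record(line)
        if rtype == 0x01:        # end-of-file record
            break
        if rtype == 0x00:        # data record
            memory[address:address + len(data)] = data
    return memory
```

The checksum test is the part worth keeping in any real loader: a serial link with no flow control is exactly where a dropped character would otherwise go unnoticed.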

Posted 2013-11-14.

Electronics for Newbies: Tying up loose ends

The 'tying up loose ends' is not to say I'm nearly finished. More that in order to make progress I had to fix some bugs where I'd not paid attention to detail.

First, I wired up the data buses to my SIO and the buffers/latches that I'll use to drive an SD Card interface, hoping to incrementally wire them in to everything else. The machine then stopped working. I could tell something was nastily amiss as the current consumption had gone up non-trivially, and I was a bit worried I'd burnt something out.

Once I wired the chips up to power and hardwired their 'chip select' lines to disable them, everything started working. Phew. No need to unsolder and replace major components (all the more worrying given the rat's-nest of my construction).

My assumption that the I/O lines of unpowered chips were effectively disconnected was, well, pretty darn wrong. My mental model had the I/O lines, well, 'go hi-Z' in that case. I think I assumed that an unpowered output stage would not do anything, but even in a simple CMOS model the output transistors could act as pass transistors to the power rails, which would then allow cross-talk between the bits. For I/O circuitry of realistic complexity, with all that anti-latch-up and anti-static protection, I'm sure all kinds of interesting things could happen.

Once the system was working again, I tested the interrupt part of the CTC (timer) circuitry, which I previously hadn't exercised. The interrupt only triggered once. What I'd failed to do is re-enable the interrupt during the interrupt handler. While the system supports prioritised interrupts, you do need to re-enable the maskable interrupt explicitly, it seems.

My test code displayed the contents of a memory location, which was updated by a timer interrupt. Except, it flickered weirdly all over the place. Turns out I'd failed to make my 'display contents of memory location on LEDs' code update the display fast enough, so I was getting a certain amount of aliasing of the actual updates. I switched to a loop continually reading the variable, and the output now looks lovely.

So, everything's working nicely as I pay attention to the details. Making these mistakes wasn't great of me, but it fortunately wasn't too hard to debug. Next up, getting that serial I/O working.

Posted 2013-11-14.

Electronics for Newbies: SMD soldering

Well, it's been some time since the last update, so I thought I'd put a rambling in. Having exercised all the infrastructure I'd built, I've found only one (not terribly unexpected) flaw: I haven't debounced the reset and NMI buttons. Reset isn't a problem - a few resets back to back are fine. NMI is slightly annoying, as the next NMI may start before the previous completes, perhaps even before the register state is properly saved. Oh, and ideally, of course, one button press would lead to one NMI! Still, NMIs aren't core to my plans so I'll live with it until I think of a nice way to stick a little debounce in.

Otherwise, looking back, I reckon the ability to read the current state of the memory banking configuration is overkill. Any software tweaking the mapping can track the state itself. But hey, it's only one chip to implement. On the other hand, if I admit it's overkill, then the read-only RAM mappings are overkill too, really, and indeed I should probably say that the whole banking thing is overkill. All I really need is circuitry to disable ROM and have a full 64K of RAM, and everything else is probably excessive. So, let's just say it was worth it for the fun.

Then I took a break from this Z80 project to learn some surface mount. It's pretty clear now that veroboard is not nice for complex circuits, and I'll want to do proper PCBs in the future. However, why stop at through-hole PCBs? Surface mount is very popular with hobbyists nowadays, and all the funkier stuff comes in SMD packaging. I've got a decent soldering iron, so I really should be able to do it.

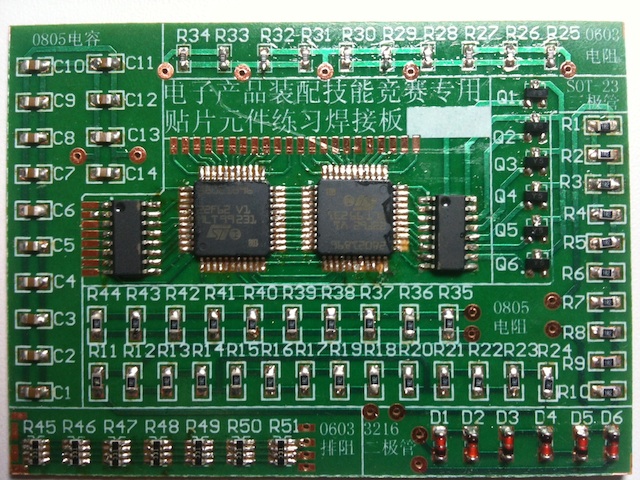

I bought a cheapo SMD practice kit off the internet (hurrah, China!), read a couple of tutorials, and this is the result:

It may look not great, but actually pretty much all the pins, when inspected carefully, are soldered up without solder globbing together adjacent pins. I think the uneven lighting and highlights make it look worse than it is. In the end, everything was good (enough), except where I lifted some tracks on the first QFP that I tried:

The main thing I learnt is that flux is magic. I got myself a flux pen, and it was a complete life-saver. The QFPs really want to be done with drag soldering (i.e. don't try to solder each pin individually, but just apply 'enough' solder and pull it along with the iron until all the pins are done). Unsurprisingly, unless you're an expert, you'll get 'jumpers' - adjacent pins soldered together. The great thing is that if you apply a shedload of flux and then wave your soldering iron over it, it'll generally just snap into the right configuration and remove your shorts. Whatever it's doing to surface tension or whatever, it's magic!

The other thing I learnt is that watchmaking tools are really helpful for this kind of stuff. Decent tweezers, lighting and loupes make the job much more pleasant than it would otherwise be.

I'm clearly still no expert. I've only got down as far as 0.8mm pitch on the QFPs. However, it was a very heartening experience, and I'm extremely tempted to use SMD devices in future projects!

Posted 2013-10-30.

Return of the blog

My blog and book reviews disappeared for a few days as I moved to a set of static pages that are generated up-front. cgis have never really suited me - I don't like the possibility of exploits, and I always managed to annoy sysadmins by getting the scripts spammed. So, a change of hosting gave me the excuse (or requirement, I guess :) to switch to the static approach.

So, one Lua script later and it's all back. More or less. I think there are some bits and bobs that I'll want to improve, but basically I think this'll do.

Posted 2013-10-24.

Electronics for Newbies: Now with documentation...

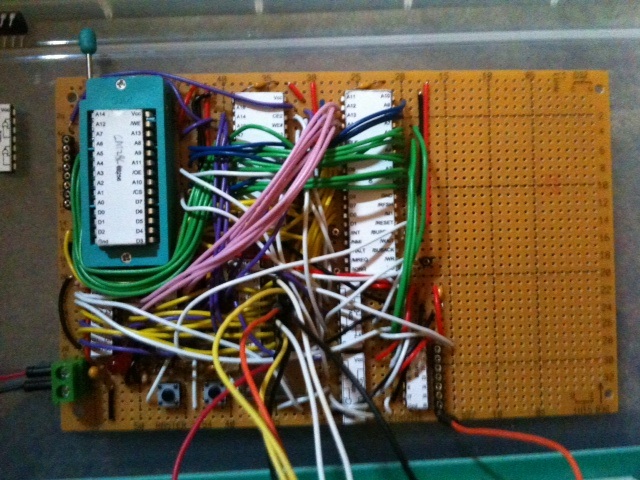

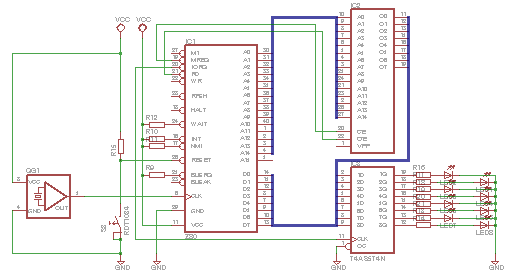

I've not made any real progress since my last Z80ing post, but I think I can still flesh out the documentation side a little. I've sketched up a loose circuit diagram for what I've built - skipping all the dull stuff like decoupling caps, and the RC reset circuit, etc.

I also have a couple of photos, of where I've got to with the PCB (see the fugly mess of wires, in rainbow organisation, see the empty space reserved for SIO etc.), and how I hooked it up to a latch and LEDs for testing...

Yeah, they're a bit uninspiring and blurry, but what do you want out of a quick photo at night on an old phone?

I have written a little bit of system exerciser code, and one thing I'd rediscovered was that the chips used to do the banking have inverting memories. So, it powers up to access the memory at 0xFF000, but if you then want to map it in software, you actually want to map it from 0x00000. Or perhaps a more sane way to view it is the boot ROM is mapped at 0x00000, but the data in the EEPROM has to be juggled by shuffling all the data to flip the top eight bits of addressing.

So, taking that into account, and ignoring the fact that only partial memory addressing is done (hence much mirroring of the same address space), the 'physical' software-oriented memory map looks like the following:

- 0x00000 - 0x07FFF 8 pages of EEPROM

- 0x80000 - 0x9FFFF 32 pages of RAM, mapped read-only

- 0xC0000 - 0xDFFFF Same 32 pages of RAM, mapped read-write

Each page is a 4kB mappable unit that can be put anywhere in the Z80's 'virtual' address space.
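To make the map concrete, here is a small Python sketch of the arithmetic. The function names are invented for illustration, and it covers only the three regions listed above, ignoring the mirroring.

```python
PAGE_SIZE = 0x1000   # 4kB pages


def hardware_page(software_page):
    """Page number as the banking chips see it: all eight bits flipped."""
    return software_page ^ 0xFF


def region(address):
    """Name the listed region a 20-bit software-view address falls in."""
    if 0x00000 <= address <= 0x07FFF:
        return "EEPROM"
    if 0x80000 <= address <= 0x9FFFF:
        return "RAM (read-only)"
    if 0xC0000 <= address <= 0xDFFFF:
        return "RAM (read-write)"
    return None   # a mirror of one of the above, not modelled here


def ram_page(address):
    """Which of the 32 RAM pages an address in either RAM window selects."""
    return (address // PAGE_SIZE) % 32
```

So software page 0x00 is hardware page 0xFF, which is the 0xFF000 seen at power-up, and 0x83000 and 0xC3000 both land on RAM page 3, once read-only and once read-write.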

Posted 2013-09-30.

Awesomes

While it's not something I tend to talk about on the general internet, I think it would be a little remiss if I never mentioned my children. So, I'd just like to point out that they're awesome. That is all, so back to the normal electronics and rants.

Posted 2013-09-30.

Electronics for Newbies: Main Screen Turn On!

So, after a big pile of soldering, I'm back to where I was a few weeks ago on a breadboard. Namely, I have a CPU and an EEPROM wired together, plus a latch on to some LEDs. I should be able to run my 'Nightrider' light blinker on it. Admittedly, the circuit also has RAM and memory banking, but I wanted to test the features incrementally.

So, I started up the test program and... it didn't work. Time to debug!

Investigation of the address and data bus showed that every read cycle, it was reading an 0xFF from 0x7038. The high 'F' of the address nybble is the memory banking hardware - it initialises to read from the high end of the banked memory space, which maps to the top of the ROM. The '0x38' part is more interesting. It turns out that '0xFF' is the machine code for 'RST 38', so the address contains an instruction to jump immediately to the same instruction. Why did the EEPROM contain this value at this address, causing this infinite loop?
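The 0xFF-means-RST-38 coincidence falls straight out of the Z80 opcode layout: the eight RST instructions are encoded as 11ttt111, with the jump target being ttt times 8. A quick Python sketch of the decoding (the function name is just for illustration):

```python
def rst_target(opcode):
    """Return the address an RST opcode jumps to, or None if it isn't an RST."""
    # RST instructions are encoded as 11ttt111; the target is ttt * 8,
    # which is exactly the value of the three middle bits left in place.
    if (opcode & 0xC7) == 0xC7:
        return opcode & 0x38
    return None
```

With every byte reading as 0xFF, execution reaches offset 0x38 within the page, finds another 0xFF, and jumps straight back to itself.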

Reading the EEPROM back through the programmer, it turns out that while I thought I'd instructed the programmer to program in my blinker program at a base of 0x7000, it apparently didn't. Another reprogram, and I could see the address and data buses going all over the place, apparently executing the program correctly.

Still no blinking lights.

Extensive diagnostics revealed the (embarrassing) source of the problem: I'd put the LEDs in the wrong way round.

Next steps, I'll be writing a test program to exercise the RAM and banking, checking it all works, and then preparing to add a CTC and SIO, giving me timer interrupts and serial I/O. Once that's working, I hope to add a SD card reader (apparently, this can be done even with 70s tech), and I'll have myself a simple CP/M machine!

Posted 2013-09-23.

Electronics for Newbies: A bit of an update

So, it's been a few weeks since my last post. As mentioned earlier, I'm now incrementally building a simple Z80-based computer on stripboard. It's been lots of little incremental things: Inspired by the pdf from here, I wrote a small PostScript file that generates chip labels for the chips I'm interested in, which makes wiring everything up far nicer - no need to continually refer to the data sheet. I've also spent quite an extended period waiting for a cheap ZIF to arrive, which is perhaps slightly silly of me, rather than spending a couple of quid more for express delivery. Soldering a large number (or at least what feels like a large number) of wires is very tedious.

Initially I built and tested the banking circuitry. Having had experience with breaking things through shorts, and other surprises, plus having had enough experience of developing software, I'm taking a 'test early, test often' approach. After double-checking for shorts (the loupes I have from playing around with watches being fantastic for this), I'm now powering the circuit up with an ammeter attached, so I can make sure it's drawing the power I'm expecting. I guess somebody sufficiently paranoid would have some current-limiting circuitry in place, but that's outside my ability. Fortunately, nothing was shorting or otherwise drawing unexpected current.

The oscillator unit I'd plonked in, plus the banking circuitry, took around 35mA. Those 74LS-series little SRAMs are thirsty. I followed this up by soldering in the Z80 itself, and letting it run receiving NOPs from the banking software, and the power consumption's basically the same. Add in the EEPROM and the RAM with output enable lines high (i.e. off), current pretty much the same. Everything's noise compared to the power consumption of those LS chips. I think I'll really be going for pure CMOS in the future!

Data buses are fully wired-up, so all I need to do now is get the address bus in place. Then I can test my simple 'Cylon' program again, run some RAM tests, memory banking exercisers and I'll be ready for the next expansion: Adding a CTC and SIO. With timer interrupts and serial I/O, it'll be a proper little computer.

Posted 2013-09-14.

Electronics for Newbies: Memory Banking

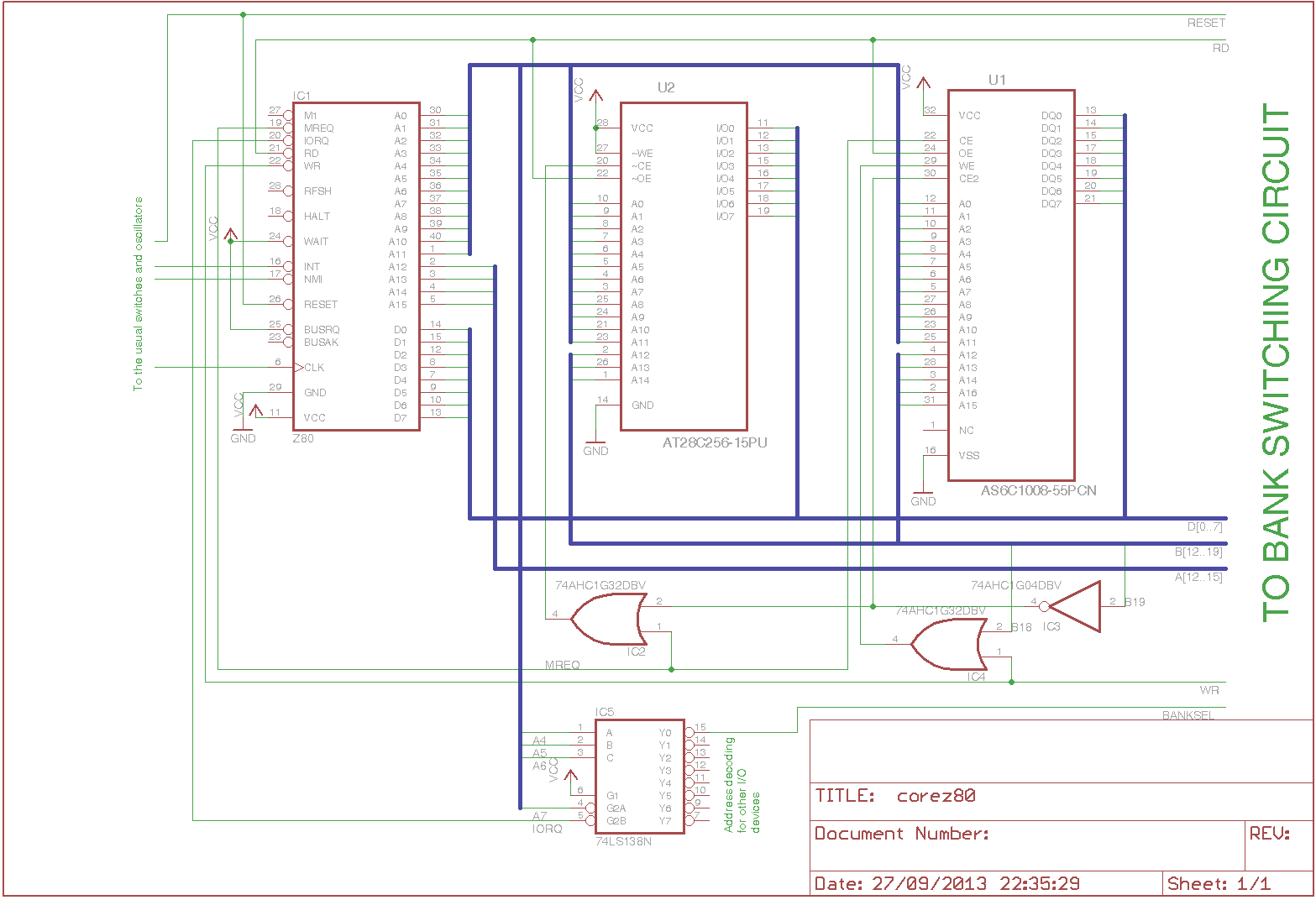

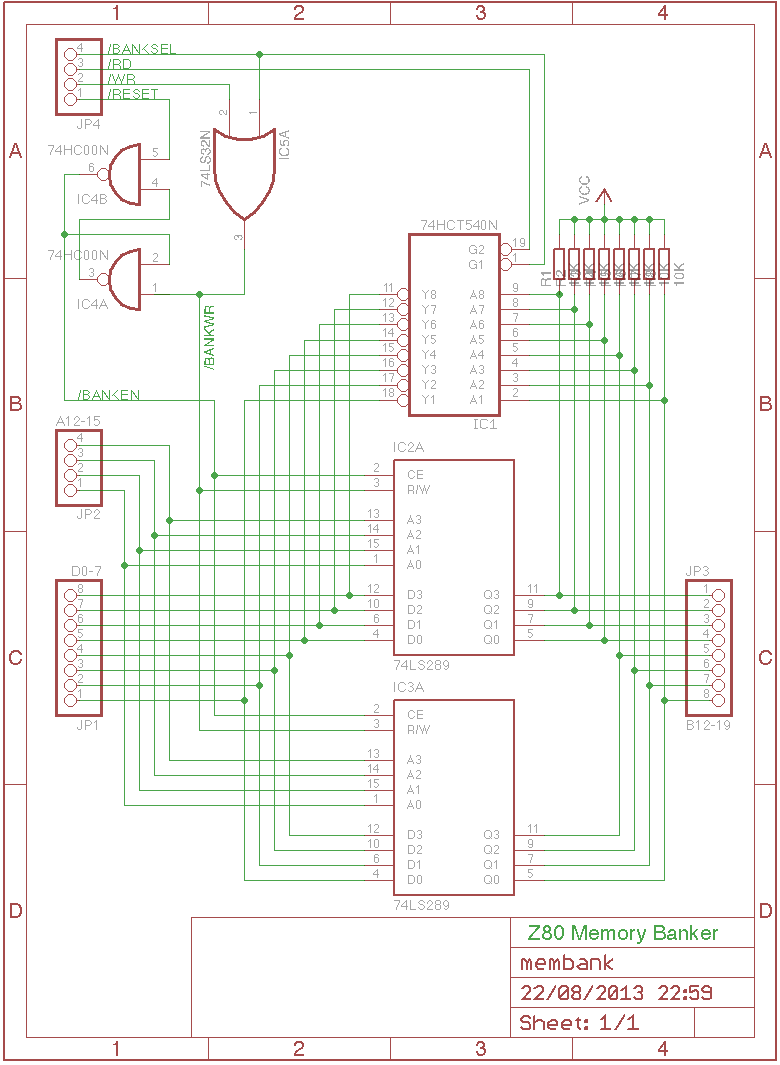

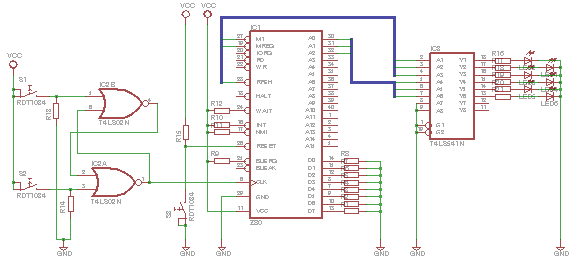

So, the main piece of interest in my noddy Z80 is the memory banking, allowing it to address more than 64K at once. Back when I had a Spectrum, this seemed something like magic, and now it's a pleasure to have a go at it myself. Without further ado, here's my dodgy schematic, thanks to Eagle.

The basic idea is that each 4K chunk of memory can be mapped to one of 256 locations, bringing the total address space to one meg. All rather 8086. To make this work, we want a little memory with 4 bits of addressing (top address bits in), and 8 bits of data (top address bits out). The 74289 more or less does the job, but only has 4 bits of data, so we use two.

Labelling-wise, An is the CPU's address bus, and Bn is the segmented/paged full memory bus. A0-11 map straight to B0-11. Dn is the normal data bus. For control signals, we have /BANKSEL, which is triggered by address decoding and IO access, plus the normal /RD, /WR and /RESET.

So, how does the circuit work? Initially, ignore the Bn bus, and the control circuitry, and just look at the 74540 and 74289s. It's really just a simple memory. Select the location via the A12-15 lines, and either write or read over the data bus. The 74LS289 has inverting outputs, so we use an inverting buffer. The 74289 is only available in LS format at best (by the time they were making CMOS 74-series chips, they probably wondered why anyone would ever want such a tiny memory...), so I need to use the HCT version of the '540 to buffer it. The 74289 has open collector outputs, which means that when they're disabled their output is all ones, and if you do a read through the inverting '540 you'll see zero, which is a nice initial value. I'm using 10K pull-ups because it's what I have to hand, and is in the appropriate ball-park for pull-ups.

So, what's all this about using A12-15 to control the configuration of banking, given that IN/OUT takes an 8-bit address that goes on the lower bits? Well, it doesn't really. You can actually control the high bits with the appropriate instructions (OUT (C), r puts the whole of BC on the address bus, I think), which was used for example to scan the Spectrum's keyboard. Here I'm using it to massively simplify the banking logic.

Why use A12-15 to select the bank to read/write when doing I/O? Simply because when we're not doing an I/O operation to configure the banking, this circuit reads from A12-15 and writes the associated memory look-up to B12-19. In other words, it performs precisely the banking look-up we need. B12-19 are TTL level, but that's ok, 'cos it's used to drive TTL-level-supporting SRAM and EEPROM.
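In software terms the look-up is a 16-entry table indexed by the top four address bits. A minimal Python sketch of that translation, with an invented function name and ignoring the inverting-output details (it works in the software view of page numbers):

```python
def translate(address, bank_table):
    """Map a 16-bit CPU address (An) to a 20-bit banked address (Bn)."""
    slot = (address >> 12) & 0xF      # A12-15 pick one of 16 table entries
    page = bank_table[slot] & 0xFF    # the entry supplies B12-19
    return (page << 12) | (address & 0xFFF)   # A0-11 pass straight to B0-11
```

For example, with entry 7 of the table set to 0x80, CPU address 0x7038 comes out as 0x80038. Sixteen slots of 4K fill the Z80's 64K, and 256 possible pages of 4K give the one meg total.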

Now, the control circuitry. Reads happen when /BANKSEL and /RD are low. Writes happen when /BANKSEL and /WR are low (signal /BANKWR). However, on start-up the '289s may be full of random cruft, and we want to start with known EEPROM mapped in. To that effect, we have a flip-flop providing the /BANKEN signal to say whether banking is switched on or not. It is reset high by /RESET, and then enabled low by /BANKWR when data is actually written to the banking I/O address (I'm of the opinion that I/O reads should try to avoid triggering side effects, hence using /BANKWR, not /BANKSEL).
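The control logic is small enough to model in a few lines. This Python toy is a sketch of the behaviour described above, not a description of the actual gates: the class and method names are invented, /BANKRD is a made-up name for the read strobe, and treating power-up the same as a reset is an assumption.

```python
class BankingControl:
    """Toy model of the /BANKEN flip-flop. Signals are active low,
    so False means asserted and True means idle."""

    def __init__(self):
        self.banken_n = True          # assumption: power-up acts like a reset

    def reset(self):
        self.banken_n = True          # /RESET forces /BANKEN high: banking off

    def io_cycle(self, banksel_n, rd_n, wr_n):
        """Apply one I/O cycle; return the (/BANKRD, /BANKWR) strobes."""
        bankrd_n = banksel_n or rd_n  # low only when both inputs are low
        bankwr_n = banksel_n or wr_n
        if not bankwr_n:
            self.banken_n = False     # a banking write switches banking on
        return bankrd_n, bankwr_n
```

A read of the banking port leaves `banken_n` untouched, which is the no-side-effects-on-read property argued for above. Only a write flips it, and only a reset flips it back.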

That's it in theory, and my soldering up will eventually reveal the practice. What could I have done differently? Well, I was tempted to have /BANKEN also reset upon, for example, NMI, so that the NMI could go to a monitor from a known state. A further extension could enable I/O reads to return the configuration, even if /BANKEN is off, enabling such a monitor to save down the banking state before doing its own thing and eventually restoring the state of the previously running program. However, I really want to keep the complexity and chip count down, and I suspect I'm not going to have the time to write complex software to really take advantage of such features. So, KISS. In which case, this whole thing is actually pointless overkill, but it's been fun.

Doing all this stuff feels surprisingly adventurous. I have to remind myself there are people ten years younger than myself working on the cutting edge, and pottering about with what is fundamentally retro technology is just so no big deal, but this is just a lot of fun. Despite a PhD (in hardware synthesis!) in the way, it feels pretty much like no gap from trying to build a simple ALU from vanilla 74-series chips in my teenage years (doing it all wrong, and having lots of fun...).

Posted 2013-08-23.

Electronics for Newbies: Getting serious

It's pretty much time to move off protoboard. For the first time in many, many years I'm soldering up a circuit board. Admittedly, it's stripboard. And this is where the problem is. When I last did this stuff, I was making small circuits, with not many connections. Digital circuitry with a lot of busses means an awful lot of wires. It also means cutting a shedload of tracks. Not that I'm into the high-frequency end of things, but it's extremely naff for that too. With all the wires and the low density of the holes, the density you can put chips in compared to a regular PCB is very low. While I'm happily committed to stripboard for this project, it's clearly not viable for doing anything serious.

So, custom-made PCBs. I don't fancy etching my own. I don't like the look of home-etched PCBs, I don't want more nasty chemicals around with the children, and there are plenty of places that'll produce professional-looking boards. At least simple surface mount is likely to be in my future, at which point things like solder mask are really useful. It's all going in that direction.

This means I'll need proper PCB software. My half-assed attempts at schematics in Eagle are going to need to be kicked up a notch if I'm going to get a PCB out at the end of it, so I'm working through the tutorial and all that. There are plenty of tutorials from people like Sparkfun showing how to get from Eagle to a nice professional-looking PCB.

It's about at this point I realise how highly the game has been raised in terms of amateur electronics, from electronics mags in the early '90s with simple analogue circuits. You can get modules for pretty much all near-cutting-edge technology, ready for amateur use. Break-out boards for all kinds of exotic BGA devices, as well as all the microcontroller boards with really useful headers. Sticking these things together is now like scripting languages when programming. Lots of high level glue and productivity-enhancing short-cuts.

However, this is not all. Even when people take the harder route, fully doing their own PCBs, things are way more advanced. Tonnes of people using really fiddly surface mount, and doing all kinds of amazing stuff. While I'm only hacking at stuff, it's nice to see the standard that can be aspired to, even amongst hobbyists.

Posted 2013-08-20.

And while I'm at it: Cory Doctorow

While I'm slagging off pseudo-geeks with stupid glasses, Cory Doctorow. I've had this rant for a while.

Cory Doctorow is a paid shill of the EFF. His doctorate is a "no study, life experience only" one from a "distance learning university" his wife runs. He is technically clueless, careless and disingenuous.

By 'paid shill', I mean he was once employed by them. By his degree, I mean he got an honorary degree in computer science from the Open University, where his wife works. The rest is accurate. My biggest gripe, I guess, is that he's disingenuous. The above is probably how he'd present someone in his situation if he didn't like them.

Take, for example, the recent Boingboing article where it turns out that the NSA funded some of GCHQ's operations. At the reasonable end of the spectrum, these are organisations that work together, and if there's a project where one party is doing more of the work, and the other party gets more of the results, it kinda makes sense from a funding point of view. However, it's not a big leap to the idea that actually, the US is paying GCHQ to spy for it. GCHQ is not an intelligence agency for the UK, but actually partially a paid consultancy for the US. Viewing it like that is kinda shocking, but that's not the angle Cory goes for. No, "NSA bribed UK spooks GBP100M for spying privileges". WTF? I guess my employer bribes me to turn up to work, too.

And that's just a random article from this morning. Everything he writes, he disingenuously spins like this. He's got a hate on for the Daily Mail. Fair enough. However, the opposite of right-wing spin carefully designed to confirm the suspicions of its readers is not left-wing spin carefully designed to confirm the suspicions of its readers. It's presenting the truth. Cory's just a left-wing Daily Mail writer.

On lesser charges, he's incredibly careless. I guess this just goes with being prolific. He'll publish stories that meet his agenda without fact-checking. I remember reading an article saying my MEP was proposing particularly evil Internet regulation. I mailed him a 'What's that about?' mail, and it turns out it's BS. Great. You see corrections again and again.

However, this carelessness extends not just to passing on third-party information, but also to writing about things he thinks he knows about. This is perhaps most visible in his computer-science-related writing. The Knights of the Rainbow Table is perhaps the single worst piece of science fiction I've ever read. A complete misunderstanding of the efficiency of compute farms and the scaling of brute-forcing problems makes it painful reading. Like a stopped clock being right, weak passwords, weak hashing and passwords shared across sites have made password-breaking an actual issue, and he's claiming prescience on it. *sigh*

This is a person who'll use the phrase 'if-then loops', and invariably ends up describing any game-theoretic situation as Prisoner's Dilemma even if it's not (although kudos to him, he recently issued a correction when someone pointed out the mistake). A little knowledge is a dangerous thing, and he's definitely on the 'little knowledge' end of things.

However, I can't really complain too heavily about this don't-get-the-details, don't-care, on-to-the-next-big-thing, let's-go-to-TED attitude. Dr. Doctorow is, in effect, an optimised product of the internet. His science fiction is amongst the worst I've read. His writings are shallow, predictable, designed to righteously infuriate their reader and/or pass on internet memes and most definitely free. He is the epitome of free driving out good, and people's inability to avoid "junk food" in all its forms. Have pity on humanity.

Posted 2013-08-02.

Electronics for Newbies: A RAM-less microcomputer

After my success with a single-instruction computer, I decided to kick it up a notch: An output-only, ROM-only computer! Before describing my exciting journey I should point out that my previous analysis was wrong: The DJNZ 0x10 was jumping each time - not going to the next instruction - but as the instruction was the same each time, we were jumping by 0x10, and I was only monitoring the bottom few bits of the address bus, I couldn't tell the difference!
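To see why the two cases look identical, here's a small Python sketch of where the opcode fetches land. It's an illustration under assumptions, not a Z80 emulator: it assumes only the bottom three address bits were being watched, and that the jump is taken every time (it ignores the one pass in 256 where B hits zero). The function name is made up.

```python
def visible_opcode_addresses(steps, displacement, visible_bits=3):
    """Low address bits seen at each opcode fetch of a repeated 2-byte DJNZ."""
    mask = (1 << visible_bits) - 1
    pc = 0
    seen = []
    for _ in range(steps):
        seen.append(pc & mask)
        # A taken DJNZ jumps relative to the address after its 2 bytes.
        pc = (pc + 2 + displacement) & 0xFFFF
    return seen


print(visible_opcode_addresses(4, 0x10))  # jump taken each time
print(visible_opcode_addresses(4, 0))     # same as just falling through
```

Both calls give the same low bits, because a displacement of 0x10 only changes address bits that weren't being monitored.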

The changes were about as incremental as possible, so no exciting debugging was necessary. Between each step I tested, and there were no hideous surprises:

- I added an EEPROM, hooked up to the address and data bus. It contained the world's simplest program to do a 'Knightrider' display on an output port. No delays were included, as we're single-stepping.

- I moved the buffer over to the data bus, to display it instead. I added an extra LED on /IORQ, so I could see the values it planned to write out.

- I swapped the buffer for a latch, triggering on /IORQ. With enough single-stepping, I could now see the pattern.

- In a separate circuit, I tested the 2MHz oscillator module I'd bought. (Slow? Yes, but I'd rather it work reliably...) It worked fine. \o/

- I replaced the single-step circuit with the oscillator, and modified the program to include delay loops. It now ran all prettily on its own.

- Finally, I added a cap to pull reset down on start up, to prevent the need for manual intervention.
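For illustration only, the 'Knightrider' pattern from the first step can be sketched in Python. The real program was Z80 code in the EEPROM and isn't reproduced here; the 8-bit width and the function name are assumptions.

```python
def knight_rider_sweep(width=8):
    """One full sweep: a single lit bit walking up the port and back down."""
    positions = list(range(width)) + list(range(width - 2, 0, -1))
    return [1 << p for p in positions]


# Each value is what would be written to the output port, one per step.
for value in knight_rider_sweep():
    print(format(value, "08b"))
```

The end positions appear only once per sweep, so repeating the list gives a smooth bounce rather than a pause at each end.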

The final schematic (apart from the reset cap, which I forgot) looks something like the following. The part numbers aren't quite right because I'm lazy and the software I use didn't seem to have the exact parts.

I have a lovely little video of the thing working, but I don't think there's anyone in the world sad enough to want to see it, so I won't put it online. Your loss!

Posted 2013-08-01.

Hipsters: Big bushy beards and dorky glasses

While I'm in a ranty mood, I thought I'd attack another pet peeve: Hipsters. Yes, I'm officially old, and I'm maligning a well-maligned and indeed easily-maligned group. Get over it. Age has some privileges.

What are the distinguishing features of hipster developers? The common ones appear to be big bushy beards and those big retro glasses. Yes, there are plenty of other features, but those are the ones that get me.

Beards: Kernighan has a beard. Ritchie had a beard. Thompson has a beard. You aren't allowed to have a big, bushy beard, in the same way that an investment bank intern can't wear bright red braces and smoke cigars.

There's an exception for Haskell programmers. They're allowed beards. No, I don't know why.

Dorky glasses: Dorky glasses were last worn by NASA rocket scientists while they were getting mankind to the moon. If you're building a social network for people who like to take photographs of their food, you should not be allowed these glasses.

I guess what gets me is that geekiness has become fashionable. But to become fashionable is to become misunderstood and shallowly imitated. Geeks may be inheriting the earth, but don't look like a geek, act like one. Speaking as someone for whom one of his best teenage memories was what his transatlantic peers might call "math camp", I'd like to say stop it, you're not a geek.

And of course, I will be wrong. Some of these geeky-looking people will actually be genuine, proper geeks. You can't judge a book by its cover. One of my most pleasant, more recent discoveries (sadly upon his death) was that all-American hero Neil Armstrong was a pretty hardcore geek. No big, bushy beard for him.

Posted 2013-08-01.

The British Censorwall: Libertarian Paternalism gone wrong

While having ISPs give their customers the option to filter stuff for them seems a laudable enough goal, the way this government is going about it seems highly suspicious to me. The proposal as it now stands makes it mandatory for ISPs to provide blocking, and the default is to have it on. For a government apparently enamoured with Libertarian Paternalism a la Nudge, this is particularly dodgy.

The idea behind Libertarian Paternalism is that in situations where the government or authority can't but get in the way, they should do so in a way that gives people the choice if they want it, but has a sensible default. So, for example, when Child Trust Funds were created, multiple providers were created (a good thing), but if you didn't choose one, you'd get one selected at random for you (better than not getting the money at all, but way worse than having an expert choose a sensible default - perhaps this is a bad example, but I can't actually think of a good example they've produced *sigh*).

In this particular situation, there's no actual concrete reason the government had to get involved. Having decided to get involved in Internet censorship, there was even less reason to take the route they have. Presumably, with a bit of arm-twisting, it'd be perfectly possible to get all the big ISPs signed up 'voluntarily'. Making it mandatory just clobbers minor and specialist ISPs with requirements that they might be ideologically opposed to. So, there must be some reason to make it fully mandatory. Moreover, making the default to be censored is a particularly odd decision.

Why would you go for default censorship? The normal argument is that you would make the default the thing that is most appropriate, best for people, etc., and people can override that if they like. However, everyone's survived fine so far without all this blocking, so it's not clear why this should be the new default. It's certainly not a subtle transition, but it emphasises the new regime. And this is what I suspect the decision is for - it's not a default that's best for consumers, it's the default best for the government. Assuming people don't override the default, they'll get nice big take-up of their blocking regime. If people can't choose an ISP that doesn't offer blocking, people can't vote with their money. If the default were no blocking, the take-up would be tiny, and the government would look stupid.

So, it's a radical reduction in choice. When I say people can't vote with their money, they really can't. If you're not charged for blocking, you're not getting free blocking, but instead everyone's paying for it, whether they want it or not. However opposed you are to this censorship, you'll be paying for it, as a prerequisite for getting internet access.

This ham-fisted nanny state stuff is supposed to be what Labour was all about. Why on earth are the Lib Dems and Conservatives ramming it through?

Posted 2013-08-01.

Electronics for Newbies: The simplest possible microcomputer

So, the last time I did any electronics, according to my blogging, was 2010. I got stuck with some crystal oscillators which behaved in an annoyingly analogue fashion, and my plan was to go model them in SPICE to understand them better. Somewhat later, after having children eat up a few years of time, I realised that I don't actually have any interest in becoming an analogue electronics expert, and I might as well stick to the digital electronics (which is noisy and messy enough as it is!).

So, getting back to the main plot, I'd rather like to build a simple 8-bit microcomputer. Z80-based, for preference. This is getting more and more difficult to justify, given that single chip microcontrollers are getting ludicrously powerful, let alone what you can get for hobbyist embedded stuff - Arduino and Raspberry Pi (hi Eben!) to name just a couple. It's almost a bit difficult to avoid cheating, since you'll probably want to use some modern interface bits and pieces, but it's far too easy to, well, make it too easy for yourself.

As it is, I'll be using nice modern software tools - cross-assemblers and schematic editors, modern docs off the internet, etc., but I hope to at least partly keep to the spirit. When it comes to I/O, I'll probably just tack on a modern intelligent LCD and PS/2 keyboard, despite both of them effectively cheating, once basic RS232 serial is working. I see little point in trying to give myself too much pain in a basic project!

First step, though, I wanted to create the simplest possible Z80-based system. To do this, I created a single-byte hard-wired ROM out of, well, 8 resistors. This is what I mean:

Specifically, I wired up the data bus to produce NOPs. The CPU should just step through memory, doing a combination of opcode reads for the NOPs and refresh cycles for the Z80's DRAM support thing. The plan was that I could then watch the pins of the CPU as it does its stepping, with my manual clock cycling. I put in the flip-flop to make sure things were nicely debounced. Pretty much everything had a 10k resistor between it and a power rail, having had previous experience of unnecessary accidental shorting breaking stuff. I've also missed out decoupling caps and the rather useful power LED to remind me not to faff with stuff while it's on.

So, I powered up the circuit and was mildly surprised to see it running through the following cycle of states (sampled consistently in clock high or low state - I forget which, and don't care much :)...

| Address | /M1 | /RFSH | /MREQ | Description |
|---|---|---|---|---|
| 0 | 111 | 111 | 111 | Init |
| 0 | 00 | 11 | 10 | Opcode |
| 0 | 111 | 001 | 101 | Refresh |
| 1 | 11111111 | 11111111 | 10011111 | ? |
| 2 | 00 | 11 | 10 | Opcode |
| 1 | 111 | 001 | 101 | Refresh |
| 3 | 11111111 | 11111111 | 10011111 | ? |
| 4 | 00 | 11 | 10 | Opcode |
| 2 | 111 | 001 | 101 | Refresh |
| 5 | 11111111 | 11111111 | 10011111 | ? |
| 6 | 00 | 11 | 10 | Opcode |
| 3 | 111 | 001 | 101 | Refresh |
| 7 | 11111111 | 11111111 | 10011111 | ? |

Each row represents several cycles where the address bus remains the same. The 0s and 1s are the values of that line for each of the cycles at that address. The very first line is post-reset initialisation. After that, there appear to be cycles of three different patterns...

Specifically, it shows the odd and even addresses behaving differently. According to the M1 line, opcodes are read at addresses 0, 2, 4 and 6. After reading the opcode, there are refreshes, running at once per instruction, at addresses 0, 1, 2 and 3, and then some non-opcode read at addresses 1, 3, 5 and 7, taking 8 cycles.

So, what's that about? Odd and even instructions apparently behave differently. Perhaps it's not actually a pair of instructions, but treated as a single instruction. The total instruction time is 13 cycles. An online search reveals that one of the few instructions taking 13 T-states is DJNZ, in the 'not jumping' case. It is an instruction with a single byte operand. Its opcode is... 0x10. Perhaps my D4 pull-down line wasn't working properly?
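A quick sanity check of that arithmetic, as a Python sketch. The traces are copied from the /MREQ column of the table above, one character per clock; the helper names are made up here. It also shows how a single data line reading high turns the hard-wired NOP (0x00) into 0x10.

```python
# One /MREQ trace per phase of the repeating pattern, copied from the table.
MREQ_TRACES = {"Opcode": "10", "Refresh": "101", "?": "10011111"}


def cycles_per_instruction(traces):
    """Total clocks in one repeat of the pattern: one character per clock."""
    return sum(len(trace) for trace in traces.values())


def bus_value(lines_high):
    """Byte seen on D0-D7 when the listed data lines read high, the rest low."""
    value = 0
    for bit in lines_high:
        value |= 1 << bit
    return value


print(cycles_per_instruction(MREQ_TRACES))  # clocks per repeat of the pattern
print(hex(bus_value([])))                   # all lines pulled low
print(hex(bus_value([4])))                  # D4 alone reading high
```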

Sticking a 'scope on the pins showed nothing surprising. However, if I applied 0V directly to the pin, rather than through a breadboard connection, it started working properly. I expect a nice floaty CMOS input would be fine for showing one thing on the scope, and something else to the CPU. Fun, fun, fun.

So, my hardwired program was apparently 'DJNZ 0x10'. I now have a better understanding of the Z80's instruction cycle (including a realisation of quite how slow old CISC processors are - how many cycles to complete a simple operation?!). I also have a bit of a worry about how painful debugging a non-trivial microcomputer may be. If a circuit as simple as this needs debugging and investigation, how much fun will a real computer be?!

Posted 2013-07-28.

'Why Pascal is Not My Favourite Programming Language', revisited

I was having one of those conversations at work with someone who didn't want programming languages to "nanny" him. I am fully in favour of programming languages that nanny - most work ends up maintaining other people's code, other people are idiots (*), and anything that minimises the pain is a good thing - analysis tools, test suites, etc., and even just not having the language provide you with every conceivable option and say 'sure, I trust you'. There's a reason we don't use Perl for everything. I want languages to nanny people, especially the vast majority of the people who claim they don't need nannying, because they know better. Most don't.

(*) This isn't egocentric. It holds true for you, too. From your point of view, I'm an idiot. This is because everyone's an idiot, but because there are far more other people, and just one you, you notice other people more. And just perhaps, you have a bit of a blind spot to your own stupidity. Also, I'm being a little unfair. Most people are idiots all of the time, and some people are just idiots some of the time. Nonetheless, everyone generates bugs.

The proposed root of this person's view was that their first proper programming language was Pascal, and that nannied far too much. This reminds me of Kernighan's Why Pascal is Not My Favourite Programming Language. Admittedly, the Pascal I learnt to program with was the legendary Turbo Pascal 5.5, which played more than a little fast and loose with the original Pascal constraints, so I wasn't bitten by limitations.

A number of points made in that paper are quite valid - not so much about nannying, but about poor language design - such as having a fixed array size as part of its type. On the nannying side, it enforces structured programming (no breaks or early returns, which do tend to bite), it lacks a macro processor (either used for defining constants, badly, or providing exciting ways to screw things up), and there's no way to cast pointers around to construct your own allocators, etc.

In the end, C beat Pascal handily, but at what cost? C is a great systems programming language, but it's still used even now for the most inappropriate tasks. On the one hand, the ability to cast pointers however you like and have no array bounds checking has led to decades of security holes and nightmarish bugs. On the other, productivity is dire, as there's no way to really climb up the layers of abstraction. Manual memory management is error-prone, and efficient generic containers will just make you want to cry.

In comparison, some of those ideas in Pascal are somewhat prescient. Structured programming can help optimising compilers. If you can't tweak and cast pointers any way you like, you have a hope of creating sandboxable programs, and a lack of aliasing may help vectorisation. A restricted language helps with all kinds of analysis. The mindset behind Pascal is effectively the mindset behind Java and C#. The modern C is C++, which is just epically twisted and nutty, and will actually positively encourage you to shoot your own foot off.

For most enterprise applications programming, Java or C# is the right choice. The Pascal side beats the C side. If you work in a large company with lots of other developers, where reliability and maintainability count above micro-optimisation and being a unique snowflake, well, suck it up. And occasionally, just occasionally, a runtime binary patch is the correct solution, and you can run wild.

Posted 2013-07-11.

Cynicism about motivation in science - An inappropriate approach to climate change

Default cynicism is only slightly less lazy than pure naivety.

As I'm on the science end of things, I'll call it arts-student skepticism. The basic idea is that, when you hear someone's opinion, you question why they're saying it, based on their vested interests. "You can tell when a politician is lying - his lips are moving." In finance, you expect people to "talk their book" - say things that will encourage the markets to move in a way that profits them. This is certainly an improvement on taking whatever people say at face value. People who spot conflicts of interest often seem terribly proud of their cynical insight.

However, discarding whatever others say when it's convenient to is just broken too. It's a huge mistake to confuse the person saying something with the actual message, even if the correlation in general is huge. Idiots can hit on the truth, and geniuses can make mistakes. So, discarding messages based on 'Well, they would say that, wouldn't they? It's in their best interests' is pretty awful. It's laziness, rather than addressing the issue based on actually understanding the topic, and evaluating the speaker's argument.

Of course, it doesn't really help if the speaker doesn't actually provide much in the way of a logical argument. In the public sphere it's probably rather foolish to do such a thing, as it a) is boring, and b) opens you up to someone pointing out any mistakes you've made.

Public arguments are transmitted by journalists, who will mangle anything of consequence. Applying the cynical framework, their job is to get readers, not identify and transmit Platonic truth. And even if you're not cynical in that regard, journalists are non-experts who are trying to make what they're saying as accessible and interesting as possible. If you ever read an article in an area you're an expert on, you'll see how wrong they get it. It's the same for whatever they cover, whether you know it or not.

And this is the world that climate change has to deal with. Handling climate change is politics. So, when a bunch of scientists say something, the default response is 'They're scaremongering to get themselves on the gravy train.' Aaaaargh. Climate science is the messy end of science. It's a really complicated system we don't fully understand, and it's not like we can do controlled experiments. It's almost as bad as social science. However, it's still science. Go look at the evidence. Understand the issues. Skepticism is an important part of science, but it's about the ideas and evidence, not people.

Science has to meet politics, too. The evidence has to somehow eventually become policy, policy that won't annoy the electorate too much, and is internationally acceptable. Scientists in general are poor at this, and have to work hard to get their message across.

However, deliberate ignorance through a carefully selected diet of poor information and assumption of cynical motivation is repulsive. As Feynman said, "For a successful technology, reality must take precedence over public relations, for Nature cannot be fooled."

Posted 2013-06-27.