Simon Frankau's blog

Ugh. I've always hated the word 'blog'. In any case, this is a chronologically ordered selection of my ramblings.

Technology vs. social norms ('Like')

Back in the proper days of Usenet, people tended to write long posts, and even if they weren't long they had big headers and got distributed all over the place, so the cost of being spammy was non-trivial. It was not Twitter.

So, there was fairly heavy social pressure not to write 'me too!' e-mails. Which people inevitably did, and were told not to. They added nothing to the conversation, you see. Well, kinda they did, in that they could emphasise consensus, but the cost to do so was too high, so everyone pretended such 'aol' posts were worthless in the world of weighty conversation.

Come Facebook, posts are much, much shorter. On the other hand, people still want to agree with the poster. You don't even need to comment to agree - there is a 'Like' button. So, rather than creating a social solution to a technical problem - the Usenet approach - the technology is modified to meet social needs.

While it's traditional to bemoan the move away from Usenet, in this way, I think the change is an improvement.

And then there's Twitter, which is just all spam. ;)

Posted 2013-06-23.

Notes on being a software developer 2: Things to learn from books etc.

First, the fundamentals. If you've got the right mindset, and you know what you want the machine to do, you can probably write something to make it do it in some half-hearted way. The trick is to make it do it fast (algorithmic complexity) and well (formalise the problem, consider corner cases, implement to spec). And that is algorithms, the core of the subject.

Most developers will, admittedly, spend most of their time gluing together other people's libraries. But abstractions leak and you'll need to look inside the black box. You may well need to write your own libraries. And remember that nothing a computer does is magic, so you should be able to get up to your elbows in gubbins on any part. So, even if your day to day job isn't coding clever algorithms, it should be your bread and butter. Put another way, do you want to be a mechanic, or an engineer?

So, algorithms, algorithms, algorithms. That includes data structures and complexity, and all that. I give two thumbs up to Cormen et al's Introduction to Algorithms. It covers the basics and does some very interesting advanced topics, and has lots of references to papers for further stuff.

As it provides a rich toolkit of ideas, plus useful vocabulary, add basic computer science theory. Computation theory with regular languages and context-sensitive grammars, lambda calculus, that kind of thing. First order predicate calculus. Probably learn ML, Haskell and/or Scheme.

Eventually, it all hits hardware, so learn computer architecture. Hennessy and Paterson will do the trick. Great book. Readings in Computer Architecture was also pretty awesome. Digital logic and maybe a little VLSI help round it out. Abstractions leak, so it's still useful if you never pick up a soldering iron.

Then there are the other staples of a traditional computer science undergrad degree: Graphics, security and cryptography, networking, compilers, databases, etc. They pretty much all have interesting ideas associated with them. Learn a bit of all of them, and dig deeper on the ones that pick your fancy.

You've probably noticed that I'm being heavy on the theory, and very light on the specific technologies. That's because it really is all about the ideas. The technologies keep changing, and while ideas change too, it's at a much slower rate, and it allows you to see those things that are invariant.

Learning specific language is necessary, of course. They're fundamental tools, and if you can use a language efficiently, things are pretty bad. Moreover, some languages are really effective ways of getting across concepts. On the other hand, some languages are sufficiently baroque that people become experts in the language and its tricks, not programming. *cough* C++ *cough* People can end up thinking they're designing in the abstract, when in fact they're designing in a particular language. Obviously, what you design must be implementable in the language used, and sometimes it makes sense to play to the language's features and idioms, but fundamentally, you should be designing something that architecturally makes sense even to someone who doesn't know the language. Again, as an example, some people have their concept of what object orientation should be broken by C++.

So, learn languages, but I'd recommend wide rather than deep. Both is best.

Then there are specialised algorithmic areas that have some really interesting stuff, but don't show up on the wider radar much. Good psuedorandom number generators and hashes. Compression and error detecting/correcting codes. That kind of stuff. Between my undergrad and now, machine learning has become a real subject with lots of interesting things to learn. Outside but near to computer science, there's plenty of discrete maths. Play with stuff like game theory, perhaps?

A lot of this is about getting the best possible toolkit to think with. So, practice is useful. There are lots of puzzly things that both give you practice, and may throw you new stuff too. Project Euler is fun, as are competitions like Google's Code Jam, and there is an apparently never-ending set of interview puzzles, all of which make good exercises. I'm sure you can find more.

This has been fairly algorithmically-focused. There's a lot of process and design stuff that is incredibly important, but I'm not sure it's something that's easy to pick up from books. There are books like Code Complete which I guess are worth reading, but I think this is particular area where experience seems to do its thing, or at least it's not well-enough served by books.

On the other hand, I'm actually quite positive about books on management and leadership. Too many people treat managing teams or leading projects as this incidental thing they fall into. Whether or not you like it or are interested in it, if you're going to do it, do it right. As managers like to write documents, there are plenty of decent business books in this area, and it's worth reading a few. Even if you're not managing, you can understand what your manager should be doing, how to make their life easier, and use them to make your life easier. Books can be useful because they allow you, even in a possibly dysfunctional management environment, to understand how things can and should work.

I guess the idea here is to be an idea infovore. Try to keep learning new things, but particularly favour those things that give you new ways of looking at the world, new tricks, and ways to get to the nub of it all.

Posted 2013-05-19.

Paper round: Java and trading

A number of months ago, I moved to a team which does algorithmic trading in Java. I had little expertise in algorithmic trading or Java, so I did a little bit of reading. Not a huge amount, but just enough to get an idea of the field. I also just read a bunch of papers that seemed fun that I hadn't got around to otherwise.

Making a Faster Cryptoanalytic Time-Memory Trade-Off - Philippe Oechslin This paper describes rainbow tables, which is a space efficient way of storing password reverse look-up tables. I'd seen them about the place, including being referenced by (but not understood by) Cory Doctorow - see the awful Knights of the Rainbow Tables for example. I'd read the Wikipedia explanation, but it never really clicked. So, I read the original paper, and it made sense for a while. It's slightly fallen out of my head now, but it clicked nicely for a while. :)

Implementing lazy functional languages on stock hardware: the Spineless Tagless G-machine - Simon Peyton Jones A while ago, I tried reading SPJ's book on lazy language implementations, which went via spines and all the rest. I move incrementally from finding it heavy going to getting lost. Given that the STG is core to GHC, and Haskell was my day job for so long, I thought I owed it to try to understand the STG. So, I read this extended paper, and it's very straightforward! Without spines and all that, and devoid of the memoisation part, the non-strict evaluation is quite clear, and the virtual machine it describes is not far off a strict machine. The adding of updates for memoising results is clever and subtle, but it written in a way that makes it feel not totally impossible. It's a really well-written exposition that makes the whole thing come together for me.

JSR 133 (Java Memory Model) FAQ - Jeremy Manson and Brian Goetz As the algo trading system I work with is (unsurprisingly) multithreaded, and the Java memory model is new since I last used Java in anger (i.e. around the turn of the millennium), I thought it would be a good idea to get up to speed on what Java guarantees. So, this document provides a nice summary of that.

JSR-133: Java Memory Model and Thread Specification This is the official spec itself. It's got a formal model. However, I found it quite disappointing. The informal rationale didn't really make things clear, and the formal model was simultaneously a bit woolly in places, and actually surprisingly unintuitive. It could just be that I'm a bit dumb. However, I like to pretend that I'm not, and even if I were, a decent write-up would allow me to understand it easilt enough anyway.

The JSR-133 Cookbook for Compiler Wrters - Doug Lea And this one tries to cover everything again from another angle - that of a compiler writer. A slightly interesting alternative, but not really terribly insightful.

Generics in the Java Programming Language - Gilad Bracha The other particularly funky thing that turned up in Java was generics. The basic idea of generics is simple. The full subtle complexity is... subtle and complex. You can do most stuff without worrying about it. I hoped this document might cover the other side. It didn't. I think I might need to go to the spec.

The Reyes Image Rendering Architecture - Cook, Carpenter and Catmull That's Java, so now a random other paper. And it's a bit of a classic. Reyes is the algorithm behind Renderman, Pixar's rendering system. So, it's a kinda lightweight, high-level paper, but it does give you the flavour of it, micro-polygons and all. It's all rather interesting, and well worth reading.

NVIDIA Fermi compute architecture Not actually related to Reyes! So, in my last role, we were looking at GPUs for computation. I realised that I didn't really know what the architecture involved really was. Turns out, it's basically a multiprocessor system where each processor is itself a SIMD processor with multithreaded scheduling (a la Hyperthreading). Not a surprise, I guess, but fun to understand where the state of the art is/was.

Ecology of the Modern Institutional Spot FX: The EBS Market in 2011 - Anatoly Schmidt EBS is one of the online exchanges. Some people access it through GUIs, others through algorithmic trading systems. It's interesting to see how it's used, and who makes money off who, etc. Also, it's interesting to see the size of the market, the overall structure, etc. This paper focusses on liquidity in this market, but does provide some other interesting background.

The Trading Profits of High Frequency Traders - Baron, Brogaad, Kirilenko This paper tries to work out who profits from whom, in terms of traders they categorised as aggressive, medium and passive HTFs, fundamental traders, small traders, non-HFT market makers and opportunistic traders. They did this by analysing data from S&P 500 eMini trading. It does this competently enough, not really revealing anything that will help make profits, but at least making the structure of the market that little bit clearer.

Does Academic Research Destroy Stock Return Predictability? - McLean and Pontiff This paper looks at published studies of stock market anomalies, and investgiates whether the returns decrease once they're published, presumably as they're arbitraged away. It seems they do. Not a real surprise. What's impressive is how non-commercial this kind of research is. Not only does it not actually produce a convenient list of stock market anomalies you might trade off, but it doesn't look at these anomalies in terms of risk-reward tradeoffs. So, it's not clear what the limits of arbitraging away are - has it just hit a level where the risk isn't worth it, or what? Useless social science research. Gah.

Is Everything We Know About Password Stealing Wrong? - Florencio and Herley This rather random paper is a simple one-trick pony. It points out that because banks can mostly undo disputed transactions, online theft mostly consists of stealing money from mules ('work from home, processing transactions!'), and it's waaay harder to find mules than compromised accounts. While it's not deep, it provides a fairly sensible reality check on the economics of password stealing. Fun.

A Parameter-Free Hedging Algorithm - Chaudhuri, Freund and Hsu I saw a presentation where this approach was mentioned for an online-learning decision theoretic system. It made sense at the time. Then I read this paper. It describes the simple algorithm, succinctly. It proves useful properties in an appendix. What it doesn't do is motivate the underlying problem. It explains the advance over the previous unexplained state of the art. As I'm lacking all the background in this area, I'm completely missing the point of what this is about and why I should care!

The Influence of Organizational Structure on Software Quality: An Empirical Case Study - Nagappan, Murphy and Basili Conway's law says organisations produce systems that mirror their organisational/communications structure. This paper extends that idea - that bug defect rates can be predicted from organisational information, when related to source control information - so if code is edited by lots of different people in various unrelated teams, you know you've got problems. The 'empirical case study' in question is Windows Vista. They claim good results, even compared to other metrics that look at the code itself, but it never really convinces me. There's some correlation/causation stuff going on here - I'm not quite sure if the analysis is picking up the right stuff, but it does make a very fun formalised anecdote.

Searching for Build Debt: Experiences Managing Technical Debt at Google - Morgenthaler, Gridnev, Sauciuc and Bhansali 'Technical Debt' is such a great phrase - it's actually pretty good at getting across to management why, just because it works right now, not tidying things up is a bad thing and will bite us in the future. All projects get technical debt. Well, either that or they're not real world projects, and probably won't ever be used. :p So, how to manage technical debt is a bit of an issue, and any ideas how others do it are interesting. Sadly, this paper covers a few very specific cases, and so falls rather short of my hopes.

CP-Miner: Finding Copy-Paste and Related Bugs in Large-Scale Software Code - Li, Lu, Myagmar and Zhou Another perennial issue is code duplication. At least it is where I work - I work with mathematicians and scientists who aren't necessarily highly experienced with good software development practices. And sometimes, they're up against tight deadlines. So, copy-and-paste is a way of life. So, code bloats, bugs are only fixed in one of several locations, etc. There are tools out there to analysis this stuff, so I thought I'd read up on them. This paper starts inauspiciously, but is actually rather good. The algorithm does some neatish stuff, the analysis shows more-or-less what you'd expect (most of the copy-and-paste in the Linux kernel is in drivers), and it does a rather neat thing of spotting copy-and-paste related bugs. The only downside is that the actual CP-Miner software is only available commercially, and the open source equivalents are nothing like as good. Ho hum.

Posted 2013-05-12.

Christminster, completed

I have finally completed Gareth Rees's highly recommended

After that, I zipped through the rest. Having spent so long, I was willing to take hints. The telephone puzzle is actually technically broken (grr), and the rusty key was just me forgetting about one aspect of the game. Finally, completion! What next?

Posted 2013-05-09.

Notes on being a software developer 1: Learning stuff

I'm writing up my thoughts on, if not how to be a better developer, at least how to be more like a developer who thinks like I do. I'll leave it up to you to decide whether this is a good thing or not.

Rather embarassingly, one of my strongest beliefs is that you can't make a good developer through paint-by-numbers, despite what I'm doing here being to create a big piece of paper with numbered regions. I guess what I'm hoping to produce is a guide for those with the potential, to help them shortcut the process.

Or, alternatively, I can give up the pretence that it's to help some hypothetical junior developer, and say it's a braindump of my worldview, because I'm arrogant. Doesn't really matter, does it?

Anyway, this is quite long, so I'll break it into chunks...

Learning stuff

- Learn from your mistakes Some people have n years of experience. A lot of people seem to have one year's worth of experience, repeated over and over. If you don't learn from your mistakes, it's not going to be much of a career. Learning from successes is actually a little dangerous - there are often multiple ways of doing things, and sticking with one that worked ok in the past may stop you from exploring even better solutions. This is not suggesting change for change's sake, but be wary of ignoring better solutions, having found a "good enough" one. With failures, the cause is often clear.

- Learn from others' mistakes If you only learn from your own experience, you can only gain one lifetime's worth of experience, at the rate of one year per year. If you can properly learn from others, you can learn so much more, and moreover you won't actually have to spend the time doing it wrong. When someone tells you "don't do it that way", it's very possible they're wrong (see other rules), but also that they have some experience or knowledge that you can learn off.

- Learn who to trust Vociferousness, experience and intelligence are all impressively unreliable indicators of whether someone's opinions are trustworthy or useful! Some combination of coherence and logic to their thoughts, effective domain-specific experience, empirical evidence and self-knowledge of the speaker might all be more helpful indicators, but it's difficult to articulate. Which is a shame, as it's really important to choose the right people to listen to (and no, "people I agree with" is not a good criterion unless you want to get stuck in a rut). But you trust me, right?

- Learn outside work This isn't a workaholic "do work at home" thing. This is just having "learn new stuff" as a hobby, something you do all the time. Sometimes it's tangential to work, sometimes it's unrelated, sometimes it's basically work stuff that's interesting and you don't want to leave it in the office (if there's nothing at work like that, get a better job). Always keep learning.

- Learn inside work Often, you'll accidentally learn things as a side effect of doing your job. Learning new stuff should be a big part of your job. It makes you more effective and more valuable, so it's a good use of time. Colleagues will often have practical knowledge that just isn't available in any books. Clearly it's more useful to learn the generally applicable things, rather than workplace-specific minutae, but there you go. Part of this is being very willing to ask questions, even if they sound dumb. Generally, if you phrase it in terms of wanting to clarify things, to make sure your inferred model is actually right, it doesn't sound so dumb. Especially if it reveals that you misunderstood something, or that the person you're speaking to doesn't really understand it either!

- Learn from the good stuff As well as finding clever people you trust to learn from, learn from the writings of the best. Find out what the best books are, and read them. Generally they're written by the real experts in the fields, and they're well-written, clear, comprehensive and just awesome. Why would you pick up some "in 24 hours for dummies" book, when you could get a Cormen et al or Hennessy and Patterson? Once you hit the end of standard textbooks, start reading the best research papers. Open source gives you the opportunity to read and learn from high quality code, and I should do more of that (but choose wisely!). And nowadays, there are online courses from some really decent academics (although the standard mostly seems to stay around first year undergrad), and some really funky research-level blogs. So much to learn.

- Learn hard Read up on how Ben Franklin taught himself stuff. It's astonishing. If you can even vaguely emulate that... wow.

I'm pretty sure all the above must sound really, really obvious, but I thought I'd spell it out because there just seem to be so many people who don't keep learning, and over time just put themselves at a disadvantage. Nutters.

Posted 2013-04-20.

The flaw in LinkedIn's endorsement system

Steve Jobs said that A players hire A players, B players hire C players. I have a suspicion that something not dissimilar happens with LinkedIn endorsements. Who would you endorse as having skills you feel you are strong at? Personally, I'd only endorse people who are really good at that thing - the endorsements are a reflection on my judgement, after all. So, generally, I end up only endorsing those better at the thing than I am. To endorse those weaker than yourself is either to say that you're incredibly brilliant, or have low standards.

However, such endorsements aren't really helpful, either. If you're looking for a top X, why would you trust the judgement of a relatively weak X in comparison? I guess endorsement allows the creation of self-regarding clusters of Xers who all endorse each other, but I'm not sure that's helpful either.

It's a bit of a difficult system to avoid, too. You see messages like 'Do you want to endorse Simon Peyton Jones's Haskell skills?', 'Do you want to endorse the Pope's Catholicism skills?' and 'Do you want to endorse this bear's arboreal defecation skills?', and... well, it's hard not to (NB: I've only actually seen one of those). So, top people get stupidly obvious recommendations from people that don't really matter.

About the only useful case I can see is something like a 'recommend a plumber' - you see a few recommendations like 'I'm an Xer, I needed a Yer as I know nothing about Y. Fred did all the Ying I needed, and now I'm happy.' Useful for consultants to sell their customer-facing skills, but not much else.

Posted 2013-04-11.

Why I don't like Bitcoin

Bitcoin is fascinating cryptography, but bad economics. It's structured to produce a deflationary currency. Yuck. It eliminates the banks and all that, but banks do a whole pile of stuff actually quite well, with quite an advanced structure. Bitcoin is stone-age, comparatively.

Bitcoin makes promises it can't keep. Your transactions are super-secret, except actually they're just there in the log, and they're only secret if no-one knows who owns the account. Cryptography keeps your money secure, except everyone and their dog involved with Bitcoin gets hacked. Bitcoin is a useable method of payment, except the exchange rate swings wildly all over the place, making it more appropriate just for speculation. Bitcoin will make you rich, but, well, actually it's made some people rich, but there have been nice, big crashes, and late-comers aren't going to get rich.

Bitcoin has a depressing world-view. You can't trust anyone, so heavy crypto is needed. Yes, I know that's a pretty standard view for crypto projects, but in this case it's pretty much right. It seems everyone is out to scam you, and the government won't help you this time. Bitcoin "makes the government scared" because they won't be able to steal your money in taxes. Government clearly being an evil arm-twisting force, rather than a mechanism by which we get schools, healthcare, social security, law and order, and all that non-libertarian stuff. And more practically, Bitcoin is used to order assassinations and arrange drug deals. Yeah, I know that the same could be said for cash. Doesn't make it a good thing for a new invention to do.

Bitcoin is a waste. The zero-sum nature of Bitcoin mining drives it towards the price of a Bitcoin being the marginal cost of mining it in terms of hardware, power, etc. Bitcoin generation is proof-of-pointless-work. We're wasting CPU time and using electricity up, pushing climate change along for the sake of the algorithm. If you think the high-frequency trading arms race is bad, it's nothing on this deliberate, brazen burning of compute power.

Bitcoin is Arnold Rimmer's ideal currency. Dressed up in the politics of crypto-libertarianism, of freedom from the banks and government, of progressive cleverness, is the underlying hope to get rich quick. Ideally, in a way that the government can't tax. For that, it's structured really well. The deflationary structure allows those that get in early to get their Bitcoins more easily. Then it's just a matter of getting the price up. To get the price up, those early adopters shout loudly and get the next round of people in, driving up prices in full bubble style.

Underlying it all, there's no concrete value. That's just like fiat currency, you might say. Well, yes, except fiat currency is backed by governments and centuries of experience. They mostly don't gyrate wildly within a day. And sometimes, those currencies do collapse. Aha, you may say, Bitcoin is immune to inflation by design, so it can't collapse into hyperinflation. Sure, the number of coins in circulation is controlled, but if confidence collapses, no-one's going to pay for them.

None of this means that Bitcoin won't be succesful. It just means that I don't like it.

Posted 2013-03-08.

Truth in Infographics: Tufte vs. McCandless

I saw this link on Facebook, and my opinion on it was a bit too big to fit on Facebook. So I'm writing it here, instead.

The summary of the video is that the wealth inequality in the US is too big. This is something I entirely agree with. However, the way this is presented stresses me. The analysis is straightforward and slick, cutting through the complexities to deliver a simple message.

However, it's a complex subject. As an engaging, entertaining introduction to the subject, it completely discourages you from engaging in critical thinking, or even realising there's more to it than what you're showing. You can come away thinking 'Yes, this is a serious problem', when that's not actually the evidence being shown. Ugh.

What's wrong with the analysis? The initial analysis was based around what a survey of 5000 Americans thought was the wealth distribution per quintile, and what was the ideal distribution. This is then contrasted against the actual distribution. The message meant to be taken away was 'inequality is way more than it should be'. The message I read got was 'people are incredibly naive and mathematically weak'.

The estimate was that the top 20% had a little over half the wealth. In other words, the top 20% has, on average, four times as much wealth as everyone in the other 80%. Despite the fact that you're taking an arithmetic mean over a portion of society including wads of millionaires and a number of billionaires. The outliers really skew stuff, and it seems people don't get that.

The 'ideal' distribution had the wealthiest 20% having less than double what the middle 20% had. That's a level of naivety that boggles me. Even ignoring the billionaires and the millionaires, people from the land that gave you the American Dream, people reckon that the upside to entrepreneurship, or years of training, or whatever should top out at around double what everyone else does.

And all this is before you think about what 'distribution of wealth' means. Wealth has a funny relationship with lifestyle. Most people don't buy a house outright, so the availability of credit is important, which is probably a bit more tuned to income than wealth per se. Actual wealth is likely to vary over a lifetime - you save for your retirement, then use the money up. Wealth means different things in different places - you can earn a lot more in a big city, but need to spend a lot more too. So, taking all that into account, the 'ideal' distribution looks incredibly flakey, even before considering that people may have different amounts of wealth for reasons beyond where they live and how old they are.

So, at this point, I feel the evidence is that the public don't know what they're talking about, and should be ignored when it comes to defining a sensible level of inequality, at least until they've done some proper in-depth data analysis. The video, however, uses them as a baseline for what follows.

The actual figures give the top 20% having a little over 80% of the wealth, and the top 1% having around 40% of the wealth (but 24% of the income). This is, I think, meant to be shocking, but it's pretty much in the nature of a long-tailed distribution like this.

As the data is nice and easy to find, I looked at the distribution of US billionaires. Even at that level, the top 20 of 100 billionaires had half of their wealth. The richest person had 20 times as much as the hundredth richest person. Wealth seems to follow some exponential process, and you'll always look very poor compared to those above you.

I really don't think it's worth trying to eliminate the form of the distribution, as it seems something fairly fundamental. Indeed, this exponential curve is what you expect with money growing/shrinking multiplicatively. What you can do, though, is try to tweak the constants of the process - progressive taxes, prevention of tax avoidance, chunky inheritance tax laws, etc.

However, the video captures nothing of this. It rails against a curve shape seen pretty universally around the world, rather than the specifics of how inequality has been increasing for decades in the US. It takes a real issue and turns it into mental mush.

So now... Tufte vs. McCandless. Edward Tufte has shown us that good information visualisation is vital. Data can be made beautiful, and insights laid bare through appropriate representation. If it's truthful, it should be beautiful. Infographics, represented by McCandless's Information is Beautiful have taken this idea and run with it. A superficial understanding of the issues by graphic designer types lead to a pile of data being made simple and beautiful. However, a lack of understanding of the underlying thoughtfulness of Tufte's work means that while these diagrams follow in form, they miss the real point. A superficial understanding leads to a superficial presentation.

However, we've been trained to believe in simple, straightforward explanations displayed elegantly. If it's beautiful, it must be truthful. Just as we believe talking heads with strong opinions over far more knowledgable experts who hedge their opinions, we trust these bold graphics.

And thus we demean the discourse on vital issues. Ho hum.

Posted 2013-03-06.

More ZX Spectrum Cross-stitch!

I couldn't resist doing a bit more ZX Spectrum cross-stitch. These ones are a bit less colourful, but still make me happy with retroness. For the less well-informed, these are a still from the Manic Miner "game over" animation, and the message that ZX Basic gave you upon successful completion of a command.

These bookmarks were late Christmas presents for a couple of friends, so they're finally unembargoed. Hopefully this has got the cross-stitch out of my system for a while!

Posted 2013-02-06.

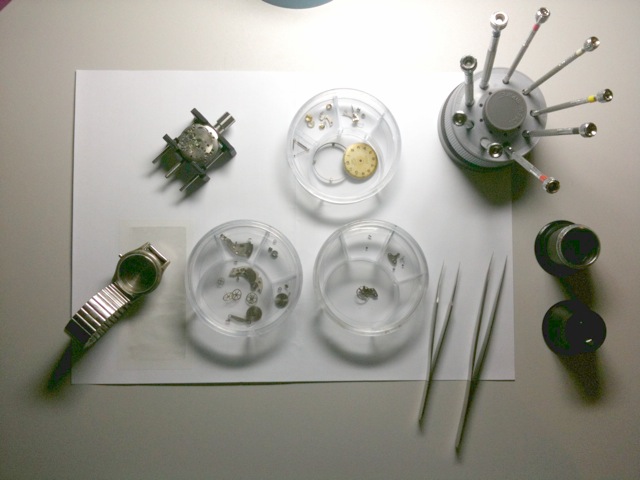

I have destroyed a Swiss watch

I've killed a 17 jewel mechanical Swiss watch. All in a good cause, though. I've been intrigued by mechanical watches for some time, so I finally got an online course in how to disassemble and reassemble a watch. It's really the very basic stuff, but you have to start somewhere. I started with a super-cheap clunker off ebay, and I'm glad that I did, since I promptly destroyed it. To my surprise, I didn't total the balance, but instead broke the head off the crown wheel. Yes, I read that it turns the other way, but then I went and got it confused with the ratchet wheel. Oh, and I broke a little bit off part of the keyless works. Ho hum. I have learnt a lot, though.

I've taken a photo of it in its disassembled state, along with a few of the cool new toys^Wtools I've needed to get it into this state. One of the things I've learnt from these tools is how precise movements human beings can make - working manually down to a scale of 0.1mm is quite doable, and it's astonishing what you can see with a loupe and even just a little practice...

The movement in question is an FHF 28, which has the extra fun of an extra layer due to its way of doing centre seconds. I notice in the photo they have that their keyless works broke in the same way as mine. I think it must be a design flaw!

Anyway, at this point, I have disassembled it all into myriad parts, but I've yet to reassemble it. I do hope I haven't forgotten everything...

Posted 2013-02-04.

The Circle of Fifths part 2 - Scales

When I rambled on about the circle of fifths, one of the things baked into it was the way there are twelve semi-tones in an octave. Various web pages discuss the golden ratio as an explanation for the number of notes, on the grounds that if you include the octave note there are 13 semi-tones, 8 white notes, and then 5 black notes. Hmmm.

So, what's the real thing that controls the number of notes in an octave? It basically comes down to having a note that's very close to a fifth. That is, given even-tempering, so that each semi-tone is the same ratio above the previous note, there is a note very close to 1.5x the base note. As it happens, with a twelve note scale, 2^(7/12) = 1.4983.

Put another way, what we're looking for is good rational approximations to log_2 1.5. And a really useful tool here is ... continued fractions! Continued fractions rock, and I'm rather disappointed they never appeared in the first year of undergrad maths I did. In the end, I learnt about them as a side-effect of learning some number theory for Project Euler.

Continued fractions provide a nice way of created truncated rational approximations to irrational numbers. So, log_2 1.5 is 1/(1 + (1/(1 + 1/(2 + 1/(2 + 1/(3 + ...)))))). According to the Wikipedia page algorithm, the approximations in increasing order of accuracy are 1, 1/2, 2/3, 3/5, 4/7, 7/12, 17/29, 24/41.

So, a 12-note scale is as good as it gets, unless you want a 29-note or 41-note scale. And that sounds excessively complicated.

Where did this Fibonacci/golden ratio business come from? Well, phi = 0.61803..., and log_2 1.5 = 0.58496..., so if you're looking for mystical numbers around 0.6, you might assume the golden ratio's involved. It comes up everywhere else, and 7/12 makes you think of 8/13. A case of mistaken identity!

Posted 2013-02-04.

Miranda and the Whale

Bit of an odd one here, so bear with me.

Miranda, our just-under-two daughter, is fearless. She clambers all over the furniture, and when she falls off she doesn't cry. She looks very thoughtful, and then tries to do the same thing again, until she can do it without hurting herself. The upshot of this is that she's very good at moving around, far better than David at the same age.

Apparently unrelated is the "London Whale", which lost several billion for the CIO at JP Morgan. They traded illiquid, exotic credit products. By no means the key feature, but one of the things you always see in exotics blow-ups, was the dodgy marks.

To mark a trader's book to market price, you need the prices of various things. If the things are illiquid and trade irregularly, this is more difficult than it sounds. Normally, these illiquid price marks are supplied by the traders, since they're active in the market and know what the current situation is. Some sanity-checking is supplied by risk management groups, but that's about it.

So, unsurprisingly, when things are going badly, there's a tendency of traders to set their marks to avoid showing a loss. This can cause further problems, since the marks they use to price their positions are also used to price new trades - if you've got a lot of X, and are mismarking the price high to make your position look better, you'll be saying 'ooh, everyone else is selling X cheaply' - if you can't keep up the doublthink, you'll just make things worse.

The thin, theoretical, connecting idea is 'take the pain early'. If something's going badly, covering it up or ignoring it will just make things worse. If you take the hit early, you can do it in a more controlled manner, learn from it effectively, avoid having waste time papering over the problem, and get it right next time.

This is, anyway, my view on software development (although I think I've said this several times now, in different forms). Sometimes, due to time constraints or whatever, you can't get it all right from the start. Finding the techniques to make sure that the stuff that needs to get done gets done regardless is an important skill.

On the other hand... pretty much everyone senior in the Eurozone seems to believe that trying to resolve problems is somewhat dangerous, and kicking the can down the road for years on end is the best solution. So, what do I know?

Posted 2013-02-04.

Analysing the musical 'circle of fifths'

I'll start with a detour, but bear with me.

It's fun to read children's stories to children. David is just starting to read, so he's starting to learn the concept of rhyming. This means I sometimes explain the rhymes in the story. It then comes back to me how much of the enjoyable flow of these stories is simply rhythm and rhyme, which in turn are complex terms for 'has the right number of syllables in each line' and 'ends with the same sound'.

Of course, this is all the same stuff I did at GCSE, but I never really cared for poetry. When it's a fun story for small children, I haven't bothered to look under the hood at how it's all put together. When I do so, it kind of takes the joy out of it!

Similarly, I have the score for Beethoven's 9th kicking around somewhere. It's amazing how something so magical can, on the page, boil down to what is an awful lot of repetetive, boilerplate-like structure. Working within that framework, there's still enough freedom to create pieces that are fantastic or dross, but having the framework revealed is very odd.

I find the structure of classical music particularly odd, since some things are driven by convention, and some by more fundamental concerns, and it's sometimes a bit unclear which is which. For example, particular intervals sound good because they're very close to simple frequency ratios. At the other end of the spectrum, there are standard forms, like the sonata, which are arbitrary, but provide a framework to build in, and listen in. Inbetween, you have things like the number of notes in an octave, which is semi-arbitrary - the precise number of notes is just something we've learnt to get used to, but the choices are constrained by choosing a number of notes so that our scale can include those simple ratio intervals.

So, in classical music (especially harmony) there's quite a lot of things to learn that 'just are', and it's difficult to tell if they sound good because we're used to it, or because they're fundamental. One thing you learn is the 'circle of fifths' - C major has no sharps or flats, and if you repeatedly go up a fifth you add a black note each time, passing through G major, D major, A major etc. When you run out of black sharp notes the other notes get sharpened, until you work through all the scales, and finally your adding of sharps turns out to be the removal of flats, and you end up at C major again.

Is this something that only works for a conventional major scale, or would it work with whatever interval structure we chose?

Let's start with why we cover all the possible starting notes by repeatedly moving up a fifth. There are 12 tones - an octave has eight 'white notes', including the repeated note, so that's seven notes, plus the five 'black notes'. A fifth is actually an increment of seven semi-tones. Seven and twelve are co-prime, so repeatedly adding a fifth (as addition of seven modulo twelve) will cover all the values. So, depending on the interval chosen and the number of notes we fit in our 'octave', this coverage may or may not happen.

Next, why does moving up a fifth add a sharp? Well, the intervals for a major scale are 'T T S T T T S', where 'T' is a tone (two semitones) and 'S' is a semi-tone. If we rotate the sequence along four elements, to start on the fifth, we get 'T T S T T S T'. The change between playing C major notes starting on G and playing G major is in the final two steps - whether we do a tone followed by a semitone, or vice versa, and that's basically whether the last note is sharpened or not.

What other schemes would work like this? Well (assuming even tempering, so that all 'semi-tone' equivalents are the same size), if your 'scale' consists of a set of 'tones' and 'semitones', the requirement is that there is a rotation of the scale that differs from the original scale by swapping around a neighbouring pair of 'S' and 'T'. For a scheme like the major scale, with a scale of 5 tones and 2 semitones, I think the only scheme where this works is a rotation of the major intervals (which is why the normal circle of fifths doesn't work for minor keys). For scales constructed differently, there are other possibilities.

Finally, why, when we start on C major, do we sharpen the notes whose sharps are black notes first? This is simply because our cycle of scales moves up a fifth each time, so that we're doing an 'almost half octave' step each time. The black notes are in two groups (of size two and three), and we alternate sharpening a note from each group sequentially.

Overall, what does this tell us? We could construct a very similar system with, e.g. decades and a 'circle of sixths', with a group of three black notes then four black notes. Alternatively, by tweaking the intervals used, you could get very different systems where, for example, different base notes share exactly the same notes in their scales. This probably makes modulation somewhat interesting.

At the end, I'm once again left wondering what is really fundamental to music, and what's arbitrary (but fascinating) structure.

Posted 2012-12-13.

A ZX Spectrum tape-loading themed scarf!

Enjoying my recent success in crafty things, I had a go at creating something I'd wanted for quite some time: A ZX Spectrum taped-loading-themed scarf! A very rough introduction is that the tape loading was accompanied by horizontal coloured bars in the border area: it consisted of even red/cyan bars (accompanied by a constant tone), followed by uneven yellow/blue bars, representing the data itself. So, I wanted a scarf with red/cyan stripes at the end, and yellow/blue in the middle.

I'd wanted one for a while, but didn't think exactly anyone would want to make one, certainly not to my odd and exacting standards! After doing the cross-stitch business, I realised I could do it. I'd never knitted before (or since), and I only learnt how to knit - still no idea about purling! However, this was enough to get me going.

So, the gear: I opted for 6mm bamboo knitting needles. Apparently wood is good for beginners, as it's not too slippy, so less chance of it all falling off the needles (a bad thing). 6mm is reasonably chunky, but that goes with chunky wool, if you want to do chunky knitting! Chunky knitting means less knitting to cover a certain area, and given my impatience, that seemed like a good thing. For a scarf, chunky knitting looks pretty ok.

Then, I bought wool. This was a surprise - the colour selection is rubbish. Even going to a big online store, it's pretty limited. I wanted really bold primary colours, and... the selection is poor. I ended up with 50g balls of 'Bergere de France Magic+' in Brique, Estuaire, Seneve and Gynura. This is a 'chunky' wool, and handy interweb scarf calculators told me that 4 balls would be about right for the scarf I was looking at. I should have perhaps got a bit more, but at around a fiver a ball, I thought it'd be overkill.

So, onto the knitting itself. Each stripe was a multiple of two rows (across and back), so that the colour-changing always happened on the same side, simplifying my life. I was quite surprised how easy it was to get a reasonable pace with the knitting - nothing insanely fast, but it doesn't take much practice to get going. Of course, in places I have uneven tension or lost or gained stitches and other weirdness, but reasonable uniformity came pretty quickly.

Within the tolerances of the pattern, I tried to get the relative band widths correct, using the timings from The Spectrum ROM Disassembly. This book also provided me with the details of the encoding and colour pattern. So, I knitted the lead tone, the sync bit, the data block header byte (0xFF, I think), 'SF' in ASCII, and then the parity byte. Unfortunately, at this point I ran out of wool (I had plenty of red/cyan, but not yellow/blue). So, I finished off with another red/cyan lead tone. (One thing I hadn't really realised before is that you can only get one side having the exact pattern you want - the other side switches the lines about a bit - you can see this in the picture with David).

In effect, the scarf contains data as if the end of the data block were overwritten by another lead tone, just like the kind of stupid mistakes I used to make when recording on the Spectrum! If you tried to load this scarf, the data would load successfully, but you'd get an 'R Tape loading error'!

How happy am I with the result? My itch has been scratched. The quality's not perfect but it's good enough, the length is just a little short (but I couldn't face spending 30 pounds just on wool!), yet it covers my neck, it has pedantic attention to detail on the technical side, I learnt to knit, and it amused my work colleagues. Can't ask for more than that, really...

Posted 2012-11-18.

8-bit cross-stitch: Head Over Heels

Buoyed up by my 'success' with the CPU display, I decided to take on another project I'd been thinking about for some time: 8-bit pixel art cross-stitch. It's always struck me that cross-stitch is basically pixel art, except it was pretty much unaware of it. And there's some rather good, or at least nostalgic pixel art from the '80s and '90s that deserves resurrection. And cross-stitch can't be that hard, can it? I mean, it's all girly and everything.

So, I cross-stitched up the main characters from the ZX Spectrum classic Head Over Heels, and you can see the results thereof. I think it's turned out rather well! On the details side, I used 14 count white Aida material, with size 24 cross stitch needles. I used 3 strands of DMC stranded cotton, colours 310 (black), 996 (for cyan) and 307 (for yellow). The design was taken from a screenshot, with the image fed through www.myphotostitch.com to convert it into cross-stitch instructions.

It turns out that cross-stitch is quite straightforward, but rather slow and tedious. I'm glad the image wasn't too large! I was originally considering making it double-size (4 squares per pixel), but decided not to as it was my first go. I'm very glad I didn't. I still have to mount the result, which will be a whole new pile of hassle, I'm sure.

After doing this work, I discovered spritestitch.com, which does lots of this kind of stuff. I didn't sign up. I mean, they've all got to be weirdos, right? ;)

Posted 2012-09-30.

Minor successes in geeky craftwork: RISC CPU display

Hennessy and Patterson's Computer Architecture: A Quantitative Approach was the first really good textbook I'd read. It was a proper advanced undergrad textbook. It covered things from first principles, with detailed, highly-readable explanations that went on to advanced topics. The references to the literature both demonstrated how this tome of most of a thousand pages was but an introduction to the field, but also showed how to get to the cutting edge of the field.

And one of the great things about it was its real quantitative approach. Design trade-offs were illustrated through benchmarks, applications of Ahmdahl's Law, etc. The excitement of the RISC revolution was palpable in the text. At the time, if you wanted a high-end workstation, you didn't get an x86 box, you got a computer with a RISC CPU. Five main architectures were used as illustrations throughout the book - the Alpha, PA-RISC, MIPS, Sparc and PowerPC. Now, only Sparc is holding on by its fingernails in the server market. We're in a strange world where x86 is still king.

When decorating my study, I wanted to produced something which harked back to the time when it briefly seemed that logic might beat marketing momentum. A little display of examples of each of the architectures (with chips taken from decently spec'd machines - no getting the MIPS out of a washing machine!). This was a few years ago. A bit of ebaying got me a selection of chips - along with some rather funky heat sinks. However, with children, I never found the time to assemble the display.

Finally, though, I have. Not particularly impressively - it's rather half-done, but as a parent, this is small victory for me! Ideally I'll find time to build it into something more case-like, but I'm surprisingly proud of myself!

Posted 2012-08-27.

Online courses

I've been taking some online courses through Coursera, a co-operation between Stanford and some other places. I originally signed up for 'Model thinking' (a rag bag of quantitative and semi-quantitative models for social science), 'Game theory' and 'Information theory'. The latter shows one particular problem: The course never happened. The other two merely had delayed starts. The other problem is that the courses tend to be very much introductory-level. They assume nothing, and tend to let mathematical detail slide. Don't pretend for one moment that you're attending Stanford. I guess they're pretty accessible, though.

The content is generally pretty good, there are review quizzes and the like, and one of my favourite features is that you can watch the course video at up to two times normal speed. It turns out I can listen a lot faster than people like to speak, especially if I can pause the video for a good think should something subtle or clever appear.

The 'Model thinking' course was mathematically very simple, but it opened up a new way of thinking to me. I'm used to the physicits 'Put it all together into a unifying framework' approach, with models that can be complex, but are (mostly) testable. Social science lacks a grand unifying theory, but instead you can make do with very simple models to get a qualitative feel for behaviour. When you have a problem, throw as many different models as you can at it, and see how they agree.

'Game theory', as an introductory course, didn't feel like it taught me much I didn't more-or-less know already, given I'd read a not-very-good text on the subject a few years ago. On the other hand, it pressed the knowledge home, through explanation on videa, plus a variety of quizzes. It demonstrates the strengths and weaknesses compared to, say, just buying yourself a big textbook and reading it. The depth won't be as great and/or the pace will be slower, but it seems to me to be a much more effective way of jamming knowledge into your head, even if there isn't personal feedback from a tutor.

I'm now working through 'Machine learning'. My view of AI is... not wholly positive, and I'd kinda got the feeling of this kind of stuff as people farting around and not really getting anywhere. However, in the last decade, it's all started to click. I mean, Google's doing translation using machine learning and statistics, cheapo cameras have face recognition built in, and all this kind of stuff. Huge data sets and huge computing power, plus new research have made all kinds of stuff possible.

'Machine learning' isn't trying to screw around passing the Turing test, either. What I realised when looking at this course is that it's basically fancy forms of regression and classification. Indeed, the course starts off with linear regression! In a sense, it's all super-fancy curve fitting. There are programming exercises, including cool stuff like using neural networks for OCR.

As I said, though, these courses tend to be rather introductory level. The pacing of the course and exercises means that you've got a better chance of absorbing the basics than just picking up a big textbook, but I don't think it really suffices. So, I have bought a big book, namely Bishop's Pattern Recognition and Machine Learning. I'll see how I get on with that.

And there are plenty of courses I'd still like to do. I'm having to ration myself to prevent either me or Caroline from going mad, but it'd be nice to do the machine vision/image processing course...

Posted 2012-07-31.

Mac-fixing Encore

To follow up on the MBA fix, here's what I then pulled out of the DVD drive of the Mac Mini that sits by our TV. Ah, children.

Posted 2012-07-17.

Fixing a Macbook Air

Aaages ago, my Macbook Air broke. The hinge snapped, doing nasty things to the display, and the official Mac repair route would have been so pricy that it didn't quite seem worth it. I bought another MBA second-hand, and my original came in handy for spares when the disk in the second MBA broken (the disk in my first MBA was already a replacement. They don't seem terribly reliable to me).

I finally got around to replacing the broken hardware. Getting an Official Mac Guy to fix it would have cost too much (especially given it's an original 2008 MBA, and thus nicely out of date). Using official Apple parts would have been too much. Even dodgy OEM parts where expensive enough.

What actually happened is the metal hinge 'clutch' broke in two. The pieces spronged, and ground against nearby bits of case to break the plastic antenna cover. Also, because the hinges were no longer lining up properly, moving the display caused the hinge assembly to eat into the LVDS cable, until the screen display stopped working.

So, new hinge clutch, antennta cover and LVDS cable. Oh, and a replacement disk, for the one I scavenged earlier. I settled on an SSD this time. Maybe it would be more reliable. I had to fully disassemble the MBA, including ungluing bits of the display assembly. It now works (better than the other one, thanks to the SSD), even if the display bezel looks a bit dodgy.

I feel I should put up a picture of all the broken parts (apart from the disk - that just looks like a working disk), so here you go. You can see the hinge clutch in two parts, the antenna cover with a bit broken off, and the LVDS cable with a bit of a cut in it. All very exciting.

Posted 2012-07-15.

Paper round: Distributed Systems

I recently realised, to my horror, that I really haven't been keeping up with a fair old amount of research. So, I decided to catch up on 'distributed systems'.

Back when I was an undergrad, we had a course on 'concurrent systems', which dealt partly with locking and race conditions and stuff, and then did a little bit of the distributed end of things. When I finally discovered that expensive 'middleware' was mostly fancy networking wrappers, I was remarkably disappointed and underwhelmed. Making a pile of networked PCs work together sounded like the boring end of research.

Since then, distributed systems have become Key To The Web. Real practical uses, with real practical requirements, instead of half-hearted academic systems. Things have come a long way, and I just haven't kept up. It's rather ironic, really, since my first job out of uni was working on compute farm infrastructure. This managed a queue of short-run compute jobs, and associated data distribution, so it's nothing to write home about algorithm-wise, but it taught me a lot about middleware, failure modes and performance. And then, I moved on.

I didn't track the research, which is a bit embarassing given all these famous papers by Google, etc. I am finally catching up on the area. It's not exactly practical to set up a grid at home, but reading the theory is better than nothing...

Here are my paper mini-reviews. If I were still acting like a proper researcher I would provide a proper bibliography, but these papers are well-known enough that paper titles suffice.

The PageRank Citation Ranking: Bringing Order to the Web Ok, it's not a distributed systems paper, but given it's what effectively kicked off Google, it seemed worth reading. I'd never actually read the details of the PageRank algorithm, but it's both simple and clever. Elegant, in other words. The paper is well-written and clued up, and having read this paper I don't begrudge Brin and Page becoming billionaires! It also reminded me how bad web search had got by that point. The example web search for 'university' had a 'major commercial search engine' producing more-or-less junk, whereas PageRank produced something basically sane.

The Anatomy of a Large-Scale Hypertextual Web Search Engine 'Large-Scale' is severely relative. What was large back then is tiny now. This paper is pretty much a partner to the PageRank paper, focussing on system architecture, both in terms of how the algorithms are glued together, and how they are parallelised. This early-days paper is perhaps not terribly relevant now, but is certainly interesting (if only from a historical perspective :p ).

Web search for a planet: The Google cluster architecture A not terribly informative introduction that tells you that web search is parallelisable, and that best bang-per-buck is achieved with mid-range commodity hardware. Hmmm. Well, maybe it's not that bad, but it's not an earth-shattering paper.

The Google File System A very readable paper, describing Google's big, distributed file system (used to manage hundreds of terabytes across thousands of machines). Demonstrates a nicely simple design - don't bother with full Posix, and everything is entirely user-space (building the server on top of the native file system). Funny consistency model, neat operation log.

The Chubby lock server for loosely-coupled distributed systems After the GFS paper, quite a disappointment. Chubby provides a smaller, simpler file system with locks on the files. It's mostly used to hold configuration for other systems, provide locks for other systems, and provide a mechanism for other distributed systems to elect masters. Bits of it feel like a nameserver with subscription/non-timeout-based caching. This all-in-one behaviour, coupled with a lack of details on common usage patterns made it feel like a bit of a wishy-washy design to me. What problem is it really trying to solve? Yuck, yuck, yuck.

Bigtable: A distibuted storage system for structured data This is really just a key-value-ish database built on top of GFS. It feels like another simple design, done well, explained well. The immutable design is rather neat.

MapReduce: Simplified data processing on large clusters Another popular yet underwhelming paper. The idea is simultaneously neat and straightforward: perform your grid calculations in a stylised map and aggregate format, and use that structure to make the gridded implementation simple, efficient, etc. The 'reduce' part is basically a monoid, and I think that whole area could be cleaned up by talking about it in that way. But maybe I'm just a Haskell nutter.

Dynamo: Amazon's highly available key-value store The paper doesn't start off well. It reads like an advert for just how darned brilliant Amazon are. As you read through the paper, either the advertising falls away, or you become immunised to it. What's left is a rather good paper. Dynamo is highly optimised for uniform good performance, and the paper goes into a lot of detail about the engineering. There's a lot more focus on the algorithms than in the Google papers, and it feels like they've built a complex system on top of pre-existing research, in a way that the Google descriptions don't. It's well-written and introduced me to various algorithms and ideas I hadn't really looked into before.

MAD Skills: New Analysis Practices for Big Data Randomly recommended by Wikipedia as an influence on modern Big Data stuff, this paper tries to sell the idea of agile practices for Big Data, rather than creating a fixed schema data warehouse and making it difficut to pile new data in. The start of the paper felt like it was trying too hard (UrbanDictionary.com reference, anyone?), but it soon settled down, into a spirited promotion of an agile approach (at the expense of a somewhat straw man waterfall approach, it seems), plus an explanation of how to do linear algebra and machine learning in SQL. I'm still somewhat in two minds about SQL for Big Data, but there you go...

Apache Hadoop Goes Realtime at Facebook Another Wikipedia recommendation, and the first one to really dig into using Hadoop. A fairly neat introduction into what it takes to pick up Hadoop and use it for a production-scale and production-reliability real-time system. An interesting combination of showing that such open source software in this area can be used for the big time, and what customisations Facebook needed to make. Technically, compared to most of the above papers, it's not much, but in effect it's showing a flattening in the technology curve - you can now get the kind of software previously only described in research papers off the shelf.

So, I've read enough papers to keep me going for a while. On the other hand, it's shown me how much more stuff I should really read up on. Cool things that I read about that I want to read more about include:

- Paxos and Byzantine fault tolerance, etc.

- Gossip protocols and anti-entropy protocols

- Bentley-McIlroy compression

- Turbo codes, erasure codes

- Consistent hashing

- Merkle trees

- Log-structured merge trees

Plenty to go!

BONUS PAPER: "Ulrich Drepper's How To Write Shared Libraries" One of our work projects is a library that works on both Windows and Linux. Once I'd discovered that Linux shared object files are dynamically linked purely by symbol names, not by library name/symbol name pair, I couldn't believe it. Ok, it gives you LD_PRELOAD, but leads to so many other possible brokennesses. I went and read a paper on it:

This (long) paper goes into excruciating depth about how it all works, and all the pain involved. It's very readable and comprehensive, and tells you all the things not to do, plus various micro-optimisations suitable for making a really efficient libc. It covers API and ABI compatibility, and is generally a good read, even if you can tell from time to time that English isn't his first language.

Posted 2012-07-11.

Apple's Time Machine

Apple's Time Machine is an interesting piece of technology. The GUI front end is friendly, simplistic, and brittle. I used it to back up my Macbook, but once the disk died, I couldn't use the GUI to access the network back-ups of the lost machine!

I think this is partly as I had to tweak it originally a bit to get it to back-up to something that wasn't a particularly Apple-supported network share, or perhaps it was because the Mac I was trying to access it was used a different version of MacOS.

Anyway, I was now somewhat frustrated - perhaps vexed even, as I couldn't access my back-ups. However, behind the scenes, all it is is a 'sparsebundle' disk image, containing a list of dated directories containing the entire filesystem as of that time. Very straightforward to access once you're in!

It wasn't quite that straightforward in practice - there were random error messages until it got itself into a sensible state, but still... not bad!

The technology that makes this work is rather nice. The collection of directories share as much data as possible with minimal overhead by having hard links on directories! And to decide what needs to be backed up, the filesystem tracks directories modified since the last back-up. The transparency and simplicity of the back-up system, within the constraints of it being efficient, are rather neat. It does feel (to me, at least) that while it pushes the technology forward, it stays true to the Unix approach.

And then they put a glitzy but brittle GUI on top. :)

Posted 2012-05-10.

Ignoring non-sensical solutions

Warning: Contains simple puzzle spoilers!

On and off I've been working through Rae's Quantum Mechanics. One of the things that annoys me is the way the solutions to problems are chosen. Take a particle in a potential well: A differential equation is given, solved, physically non-sensical solutions are discarded, and what remains must be the solution.

How on earth can we justify only considering the 'sensible' solution? Can we really claim we have a mathematical model if it involves such judgement calls?

It reminds me of a simple mathematical puzzle. We have a cell, which, every time step, dies with probability 1/3, or otherwise splits into two cells, each of which follow the same rule in future timesteps. The children behave independently. What is the probability it survives forever?

If x is the probability it doesn't survive forever, this is because it either dies in the next step, or both children must die - as they are independent, the probability of this is x^2. So, x = 1/3 + 2/3 x^2. Solving this gives x = 1 or 0.5. Ignoring x = 1, we have the answer x = 0.5. But... why can we ignore the incorrect solution of certain death?

One way to look at it is as a convergence issue. If you plug in numbers around 0.5 into 1/3 + 2/3 x^2, you get a number closer to 0.5. If you plug in a number just under 1, it'll end up further away from 1. This shows that 0.5 is a 'stable' answer, but 1 isn't.

However, this still isn't convincing. What you can do is define x_i as the probability of the colony dying out in i steps, with x_{i+1} = 1/3 + 2/3 x_i^2. We can then say the colony death probability is the limit as i goes to infinity, and show that the limit is 0.5, if x_0 is in the range [0,1), thanks to the above convergence properties, and solving the quadratic is simply a way of finding the converged value for the real solution.

In a similar fashion, I believe the differential equation isn't the real quantum problem. It's an equation that represents possible equilibrium solutions, but misses out how to select the real one from all the possibilities it brings. Somehow, there is a mathematical phrasing for the real problem we're trying to solve, one that eliminates the 'non-sensical' solutions automatically, but I'm not sure what it is.

Posted 2012-03-24.

On Simplicity

Again and again I see designs that are just... wrong. Given the choice between doing it right and doing it simple, the choice is 'simple'. I'm not the only one to notice this.

Unix is a traditional example. It's as simple as possible. Look at the file API. Let's say that you want to read some data. You can read(2) it. If we're streaming the whole file in, a bit of prefetching would be nice. If we're trying to read regularly-sized records from all over a file, we want different behaviour. The API ignores the notion of record sizes and advance knowledge of access patterns. Any optimisation has to be heuristic.

Perhaps worse is atomic file update. Also known as: Write to new file, hard-link old file to back-up file, move new file over old file. Moreover, there's magic in the semantics of the file system to make this work: the writes to the file should be committed to disk before the move is, so that you don't get a partially-written file in the case of a crash. Ugly hacks to do a conceptually simple operation.

Or look at TCP/IP. The internet's favourite way of distributing video. There's no quality of service built into basic TCP/IP, and a packet-switched network is just the wrong solution for that kind of problem.

Way back, PalmOS was another example (like MacOS before it) - a co-operatively multitasking OS. Simple to design and implement, great for lightweight hardware, able to beat its pre-emptively multitasked competitors, but you know that in the end it'll be running on more powerful hardware doing more complex things, where the original design looks horrendously underpowered.

This is really the key. A complex up-front design will be bad, since you don't understand all the corner cases, people don't want all the infrastructure yet, the current hardware can't really support it, and you'll be trounced in the marketplace by a simple design that works right now.

Real good design is one that can evolve. Unix started off life as an OS for an impressively under-spec'd machine. Amongst other limitations, one thread per process, no real-time guarantees, uniprocessor model, certainly no graphics or networking. Even when Unix had been extended, Linux started as an x86-specific (and uni-processor) monolithic kernel Unix clone. Somehow, the design has extended in a way that has not fundamentally compromised it. Sure, it's warty, but it's somehow scaled. TCP/IP's not dissimilar. Despite its grimness, video over TCP/IP is working out mostly ok.

Sometimes, even ignoring the evolution over time, doing the simple wrong thing is better than the right thing. When I heard about Erlang's error model (basically, 'if something goes wrong, explode, and have a hierarchy of processors that can deal with children exploding rather than attempting to recover), I was horrified. However, it sounds like the right thing, in the end. Error handling is tricky to write, tricky to test, and errors in error handling could make for a nice cascading failure. Playing it dumb may make it more safe and reliable than trying to think hard.

Einstein's maxim to make it as simple as possible, but no simpler, seems to have some relevance. The lesson is, make it simpler than you think 'possible' would be - you might be surprised. On the other hand, make it extensible, so that success and scope-creep doesn't induce long-term failure.

Posted 2012-02-27.

Differential 1-forms, again

A while ago, I tried to make sense of differential 1-forms by thinking of how they're used in terms of a type system. I got approximately nowhere fast (you can see the post!). I've recently been reading Penrose's Road to Reality which describes them in near-laymans terms, and this seemed a good point to try again...

He defines a vector field as a function which takes another function (from point in the manifold to real value), and then at each point calculates some weighted sum of partial derivatives, effectively taking the derivative in a particular direction. i.e. a vector field E acts on a scalar function P defined over the manifold - E(P). E is of type (Point -> Real) -> (Point -> Real).

He then defines dP = dx dP/dx + dy dP/dy + ... (you'll have to insert your own curly 'd's as appropriate), where the dx and dy and so on are differential 1-forms. Thus, ahem, dP . E = E(P). For some variant on a dot product which is not really explained.

So, one minute E is a function, then it's something you can dot product. This is probably in some vector space of functions, but the whole thing seems pointlessly messy to me.

Instead, I decided to build my own mental model, and assume that whatever the books have is just waffle disguising it.

Taking the derivative of a function is basically identifying the linear transform which it's locally like. In Euclidean space, taking the derivative of an R^n -> R^m gives you the information about a R^n -> R^m linear transform. However, for a manifold, the space you view the derivative in (a vector space) may be rather different from the space you started in (something curvy). This space for derivatives is the tangent space. The tangent space for the reals is the reals, so if you have a manifold M with a tangent space T, taking the derivative goes from M -> R to T -> R.

Thanks to duals, this derivative can also be represented as a member of T. We can then view the dx, dy etc. as basis elements of the tangent space, and dP as an element of the tangent space representing the derivative.

The tangent space allows you to tack directions onto each point. In other words, a vector field can be viewed as supplying an element of T for each point in M. This gives us a way of representing E. Happily enough, dP . E gives us the thing we were after.

Overall, I think I prefer defining dP as a function of type T -> R. That way, the covariant scaling effects are made clearer, and you can probably get away without a dot product (haven't thought hard about that second part). If you change the scaling on your parameterisation of the manifold, when your vector field entries increase in measured length, the derivatives (as a function from the tangent space to reals) also decreases proportionally, so that the final number you get stays the same.

I'm sure I've swept a lot of clever and subtle issues under the carpet here. However, I feel the textbooks' approach does too. Until I can get a handle on an alternative well-typed interpretation of these things, this will do nicely for me.

Update: I underestimated Penrose. In a later chapter he clarifies his handwavey notation. I have been using the dual of the normal convention. The vector field assigns each point an entry in its tangent space. Interpreting this vector space as the space of functions taking derivatives in all directions gives us the 'vector field as differential operator' view of the world. Treating it as a vector space, the dual space of functions from the tangent space to the reals is the differential 1-forms, aka covectors. The 'dot product' we see is really just applying one to the other, and we end up with the contravariance between the two as needed.

Penrose's rather nice geometric intuition of this is that vectors work as you expect - their length is as you understand, but covectors represent density. 'dx' can be thought of as the hyperplane of points perpendicular to the direction x, and represents a density of stuff in that direction. I'm still working through this chapter, but I expect this will fit quite nicely with integration...

Posted 2012-02-13.

Sony and Abstraction Failure

Being nasty to Sony is a bit like shooting fish in a barrel, but it won't stop me doing it.

I saw a Sony iPod dock in the shops the other day. Gosh, how the mighty have fallen. Sony admitting the existence of another company? I had to check that the dock wasn't only compatible with a special Sony iPod-alike.

The combination of seeing this Sony stuff and having played with my ickle iPhone for a few weeks made me really think about the differences between Sony and Apple. They both produce beautiful looking products. I'm generally a fan of Sony hardware. The firmware is mediocre at best. Their software is awful (Remember when they tried to install rootkits on customers? Pretty par for the course, really). Their networks have been hacked and millions of customers' details lost.

Fundamentally, Sony can't do abstraction - the more high-level something is, the worse it is. The WalkMan worked because it was largely mechanical, and lacked any embedded CPUs. I suggest Sony move into brick-making.

Posted 2011-11-17.